Building a VPC with a Load Balancer and EC2 Instances using Terraform!

Journey: 📊 Community Builder 📊

Subject matter: Building on AWS

Task: Building a VPC with a Load Balancer and EC2 Instances using Terraform!

This project practices Automation and Reliability.

Of the six pillars of the AWS Well-Architected Framework, this build focuses on Operational Excellence, Security, and Reliability.

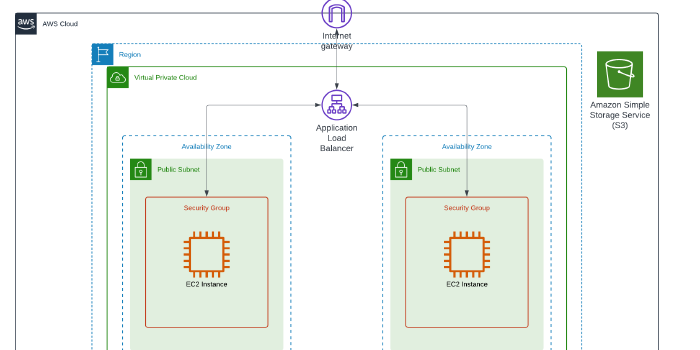

Following on from last week, when I built a Transit Gateway between VPCs, I wanted to switch to building an Application Load Balancer (ALB) that serves EC2 instances in multiple Availability Zones (AZs).

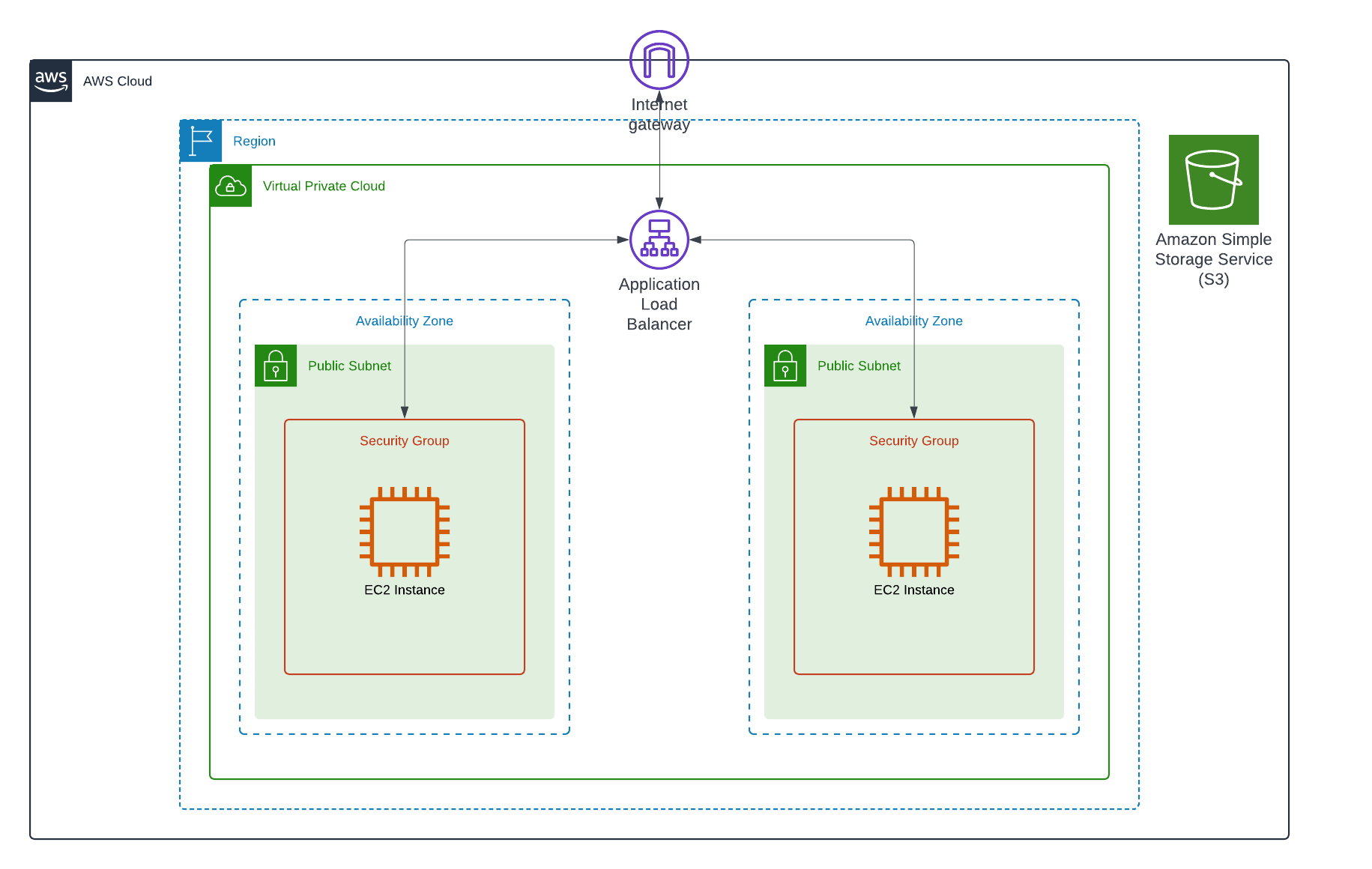

This week, I built a VPC which hosted an ALB that served two EC2 Instances. I am continuing to practice automation using Terraform.

A Load Balancer distributes incoming application traffic across multiple targets, which are two EC2 instances in this project.

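Putting an ALB in front of instances in more than one AZ improves availability. The ALB routes traffic to targets that pass their health checks, so if one instance fails, the other keeps serving the site. Adding an Auto Scaling Group would also make the environment scalable.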
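To make the availability point concrete, here is a toy Python model (not AWS code) of health-aware routing with one instance per AZ. The target names and AZ labels are hypothetical placeholders. It deliberately ignores edge cases, such as how a real ALB behaves when every target is unhealthy.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Target:
    """One registered target, such as an EC2 instance (names are hypothetical)."""
    name: str
    availability_zone: str
    healthy: bool = True


def eligible_targets(targets: list[Target]) -> list[str]:
    """Names of the targets passing health checks, i.e. those that may receive traffic."""
    return [t.name for t in targets if t.healthy]


def site_is_up(targets: list[Target]) -> bool:
    """True while at least one target can still serve requests."""
    return bool(eligible_targets(targets))


# One instance per AZ, as in this build (AZ names are placeholders).
web_a = Target("web-a", "az-1")
web_b = Target("web-b", "az-2")
fleet = [web_a, web_b]

web_a.healthy = False           # simulate web-a failing its health checks
print(eligible_targets(fleet))  # ['web-b']
print(site_is_up(fleet))        # True

web_b.healthy = False           # now both instances fail
print(site_is_up(fleet))        # False
```

Losing a single instance (or its whole AZ) leaves the site reachable, which is the main reason to spread targets across AZs.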

Resource credit: This IaC architecture was created using guidance from Sohil Doshi on Medium.

What did I use to build this environment?

- Visual Studio Code platform

- Terraform

- AWS CLI

What is built?

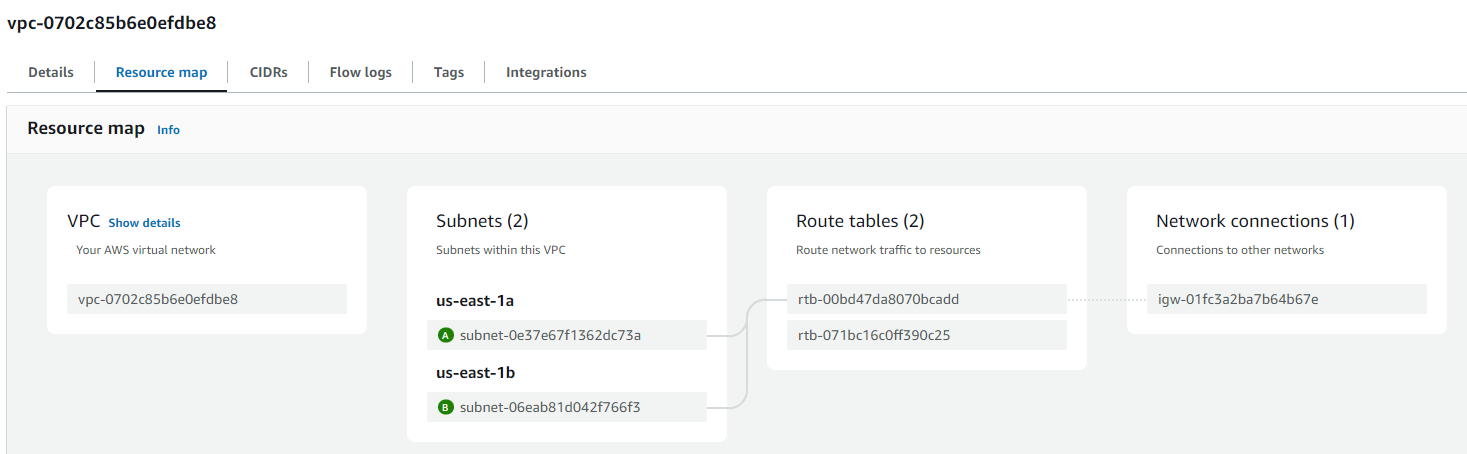

- A single VPC

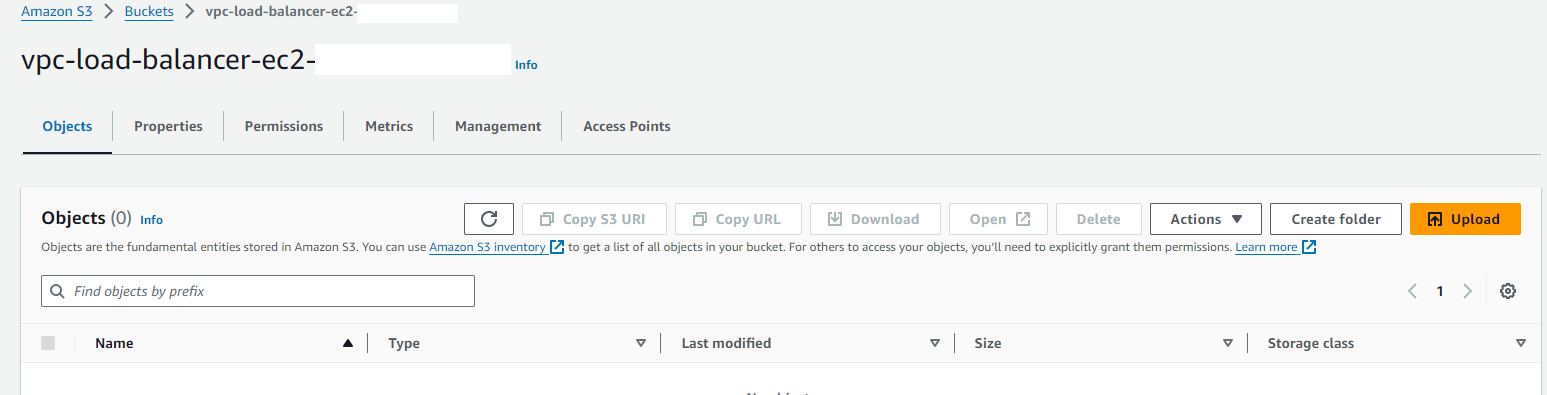

- An S3 Bucket

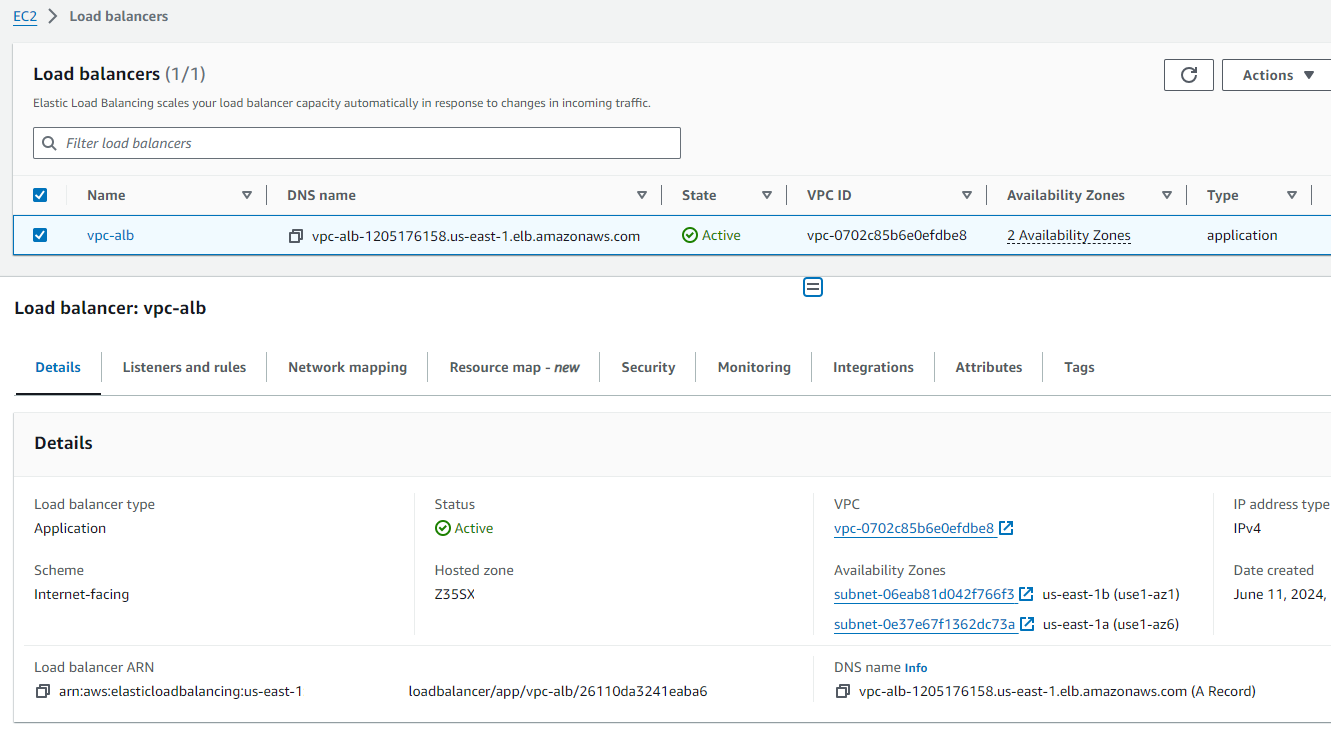

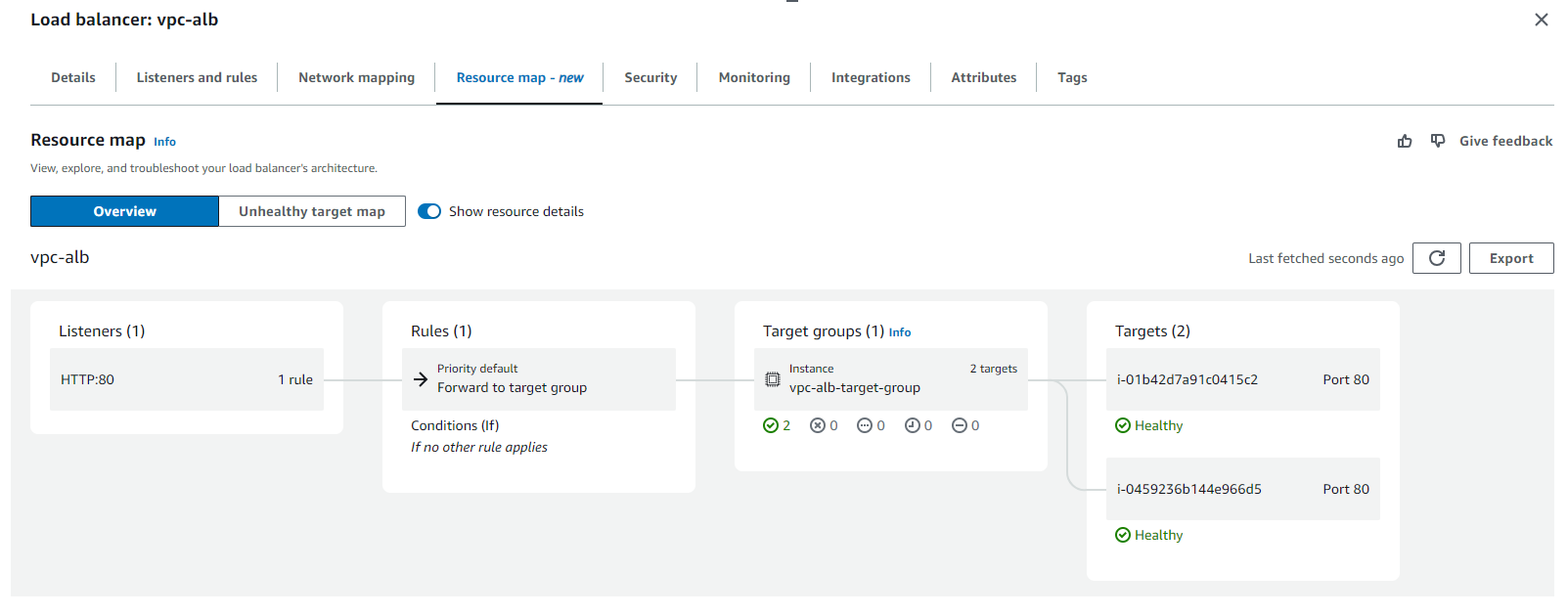

- An Application Load Balancer (ALB)

- ALB Listeners

- ALB Target Groups

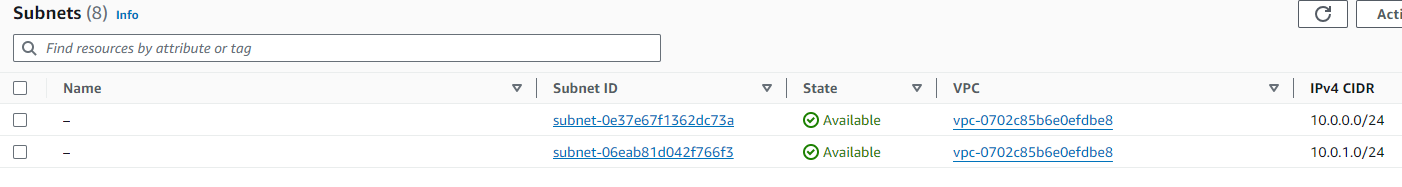

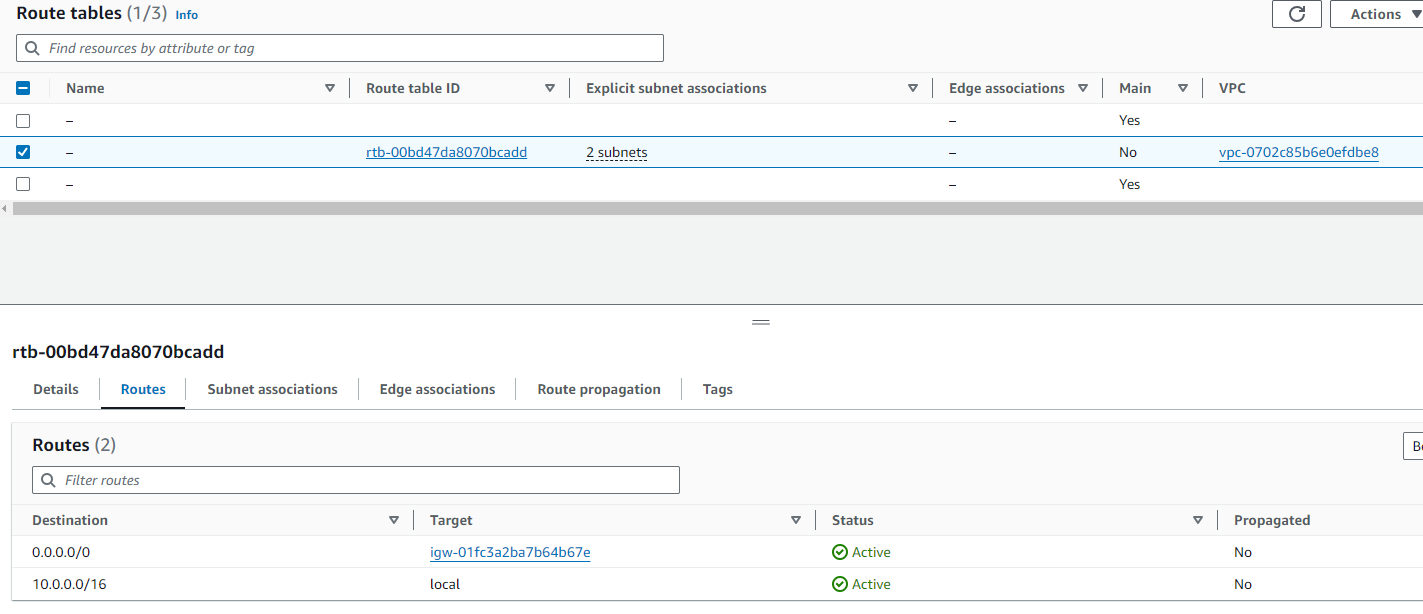

- Custom Route Tables

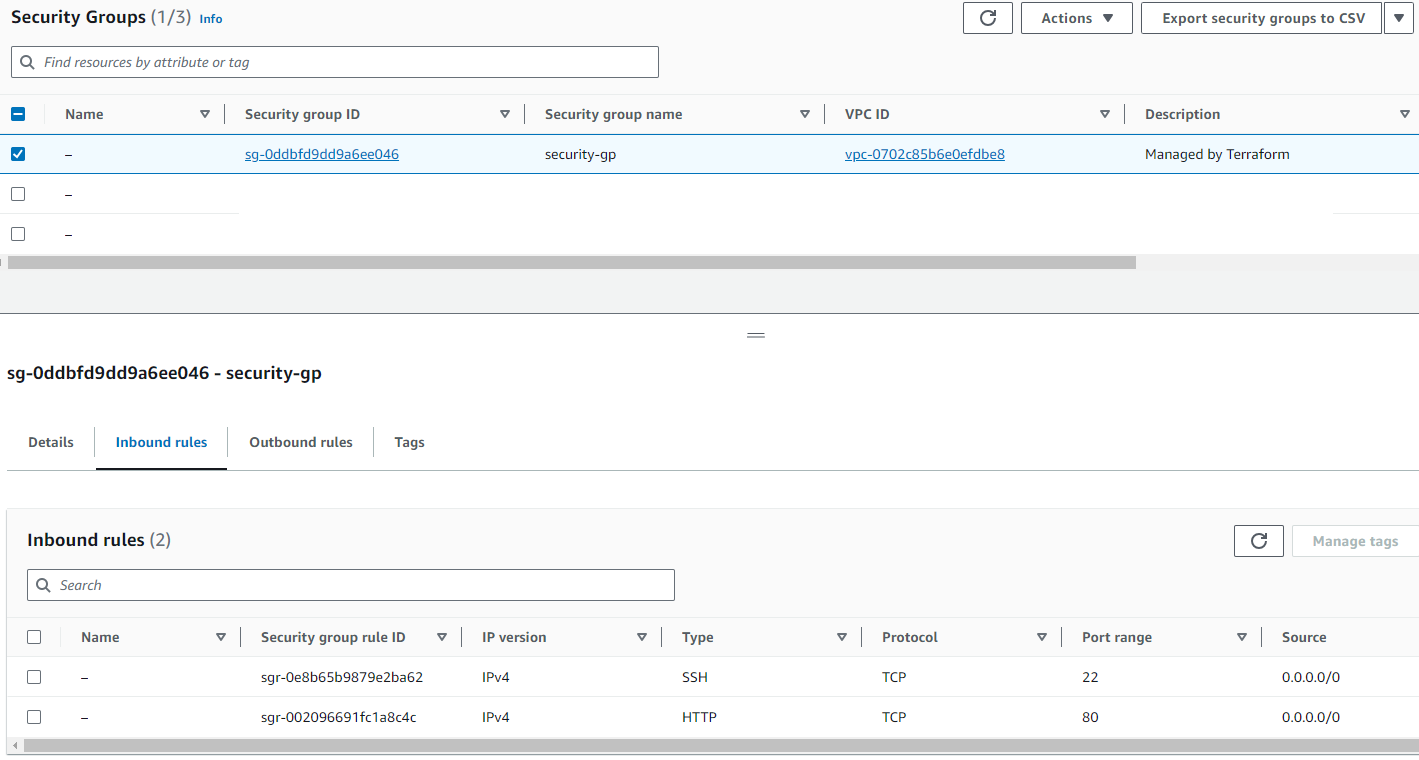

- Security Groups

- An Internet Gateway

- Two EC2 instances

- Two websites, one hosted on each instance

More information on Load Balancers can be found here: https://docs.aws.amazon.com/elasticloadbalancing/latest/application/introduction.html

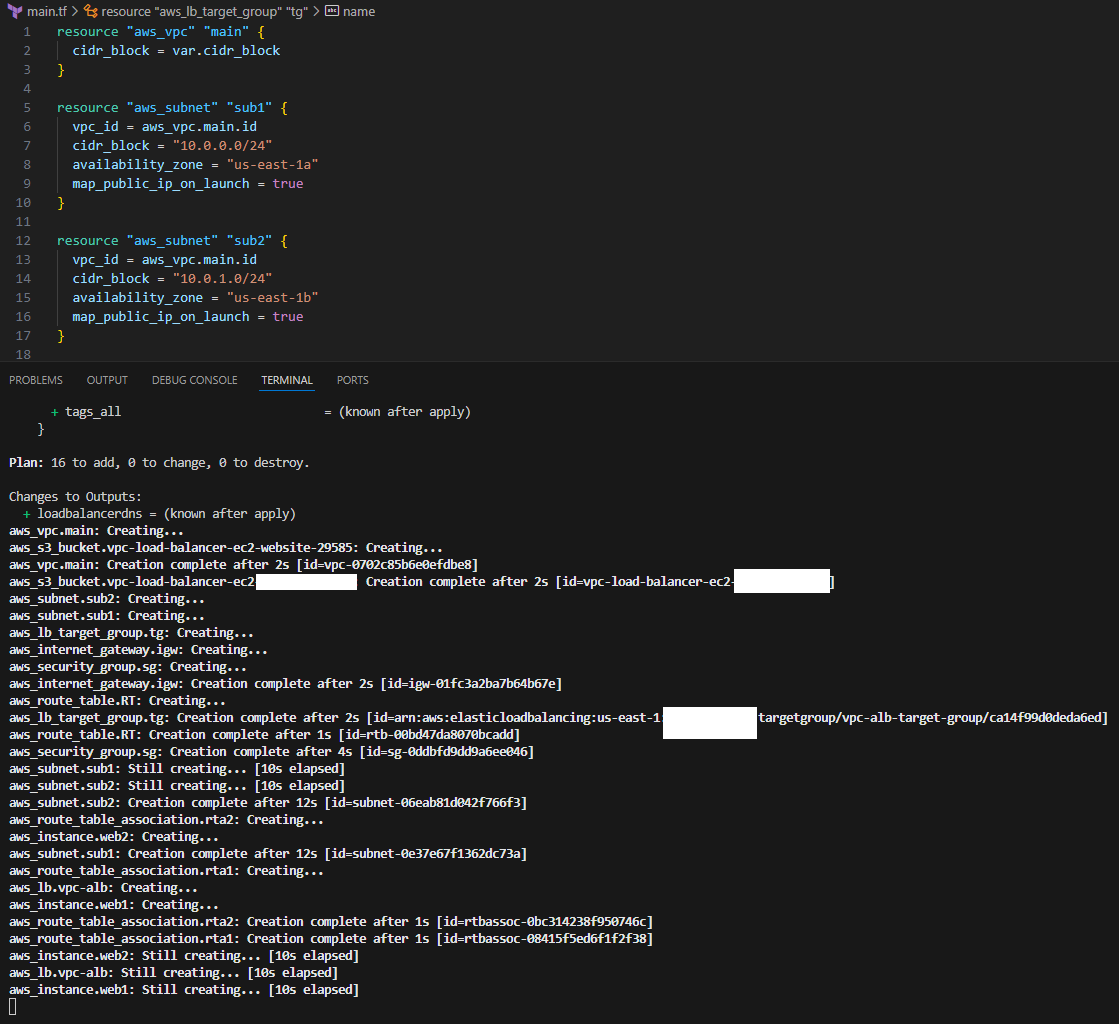

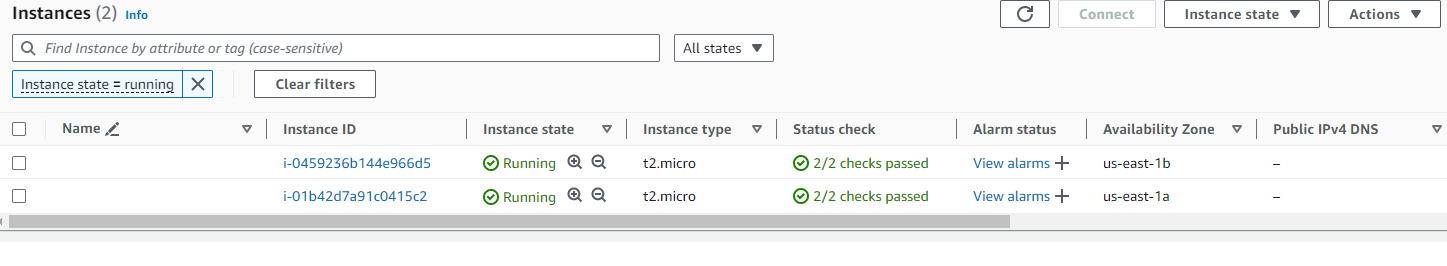

In this task, I used Terraform to create a single VPC and an S3 Bucket. I built an Application Load Balancer to distribute application traffic evenly and hosted one EC2 instance in each Availability Zone. I used custom Route Tables to send internet-bound traffic through an Internet Gateway, and Security Groups to control which traffic could reach the resources, so the web content on the public-facing EC2 instances was accessible from the public internet via the ALB.
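The networking side of this layout can be sketched with Python's standard `ipaddress` module. The CIDR ranges below are hypothetical (the article does not state the ones used), and "igw" is just a placeholder label for the Internet Gateway. The sketch gives each AZ its own subnet and shows how a public route table picks a route: VPC route tables use the most specific matching route (longest prefix match), and AWS reserves five addresses in every subnet.

```python
import ipaddress

# Hypothetical addressing plan: the article does not state the CIDR ranges it used.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")
AZS = ["az-1", "az-2"]  # placeholder names for two Availability Zones

# AWS reserves five IP addresses in every subnet (the first four and the last one).
AWS_RESERVED_PER_SUBNET = 5

# A public route table: VPC-internal traffic stays local, everything else
# goes to the Internet Gateway ("igw" is a placeholder target name).
PUBLIC_ROUTES = {
    VPC_CIDR: "local",
    ipaddress.ip_network("0.0.0.0/0"): "igw",
}


def plan_subnets(vpc, azs, new_prefix=24):
    """Give each AZ its own non-overlapping subnet carved from the VPC CIDR."""
    return dict(zip(azs, vpc.subnets(new_prefix=new_prefix)))


def next_hop(routes, destination):
    """Pick the most specific matching route (longest prefix match)."""
    address = ipaddress.ip_address(destination)
    matching = [network for network in routes if address in network]
    best = max(matching, key=lambda network: network.prefixlen)
    return routes[best]


# First line printed: az-1 10.0.0.0/24 251 usable addresses
for az, subnet in plan_subnets(VPC_CIDR, AZS).items():
    usable = subnet.num_addresses - AWS_RESERVED_PER_SUBNET
    print(az, subnet, usable, "usable addresses")

print(next_hop(PUBLIC_ROUTES, "10.0.1.25"))     # local
print(next_hop(PUBLIC_ROUTES, "203.0.113.10"))  # igw
```

Traffic between the subnets matches the more specific `10.0.0.0/16` route and stays inside the VPC, while anything bound for the internet falls through to the `0.0.0.0/0` route and the Internet Gateway.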

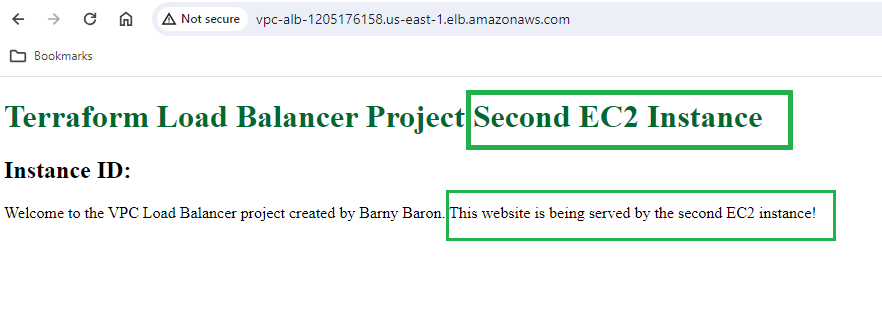

Each EC2 instance served a website front end built in HTML, with a custom identifier showing which instance had served the request.
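The article does not show how its pages were generated. A common approach is to write the file from an EC2 user data script at boot. The Python sketch below only illustrates the idea of a per-instance identifier. `render_page`, the label, and the AZ value are hypothetical names chosen for illustration.

```python
import html


def render_page(instance_label: str, availability_zone: str) -> str:
    """Build a tiny static page that states which instance served it.

    instance_label and availability_zone are hypothetical inputs; in a real
    build they might come from Terraform variables or instance metadata.
    """
    label = html.escape(instance_label)
    az = html.escape(availability_zone)
    return (
        "<!DOCTYPE html>\n"
        "<html>\n"
        f"  <head><title>{label}</title></head>\n"
        f"  <body><h1>Served by {label} in {az}</h1></body>\n"
        "</html>\n"
    )


print(render_page("web-server-1", "az-1"))
```

Escaping the inputs keeps the page valid even if a label contains characters such as `<` or `&`.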

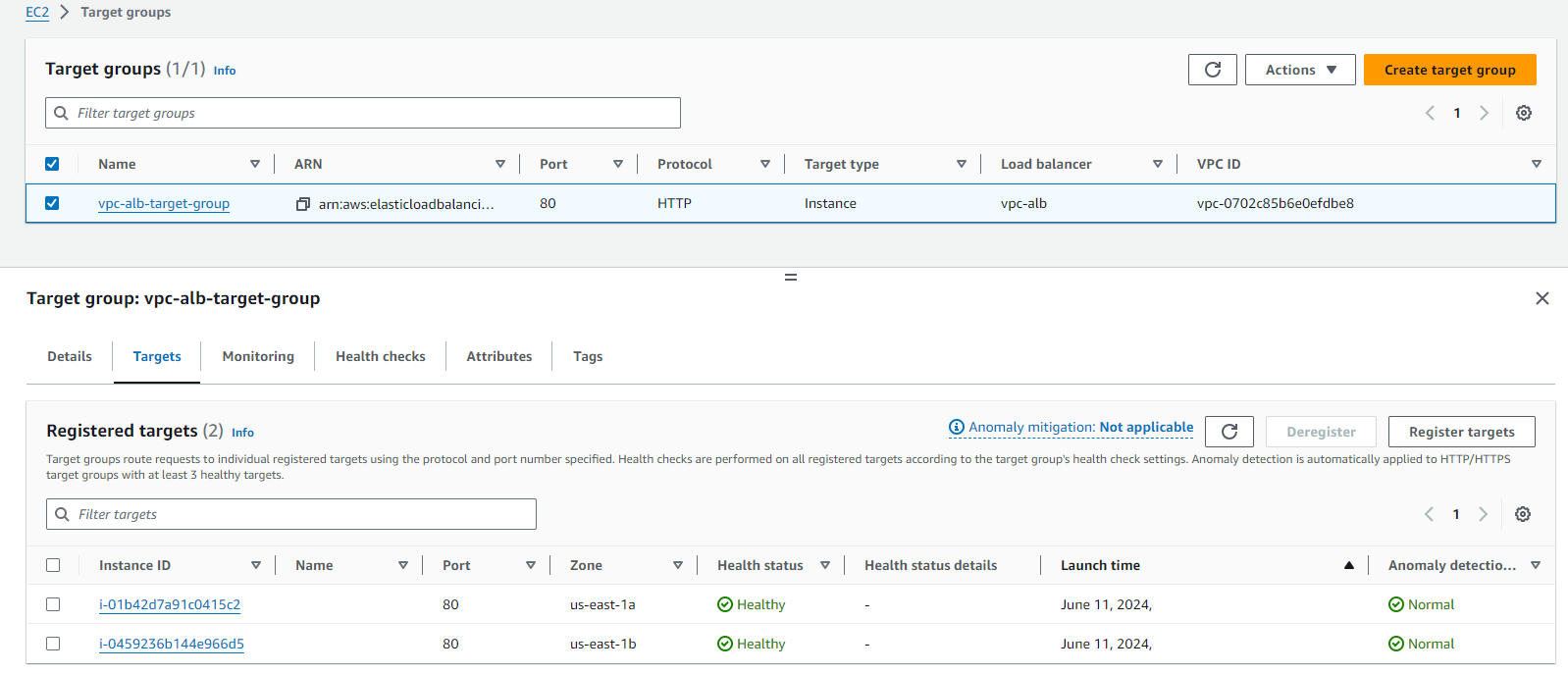

The ALB had a listener that checks for incoming connections on a specific port, and target groups that define how the ALB distributes that traffic to the registered EC2 targets.
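The relationship between a listener and a target group can be modelled in a few lines of Python. This is a simplified sketch, not the AWS API. The HTTP listener on port 80 and the names `web-tg`, `web-1`, and `web-2` are assumptions for illustration, and real listeners can also carry rules beyond a single default action.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TargetGroup:
    """Where forwarded traffic goes: registered targets and the port they serve on."""
    name: str
    port: int
    targets: list[str] = field(default_factory=list)


@dataclass
class Listener:
    """Checks for connections on one port and forwards them to a target group."""
    protocol: str
    port: int
    default_target_group: TargetGroup


def forward(listeners: list[Listener], incoming_port: int) -> Optional[TargetGroup]:
    """Return the target group for a connection on incoming_port.

    Returns None when no listener is configured on that port, i.e. the
    connection is not accepted.
    """
    for listener in listeners:
        if listener.port == incoming_port:
            return listener.default_target_group
    return None


# Hypothetical values: an HTTP listener on port 80 and one target group
# holding both instances.
web_tg = TargetGroup("web-tg", port=80, targets=["web-1", "web-2"])
http_listener = Listener("HTTP", 80, web_tg)

print(forward([http_listener], 80).targets)  # ['web-1', 'web-2']
print(forward([http_listener], 443))         # None
```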

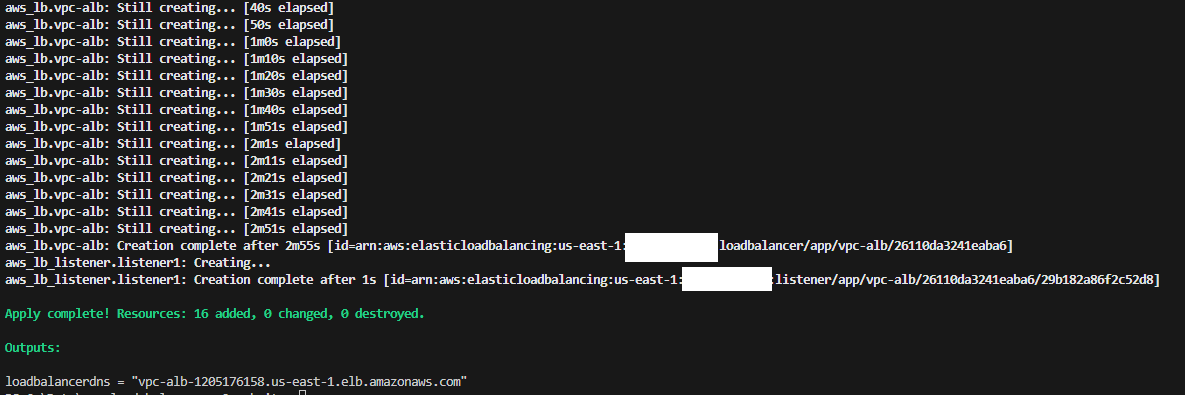

I used Terraform to deploy the entire environment and waited for it all to come online.

The Terraform output showed the ALB's DNS name, which I used to access the websites. Each time I refreshed the page, the ALB sent the request to the other instance, demonstrating an even distribution of web traffic across the two instances serving the simple static website.
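The alternating behaviour matches round robin, which is the default routing algorithm for ALB target groups. The Python sketch below is a simplified, single-node model of it. The instance names and the six refreshes are hypothetical.

```python
from collections import Counter
from itertools import cycle, islice


def round_robin(targets, n_requests):
    """Hand out n_requests to the targets in turn, starting again at the first.

    A simplified, single-node model of round-robin routing; a real ALB runs
    a node in each enabled AZ, so the sequence a browser sees may not
    alternate perfectly.
    """
    return list(islice(cycle(targets), n_requests))


# Six hypothetical page refreshes against the two instances.
served_by = round_robin(["web-1", "web-2"], 6)
print(served_by)                 # ['web-1', 'web-2', 'web-1', 'web-2', 'web-1', 'web-2']
print(dict(Counter(served_by)))  # {'web-1': 3, 'web-2': 3}
```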

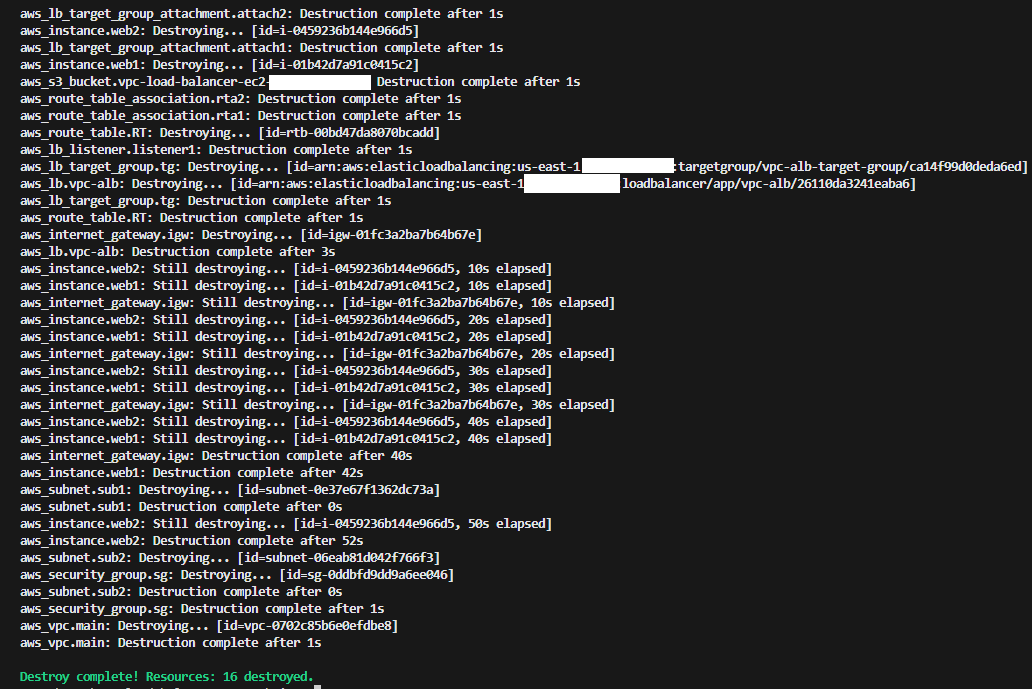

After documenting the steps and taking screenshots of everything, I used Terraform to tear down the environment!

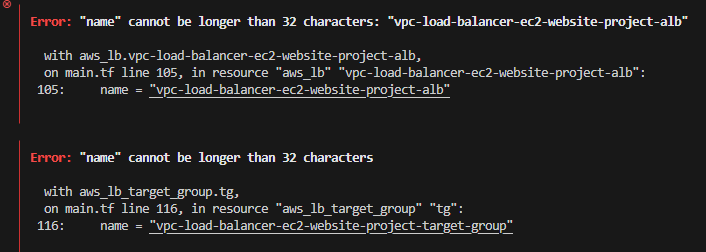

As is always the case when doing something new, I hit one small snag during the deployment:

Terraform error:

Quite clearly, I had given the ALB and the ALB Target Group ridiculously long names; both are limited to 32 characters. Once I returned to the source config and shortened the names, Terraform was happy and the environment was built without issue.
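A snag like this can be caught before running `terraform apply` with a small pre-check. The sketch below is a hypothetical helper, not part of Terraform or the AWS CLI. It encodes the naming rules AWS documents for load balancers and target groups: at most 32 characters, only letters, digits, and hyphens, and no leading or trailing hyphen. Load balancer names also must not begin with `internal-`.

```python
from __future__ import annotations

import re

# Documented constraints for ALB and target group names: at most 32 characters,
# only alphanumerics and hyphens, and no leading or trailing hyphen.
# Load balancer names additionally must not begin with "internal-".
MAX_NAME_LENGTH = 32
_ALLOWED = re.compile(r"[A-Za-z0-9-]+")


def name_problems(name: str, is_load_balancer: bool = True) -> list[str]:
    """Return the reasons a name would be rejected (an empty list if it looks valid)."""
    problems = []
    if len(name) > MAX_NAME_LENGTH:
        problems.append(f"too long: {len(name)} > {MAX_NAME_LENGTH} characters")
    if not _ALLOWED.fullmatch(name):
        problems.append("only letters, digits and hyphens are allowed")
    if name.startswith("-") or name.endswith("-"):
        problems.append("must not begin or end with a hyphen")
    if is_load_balancer and name.startswith("internal-"):
        problems.append('load balancer names must not begin with "internal-"')
    return problems


print(name_problems("my-really-long-application-load-balancer-name"))
# ['too long: 45 > 32 characters']
print(name_problems("web-alb"))  # []
```

Pass `is_load_balancer=False` when checking a target group name, since the `internal-` rule applies only to load balancers.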

Some of the highlights…

Terraform build:

Build complete:

VPC built:

Subnets:

Route Tables:

Security Groups:

S3 Bucket:

ALB:

ALB Resources:

ALB Target Groups:

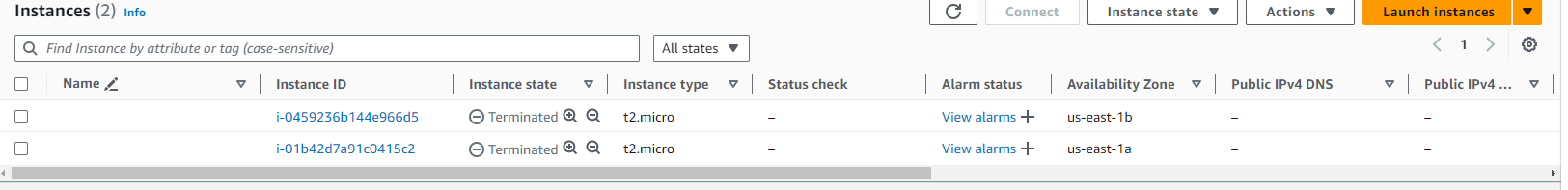

EC2 Instances:

EC2 serving website:

Terraform Cleanup:

Terminated EC2 Instances:

My interpretation of the architecture:

I hope you have enjoyed the article!