Building an EC2 instance, configured with Remote State Files in Terraform!

Journey: 📊 Community Builder 📊

Subject matter: Building on AWS

Task: Building an EC2 instance, configured with Remote State Files in Terraform!

This week, I configured remote state files in Terraform and built an EC2 instance using Infrastructure as Code!

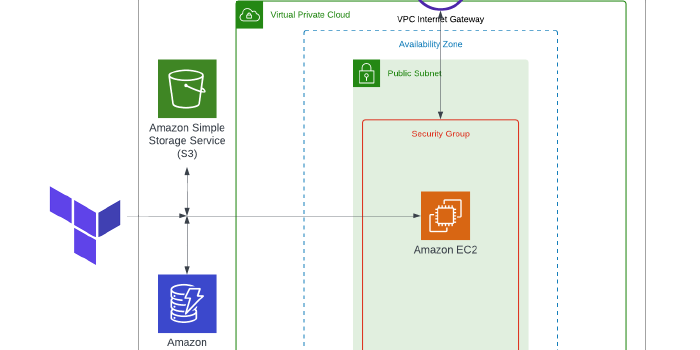

In this scenario, the Terraform state file is stored remotely in S3, and DynamoDB is utilised to lock the state during writes. This makes team collaboration possible: everyone works from the same shared state, and the lock ensures that only one contributor can write to it at a time.

The aim of this environment is to experiment with backend state locking, rather than to build a highly available architecture.

Resource credit: This architecture was created using guidance from Sahil Suri.

What did I use to build this environment?

- Visual Studio Code

- Terraform

- AWS CLI

- AWS Management Console

What is built?

- A single EC2 instance

- An S3 bucket

- A DynamoDB table

- A remote state lock configuration

What I used to help:

https://docs.aws.amazon.com/AmazonS3/latest/API/API_CreateBucketConfiguration.html

https://medium.com/@vishal.sharma./create-an-aws-s3-bucket-using-aws-cli-5a19bc1fda79

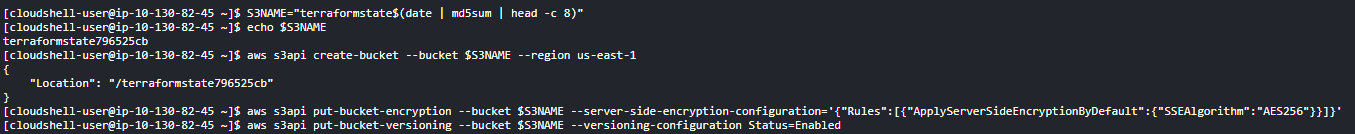

In this task, I used the AWS CLI to create an S3 bucket with server-side encryption and versioning enabled.

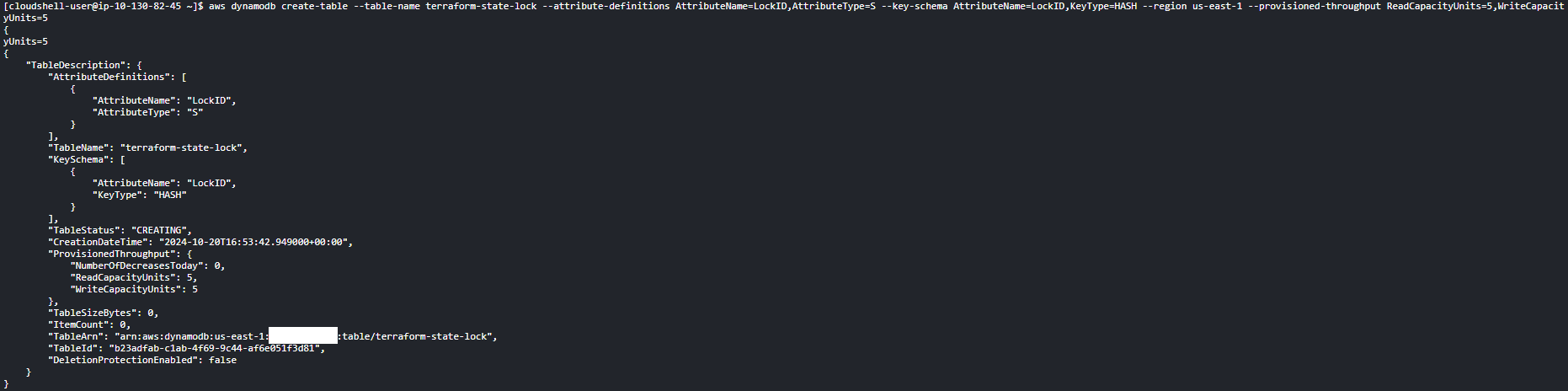

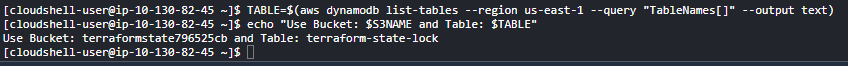
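I created the bucket with the AWS CLI. For comparison, here is a sketch of how an equivalent bucket might be declared in Terraform, assuming AWS provider v4 or later (where versioning and encryption are configured as separate resources). The bucket name is a hypothetical placeholder, and SSE-S3 (`AES256`) is an assumption, since the exact encryption setting isn't stated.

```hcl
# Hypothetical bucket name: S3 bucket names must be globally unique.
resource "aws_s3_bucket" "tf_state" {
  bucket = "example-terraform-state-bucket"
}

# Versioning keeps every previous version of the state file,
# so an earlier state can be recovered if a write goes wrong.
resource "aws_s3_bucket_versioning" "tf_state" {
  bucket = aws_s3_bucket.tf_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Default encryption at rest using S3-managed keys (SSE-S3, assumed).
resource "aws_s3_bucket_server_side_encryption_configuration" "tf_state" {
  bucket = aws_s3_bucket.tf_state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}
```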

I then created a DynamoDB table to control state locking for the state file that would be stored in S3.

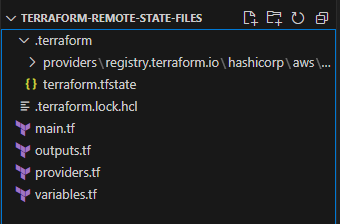
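Terraform's S3 backend expects the lock table's partition key to be a string attribute named `LockID`. A minimal Terraform sketch of an equivalent table follows; the table name is hypothetical and on-demand billing is an assumption.

```hcl
# Lock table for the S3 backend: Terraform writes a lock item keyed on LockID
# while it is modifying the state, and removes it when it is done.
resource "aws_dynamodb_table" "tf_lock" {
  name         = "example-terraform-lock-table" # hypothetical name
  billing_mode = "PAY_PER_REQUEST"              # on-demand, so no capacity to size (assumed)
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S" # must be a string
  }
}
```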

After these two resources were created, I moved to VS Code and created my Terraform file structure. I made some changes here, as I wanted to keep the AWS provider in its own Providers.tf file.

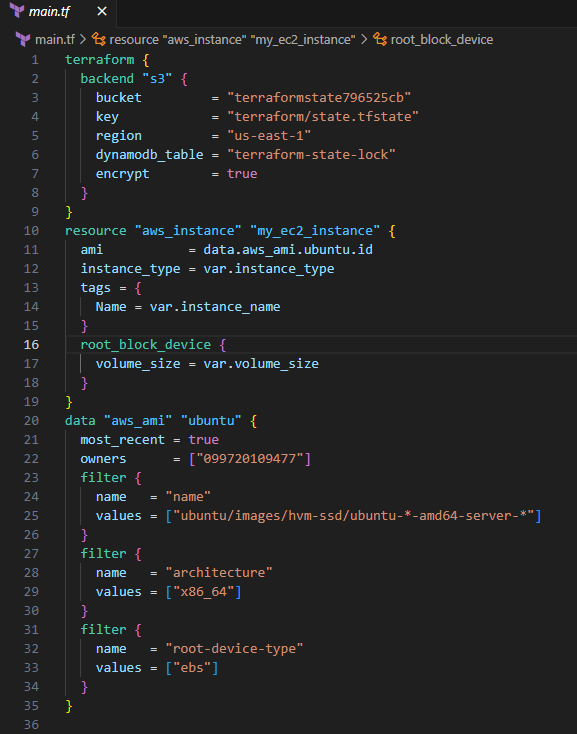
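A minimal sketch of what a standalone Providers.tf like this could contain. The provider version constraint and the region are assumptions, not values taken from this project.

```hcl
# Pin the AWS provider so every contributor plans with the same major version.
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumed constraint
    }
  }
}

# Region for the resources this configuration manages (assumed).
provider "aws" {
  region = "eu-west-2"
}
```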

I configured my backend to point to my new S3 bucket and DynamoDB table before initialising Terraform (`terraform init`) and creating a plan (`terraform plan`).

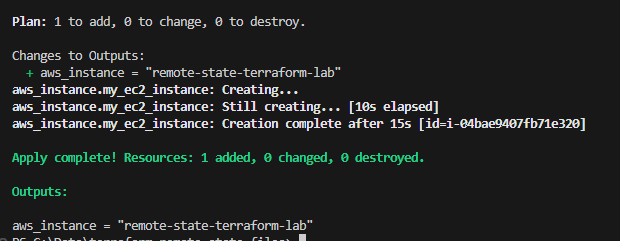
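The backend block is what connects the state bucket and the lock table. A sketch, assuming hypothetical bucket and table names, an assumed region and an assumed object key for the state file:

```hcl
terraform {
  backend "s3" {
    bucket         = "example-terraform-state-bucket" # hypothetical state bucket
    key            = "ec2/terraform.tfstate"          # assumed path of the state object
    region         = "eu-west-2"                      # assumed bucket region
    dynamodb_table = "example-terraform-lock-table"   # hypothetical lock table
    encrypt        = true                             # store the state object encrypted
  }
}
```

Backend blocks can't reference variables, so the values are written as literals. After adding or changing this block, `terraform init` has to be run again so the working directory starts using the S3 backend.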

Once I had confirmed everything looked good, I ran `terraform apply` and waited for my mini environment to come online. During the apply, Terraform wrote the state file to S3 and held a lock in DynamoDB while it was writing.

After I had finished, I used Terraform to destroy the instance and then emptied and deleted my S3 bucket and deleted my DynamoDB table.

To take this project further towards best practice, I would:

- Build my S3 bucket and DynamoDB table via Terraform rather than manually on the CLI.

Some of the highlights…

S3 creation:

Database table creation:

Outputs:

Terraform structure:

Main.tf:

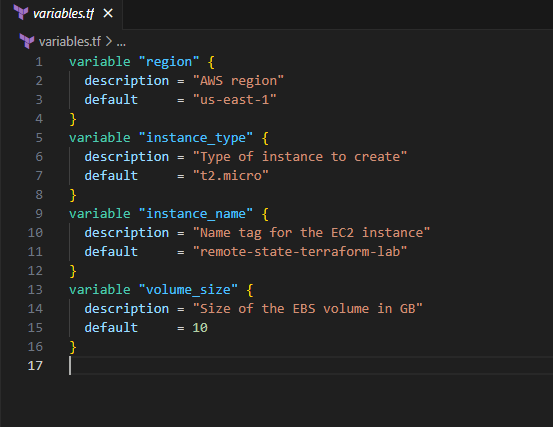
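A hypothetical Main.tf for a single instance with a Name tag and an explicitly sized root volume might look like this. The resource name, variable names, volume size and volume type are all assumptions; the AMI in particular must be valid for the chosen region.

```hcl
# A single EC2 instance. Assumes variables named ami_id, instance_type
# and instance_name are declared elsewhere in the configuration (hypothetical names).
resource "aws_instance" "web" {
  ami           = var.ami_id
  instance_type = var.instance_type

  # Root EBS volume (assumed size in GiB and volume type).
  root_block_device {
    volume_size = 8
    volume_type = "gp3"
  }

  tags = {
    Name = var.instance_name
  }
}
```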

Variables.tf:
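A hypothetical Variables.tf declaring the inputs a single instance like this needs. The names and defaults are placeholders rather than this project's values.

```hcl
variable "ami_id" {
  description = "AMI to launch the instance from"
  type        = string
  # No default: AMI IDs are region-specific, so supply one at plan/apply time.
}

variable "instance_type" {
  description = "EC2 instance type"
  type        = string
  default     = "t2.micro" # assumed default
}

variable "instance_name" {
  description = "Value for the instance's Name tag"
  type        = string
  default     = "remote-state-demo" # hypothetical tag value
}
```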

Terraform creation:

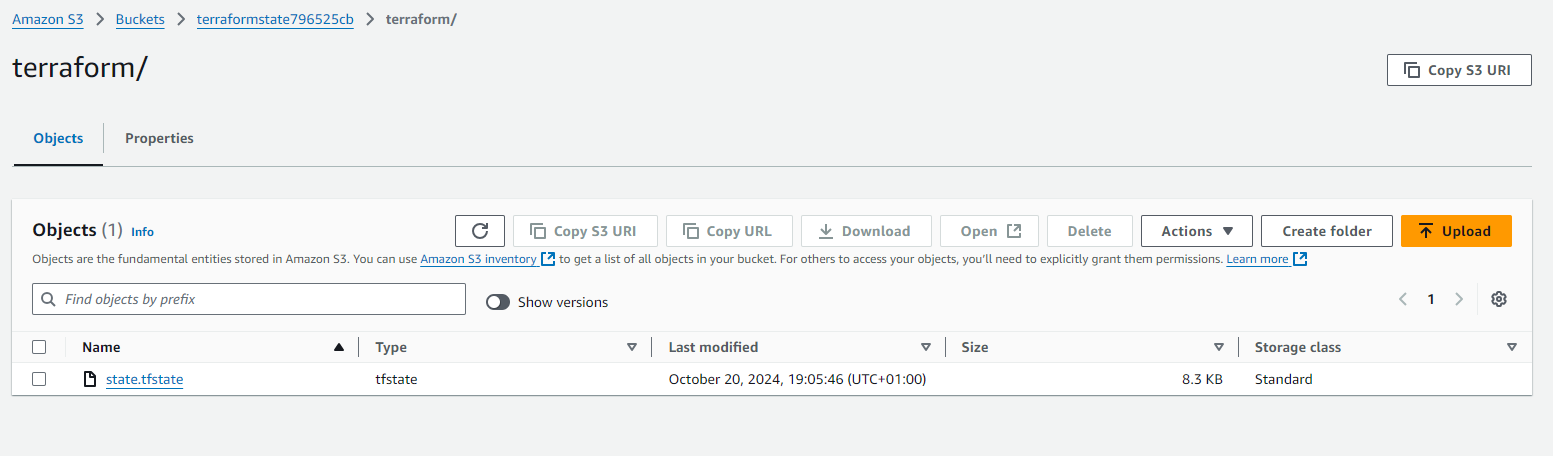

S3 state file:

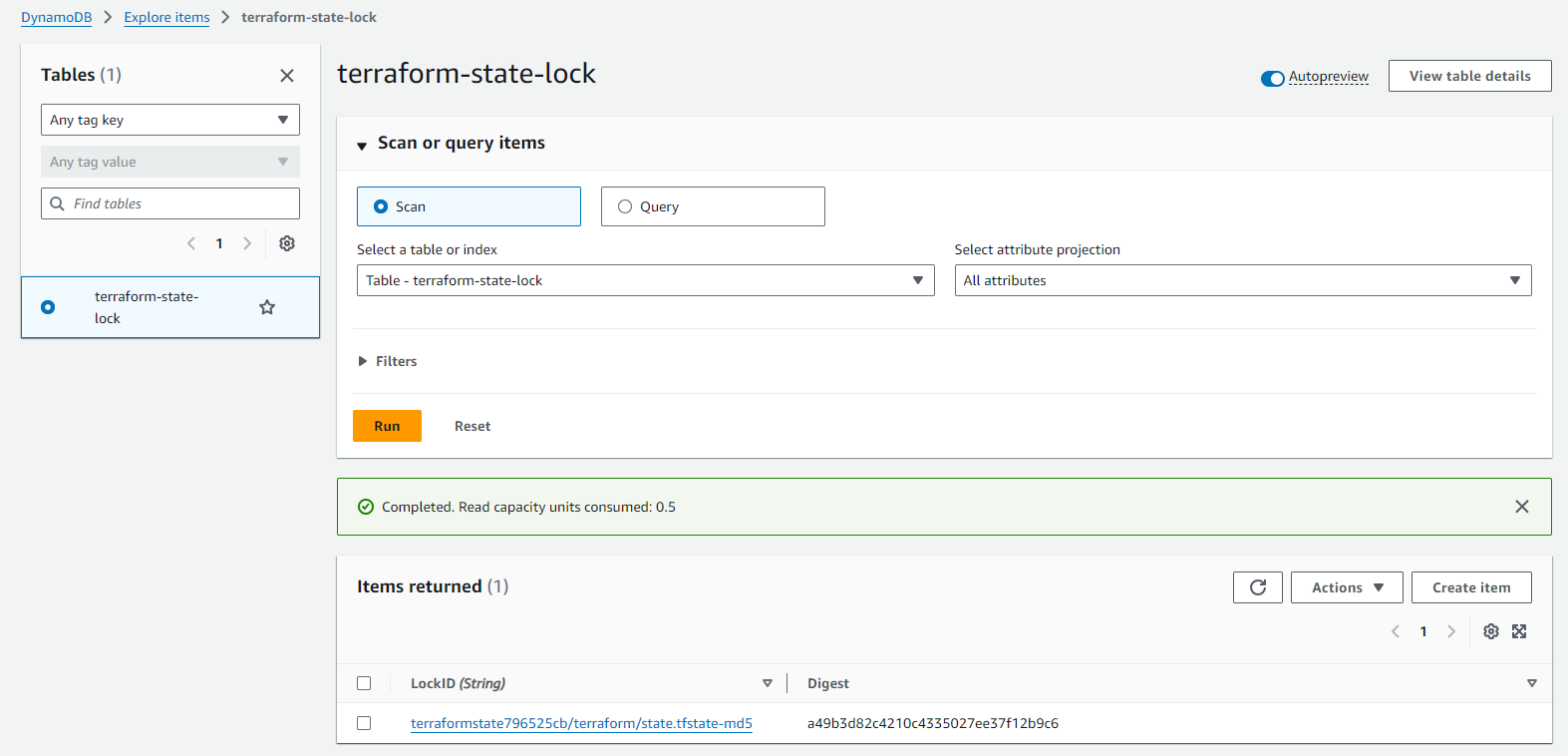

Database state lock table:

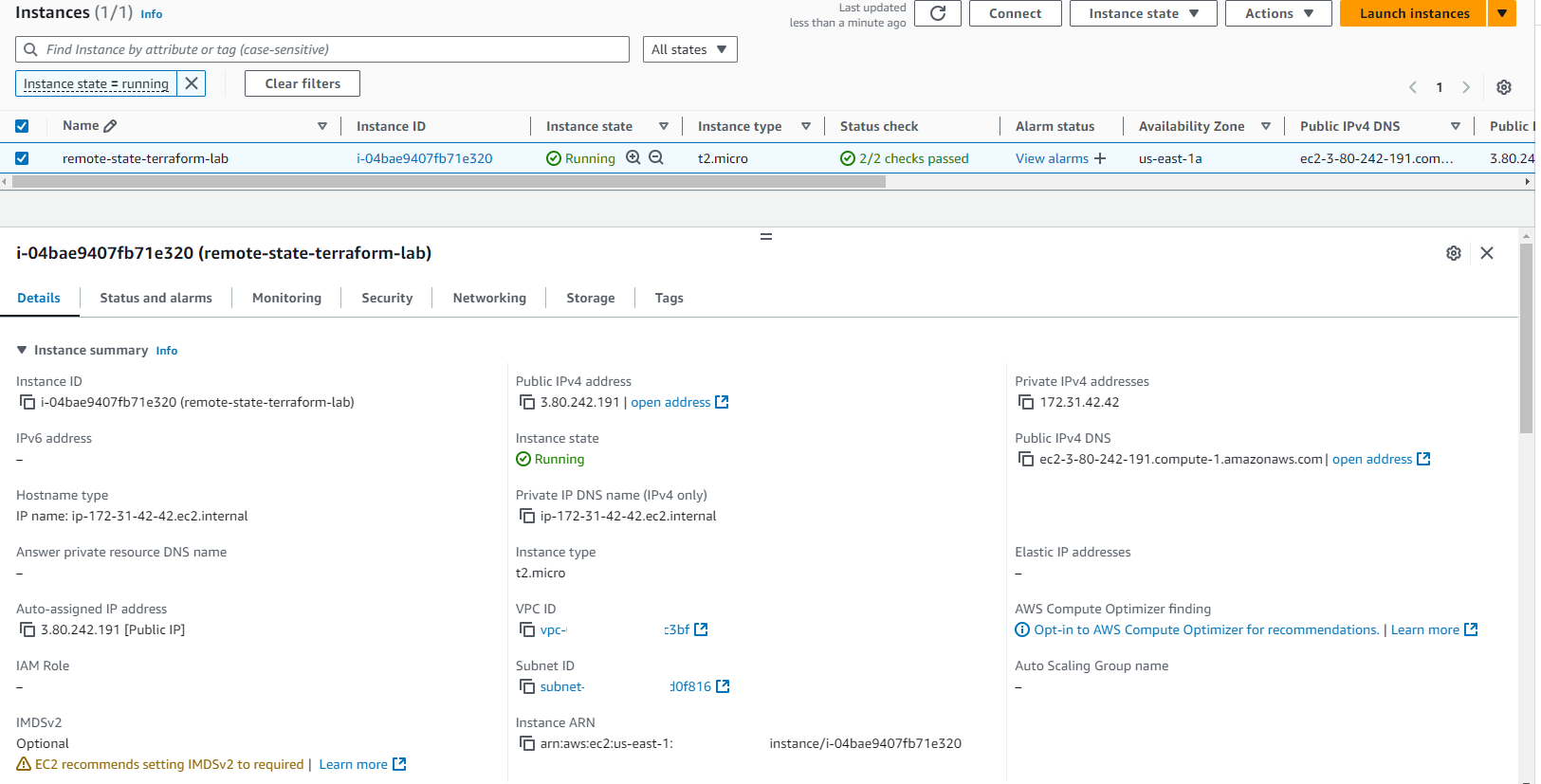

EC2 instance:

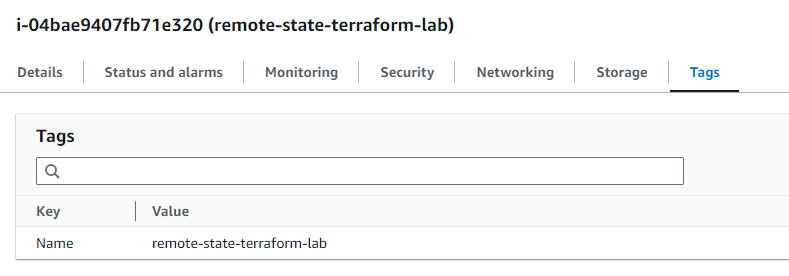

EC2 tags:

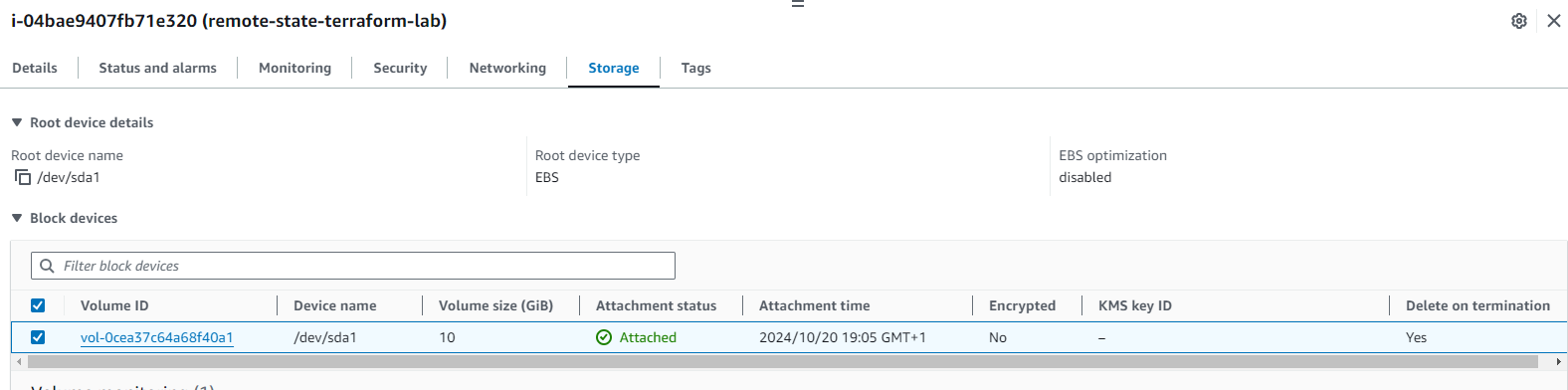

EC2 volume:

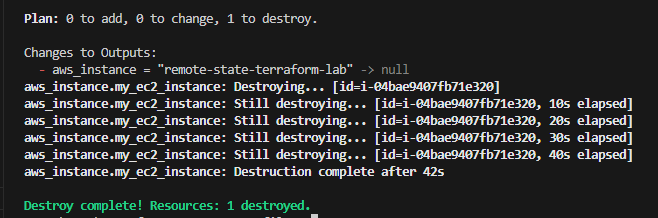

Terraform destroy:

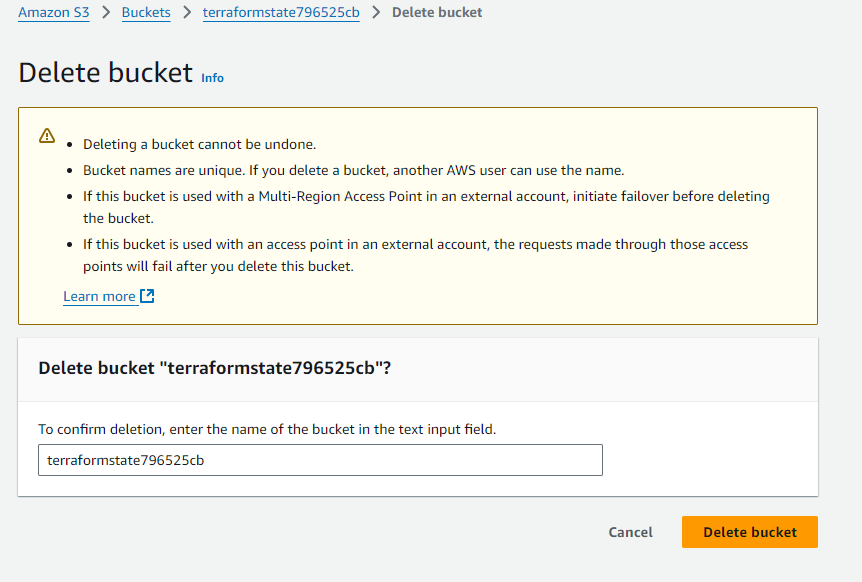

S3 empty and delete:

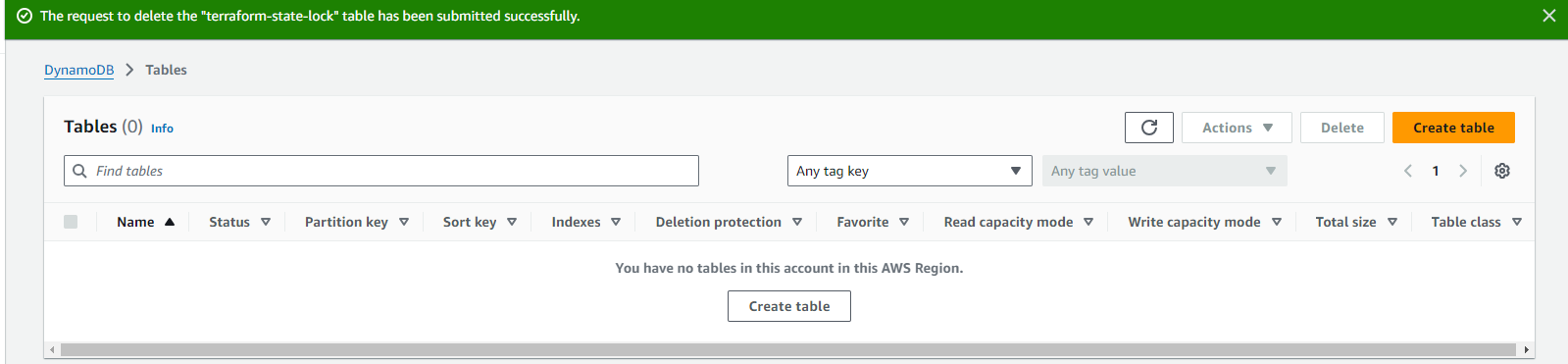

Database table deletion:

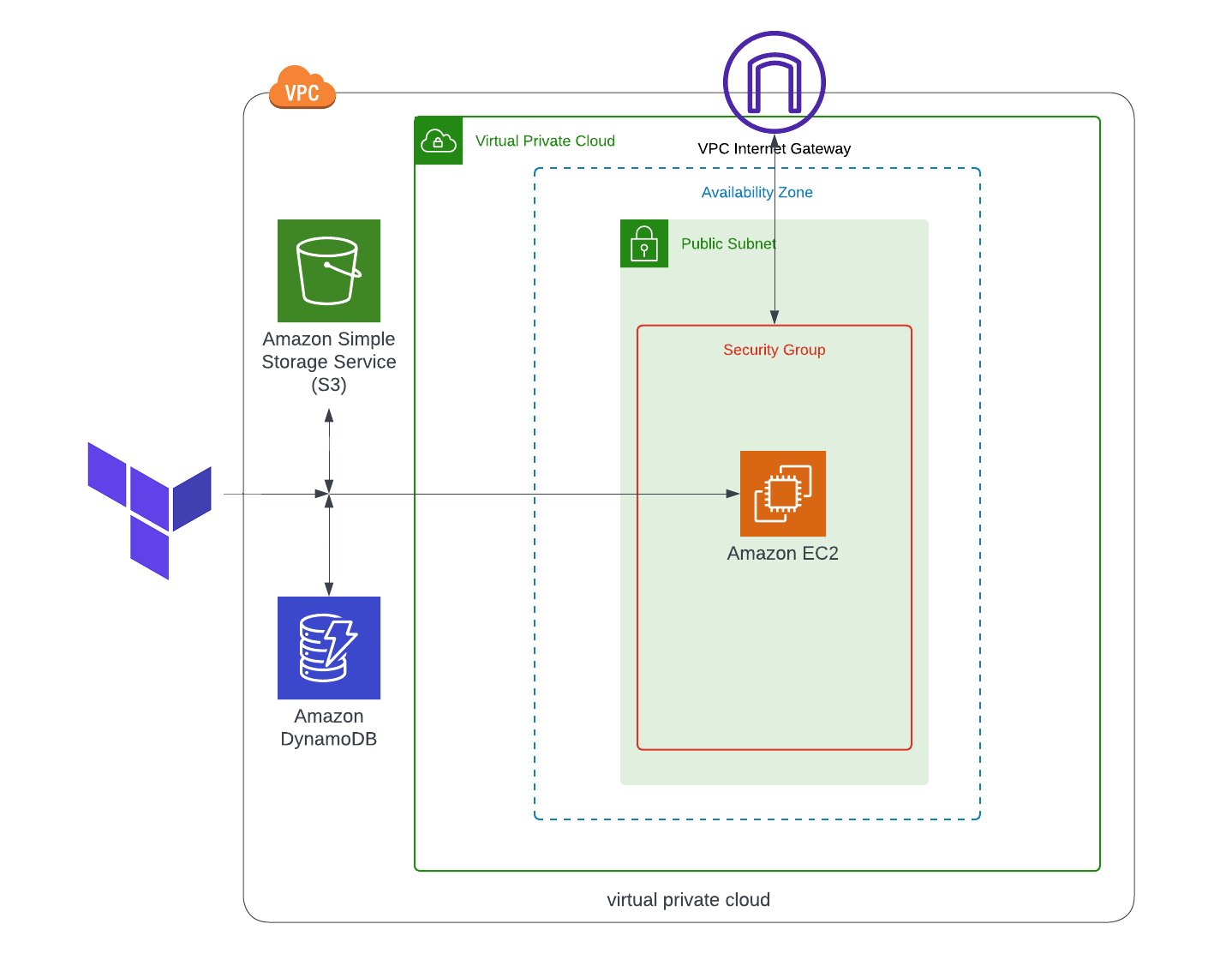

My interpretation of the architecture:

I hope you have enjoyed the article!