Building an automated data transfer architecture using S3 and Lambda!

Journey: 📊 Community Builder 📊

Subject matter: Building on AWS

Task: Building an automated data transfer architecture using S3 and Lambda!

Of the six pillars of the AWS Well-Architected Framework, this build addresses four: Security, Performance Efficiency, Reliability, and Sustainability.

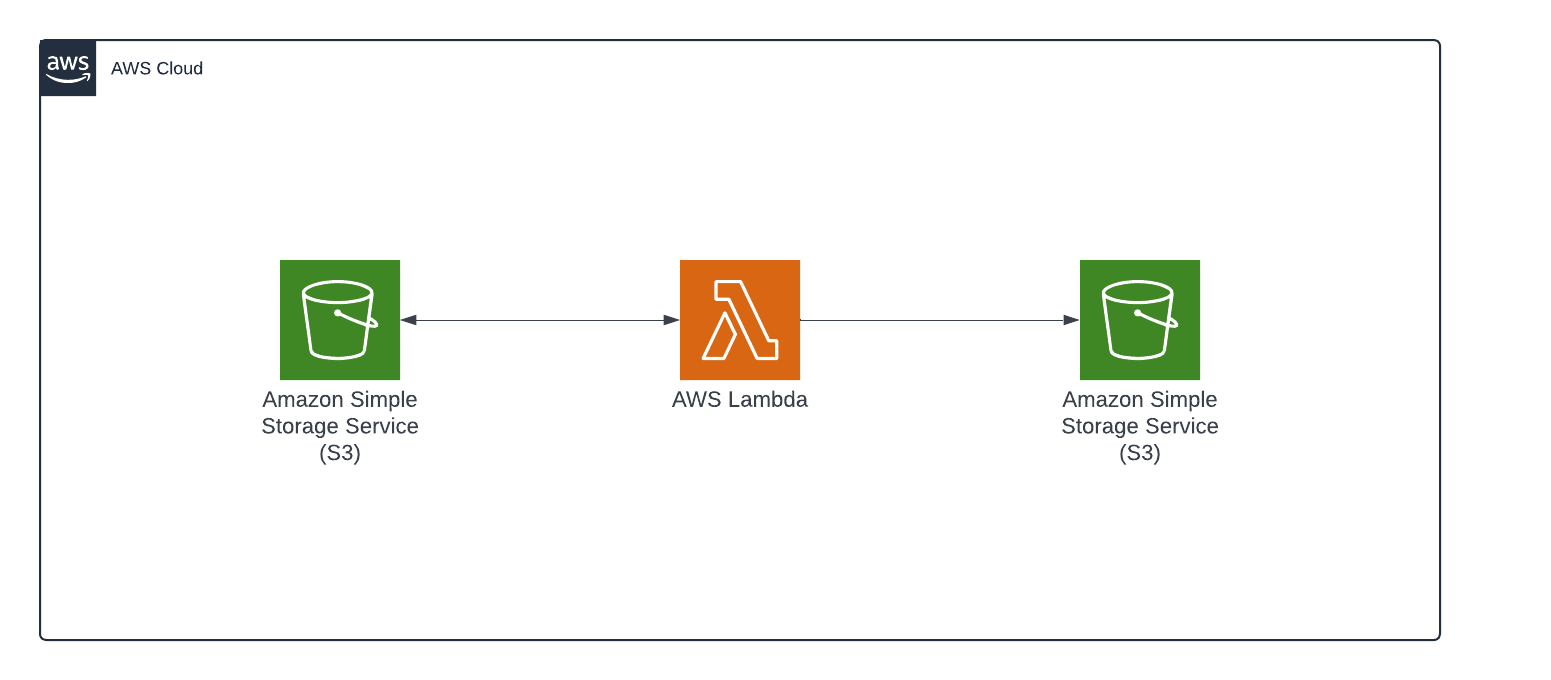

This week, I built an automated data transfer architecture using S3 and Lambda.

The idea for this project was that while files can be initially uploaded to one location, you may not want to keep them there. For example, a data vendor uploads files to their customer’s S3 bucket, but the customer cannot utilise the data in that location, so they move it to where their pipeline sits.

In this scenario, we will be uploading a file to one S3 bucket and then triggering Lambda to transfer the file to a new S3 bucket before deleting the original file in the source location.

Resource credit: This architecture was created using guidance from Wojciech Lepczyński.

What did I use to build this environment?

- AWS Management Console

- IAM

What is built?

- IAM Policy

- IAM Role

- Two Amazon S3 buckets

- An AWS Lambda Function

I also used the following pages while planning this environment:

https://docs.aws.amazon.com/lambda/latest/dg/with-s3-example.html

https://interworks.com/blog/2023/02/28/moving-objects-between-s3-buckets-via-aws-lambda/

https://cto.ai/blog/copy-aws-s3-files-between-buckets/

In this task, I built a short event-driven pipeline.

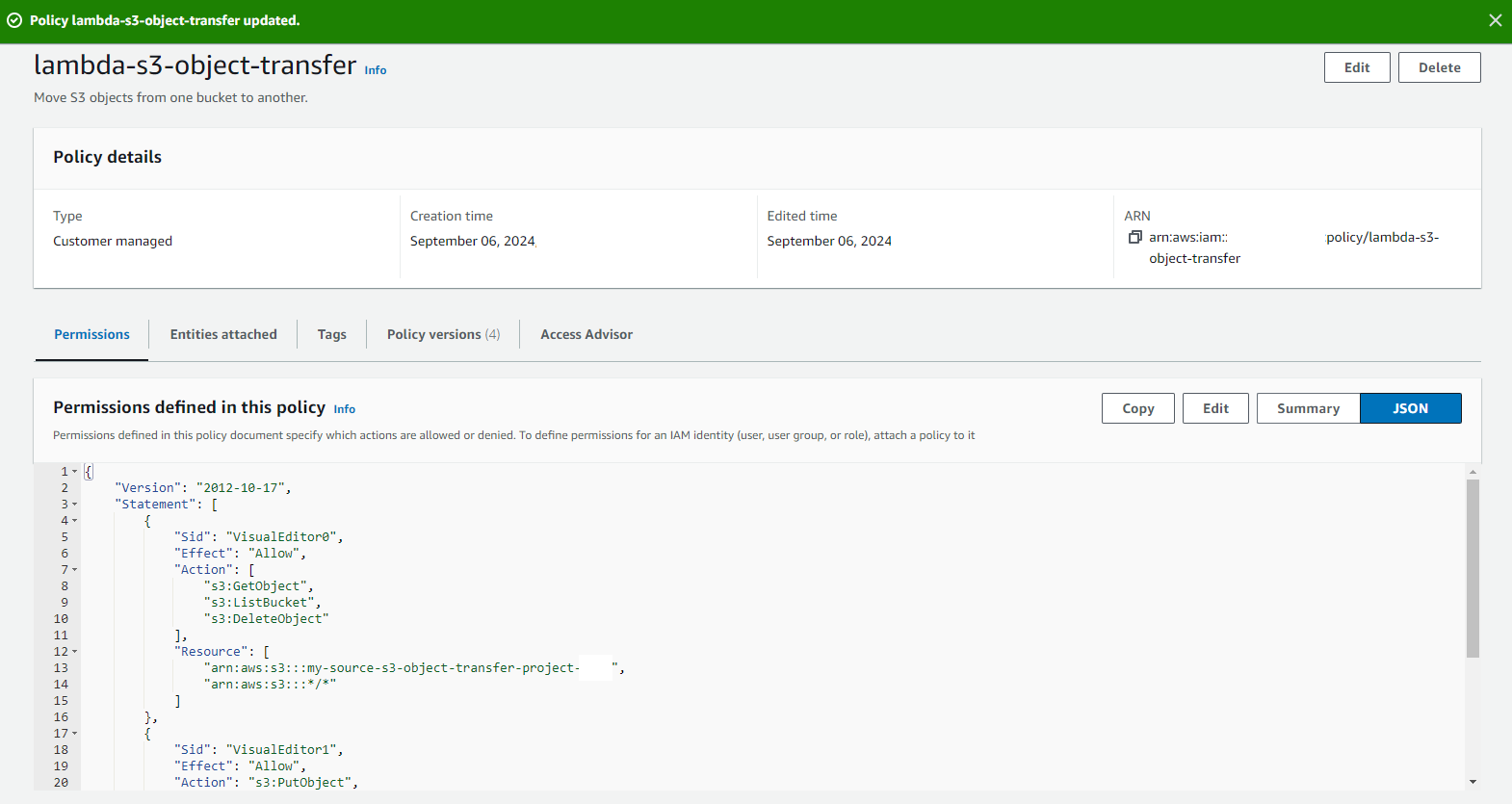

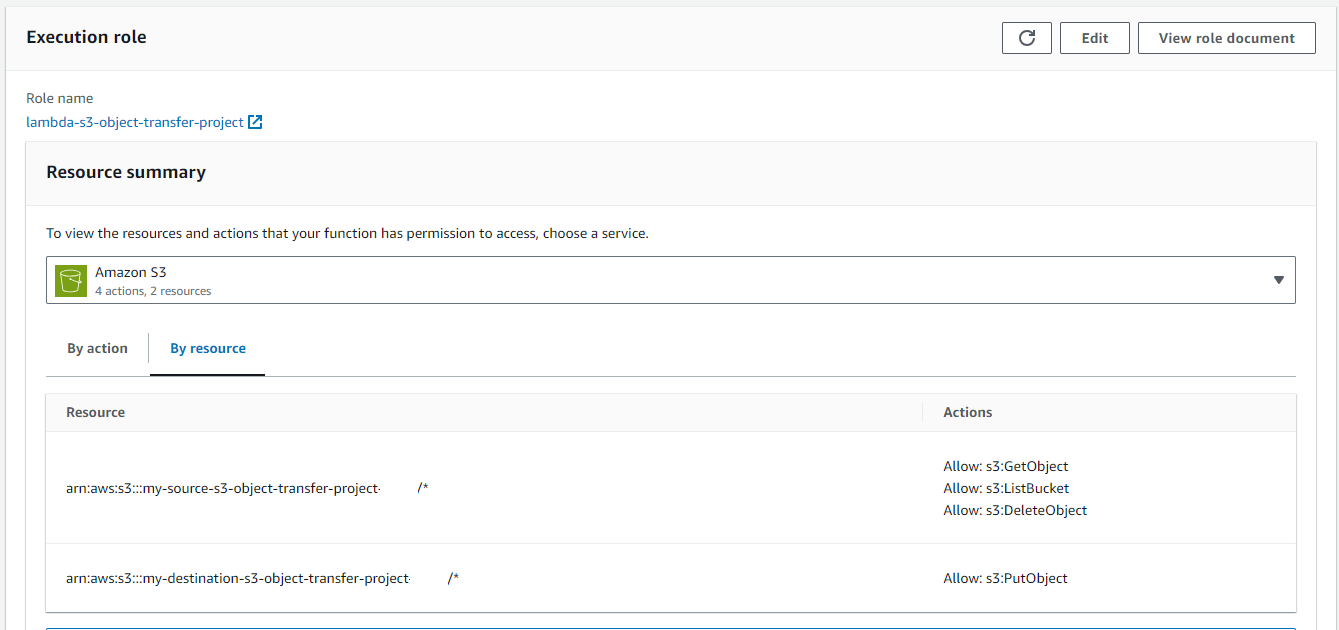

Following the principle of least privilege, I created a new IAM policy that permits my Lambda function to perform List, Get, and Delete actions on my source S3 bucket and a Put action on my destination S3 bucket.
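To make the shape of such a policy concrete, here is a minimal sketch that builds an equivalent policy document in Python. The bucket names `my-source-bucket` and `my-destination-bucket` are hypothetical placeholders, and the exact actions are an assumption based on the List, Get, Delete, and Put description above; the real policy is only shown in the screenshots.

```python
import json

# Hypothetical bucket names (assumptions); replace with your own.
SOURCE_BUCKET = "my-source-bucket"
DESTINATION_BUCKET = "my-destination-bucket"


def build_transfer_policy(source_bucket: str, destination_bucket: str) -> dict:
    """Return a least-privilege style IAM policy document for the transfer."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket applies to the bucket itself, not to its objects.
                "Sid": "ListSourceBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{source_bucket}",
            },
            {
                # Reading and deleting apply to objects inside the bucket.
                "Sid": "ReadAndDeleteSourceObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{source_bucket}/*",
            },
            {
                # Writing is only allowed in the destination bucket.
                "Sid": "WriteDestinationObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{destination_bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    policy = build_transfer_policy(SOURCE_BUCKET, DESTINATION_BUCKET)
    print(json.dumps(policy, indent=2))
```

Splitting the statements this way keeps delete rights scoped to the source bucket and write rights scoped to the destination bucket.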

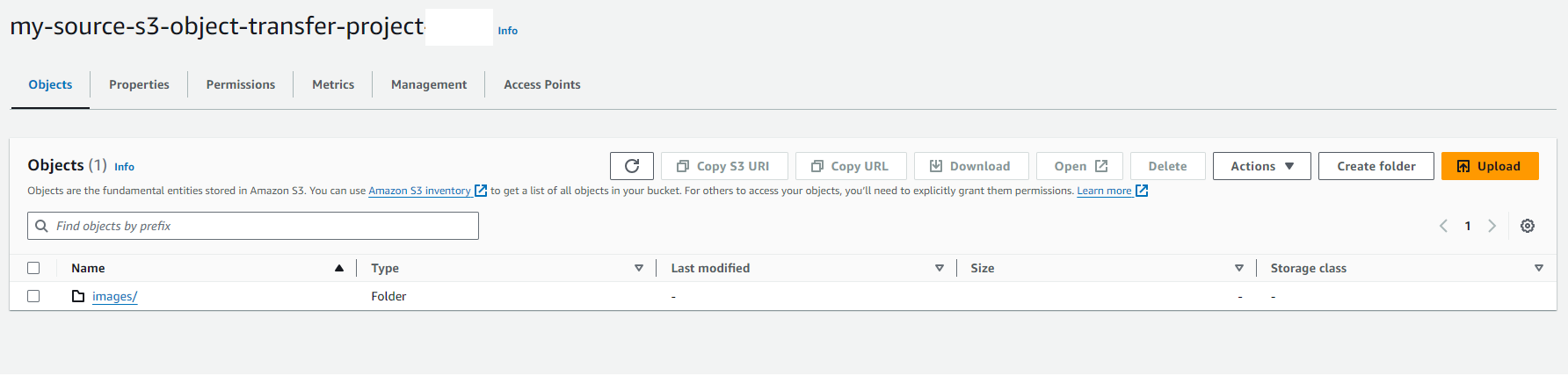

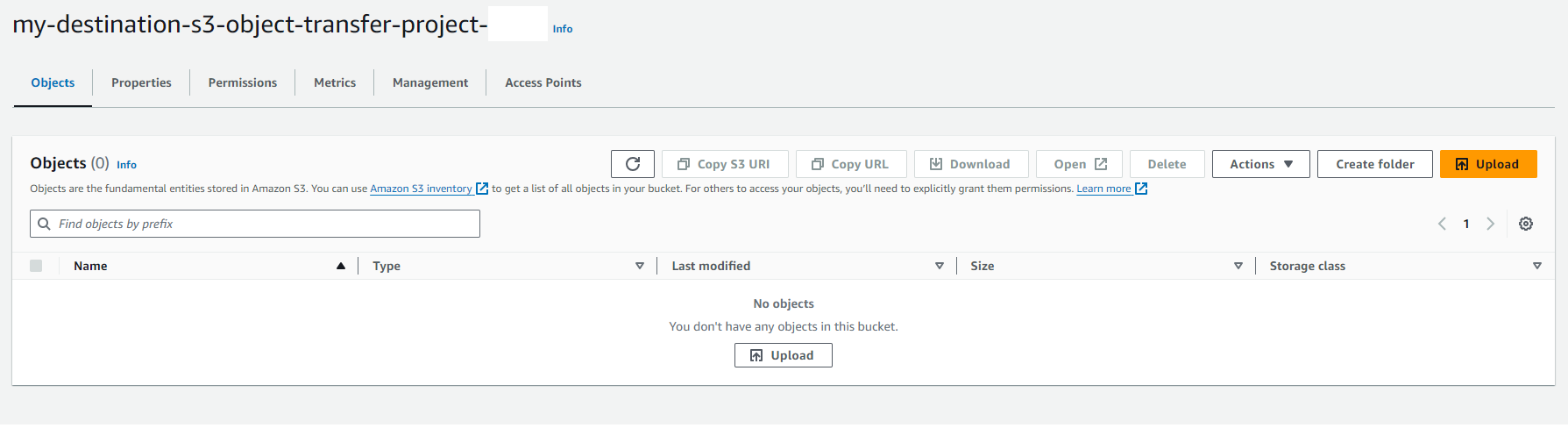

I then created two S3 buckets, one acting as the source and the other as the destination. In the source bucket, I created an ‘images’ folder.

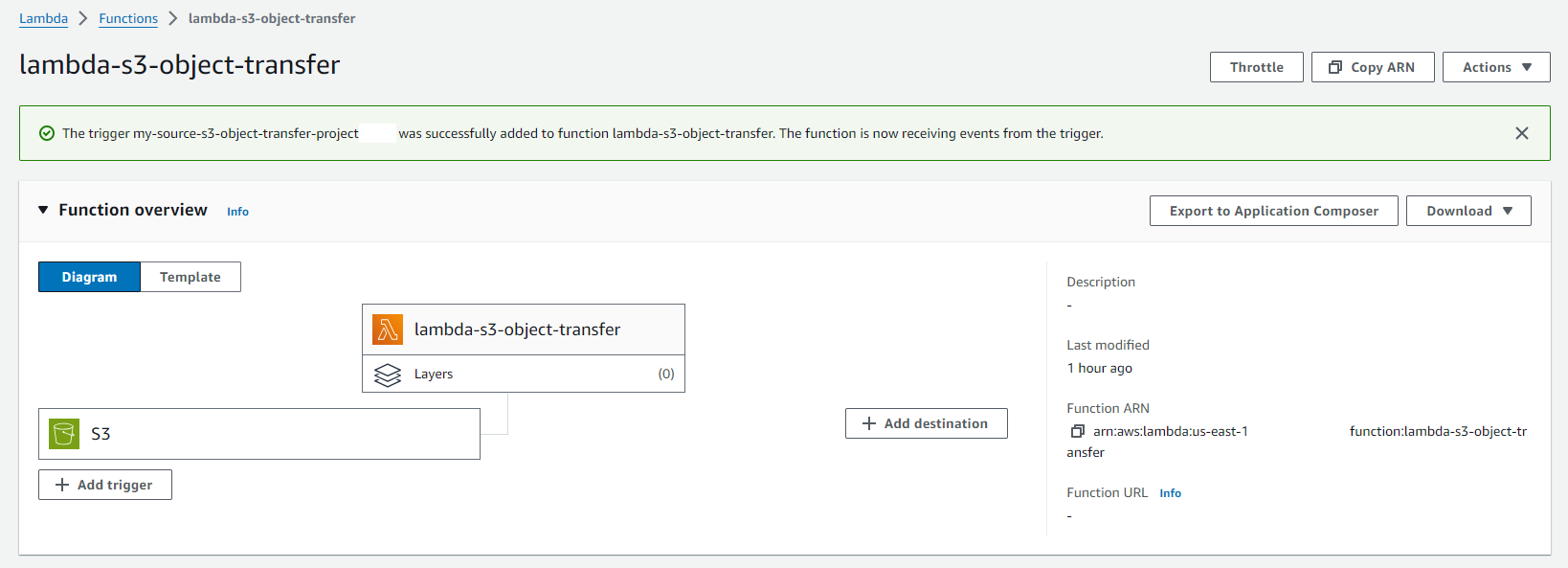

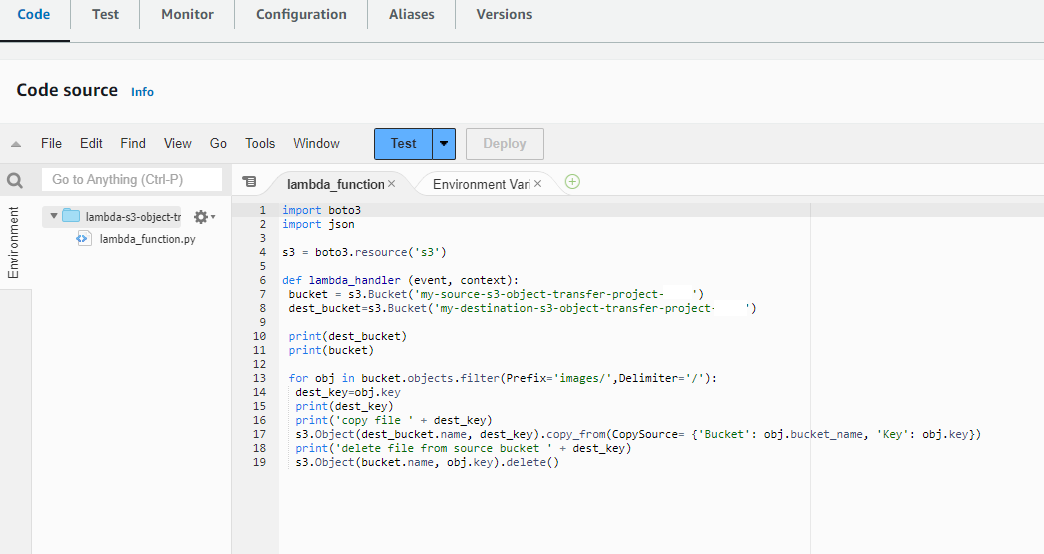

Once this was in place, I created a new function in Lambda, attached the new role, added the required code, and deployed it.
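The function code itself only appears in the screenshots, so the following is a minimal sketch of what a copy-then-delete handler could look like in Python, not the exact code used here. The destination bucket name and the optional `s3_client` parameter (added so the logic can be exercised without AWS access) are assumptions; `copy_object` and `delete_object` are standard boto3 S3 client calls.

```python
from urllib.parse import unquote_plus

# Hypothetical destination bucket name (assumption); replace with your own.
DESTINATION_BUCKET = "my-destination-bucket"


def move_object(s3_client, source_bucket, key, destination_bucket):
    """Copy one object to the destination bucket, then delete the original."""
    s3_client.copy_object(
        Bucket=destination_bucket,
        Key=key,
        CopySource={"Bucket": source_bucket, "Key": key},
    )
    # The delete runs only if the copy call returned without raising.
    s3_client.delete_object(Bucket=source_bucket, Key=key)


def lambda_handler(event, context, s3_client=None):
    """Move every object named in the incoming S3 event notification."""
    if s3_client is None:
        import boto3  # provided by the Lambda Python runtime

        s3_client = boto3.client("s3")

    moved = []
    for record in event.get("Records", []):
        source_bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        move_object(s3_client, source_bucket, key, DESTINATION_BUCKET)
        moved.append(key)
    return {"moved": moved}
```

Deleting only after the copy has returned means a failed copy leaves the original file in place, which is the safer failure mode for a transfer like this.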

At this point, I was able to test my function to see if it performed the expected action when triggered manually. I uploaded an image of a cup of coffee [Coffee.jpg] to my source S3 bucket and then performed a test.
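A manual test in the Lambda console is driven by a JSON test event. As a rough sketch, the helper below builds a trimmed-down S3 Put event containing only the fields a transfer function would typically read. The bucket name and the `images/Coffee.jpg` key are assumptions for illustration; a real S3 event carries many more fields.

```python
import json


def build_s3_put_test_event(bucket: str, key: str) -> dict:
    """Return a minimal S3 'ObjectCreated:Put' style event for manual testing."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket},
                    "object": {"key": key},
                },
            }
        ]
    }


if __name__ == "__main__":
    # Hypothetical bucket name and key (assumptions); replace with your own.
    event = build_s3_put_test_event("my-source-bucket", "images/Coffee.jpg")
    print(json.dumps(event, indent=2))
```

The printed JSON can be pasted into a console test event, which lets you check the function before any trigger exists.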

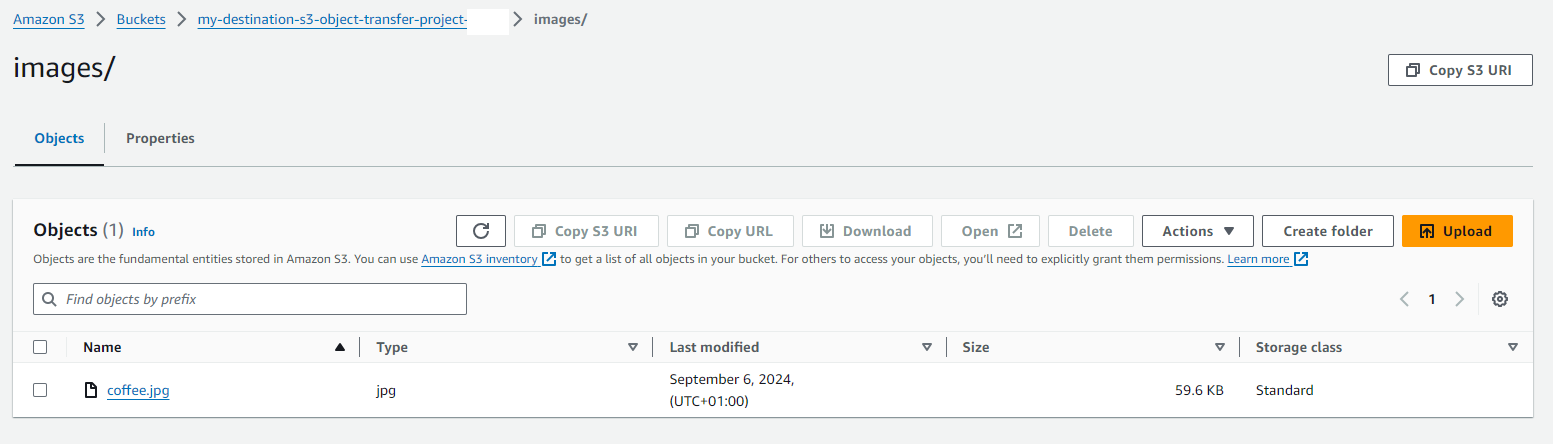

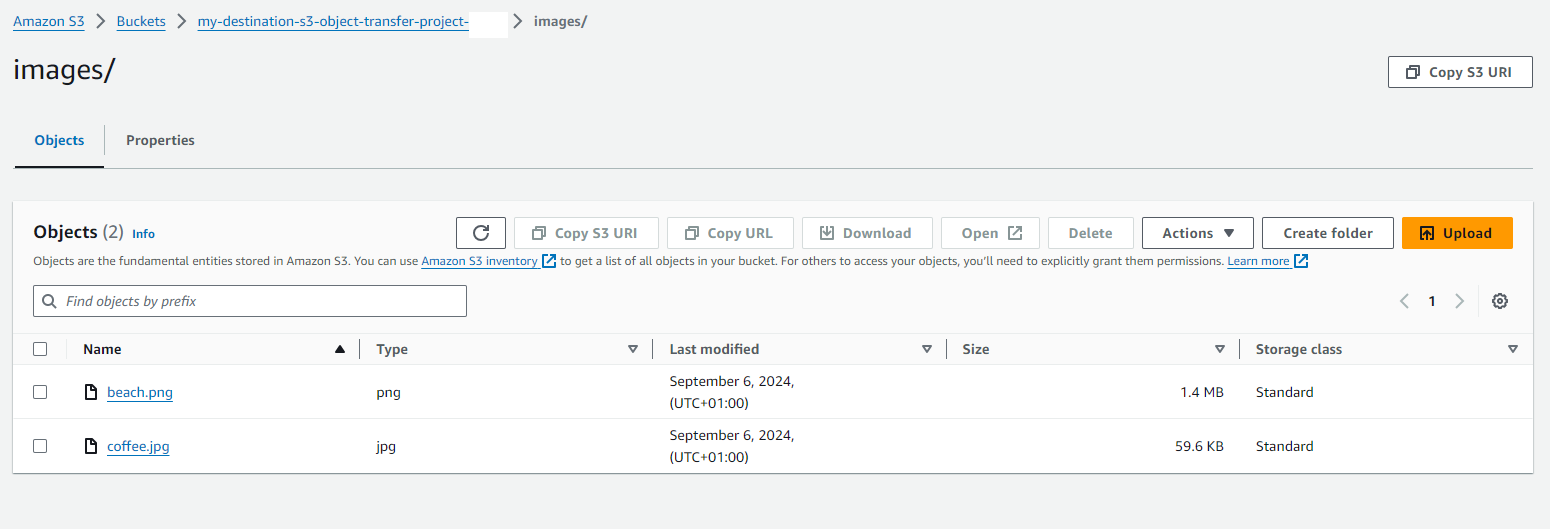

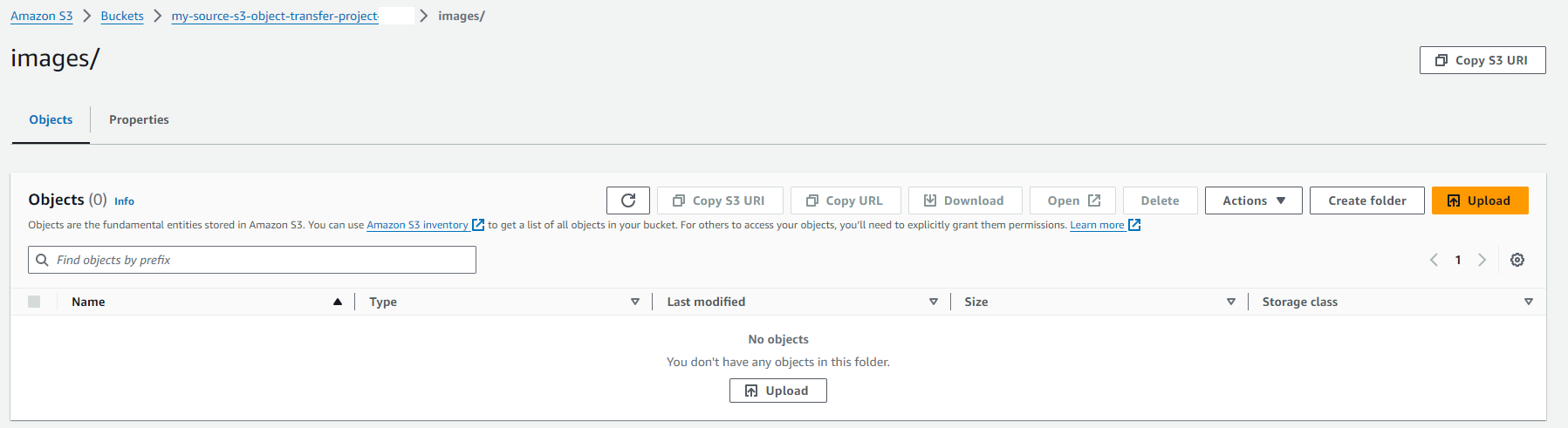

This succeeded! Coffee.jpg was copied from the source bucket to the destination bucket and then deleted from the source!

Now it was time to add the automation steps.

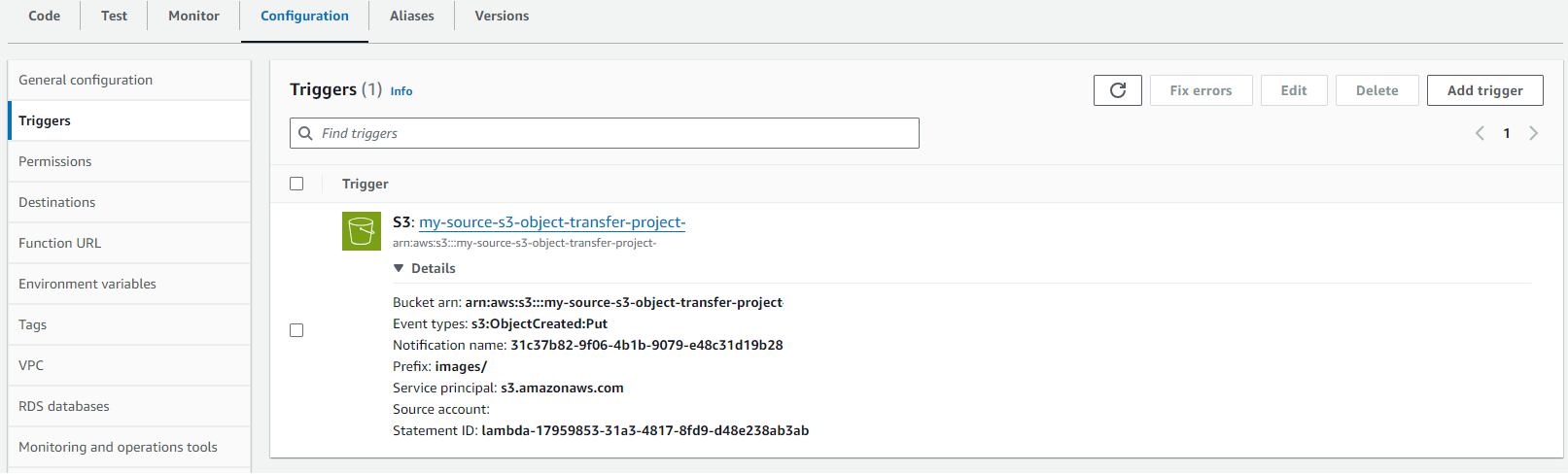

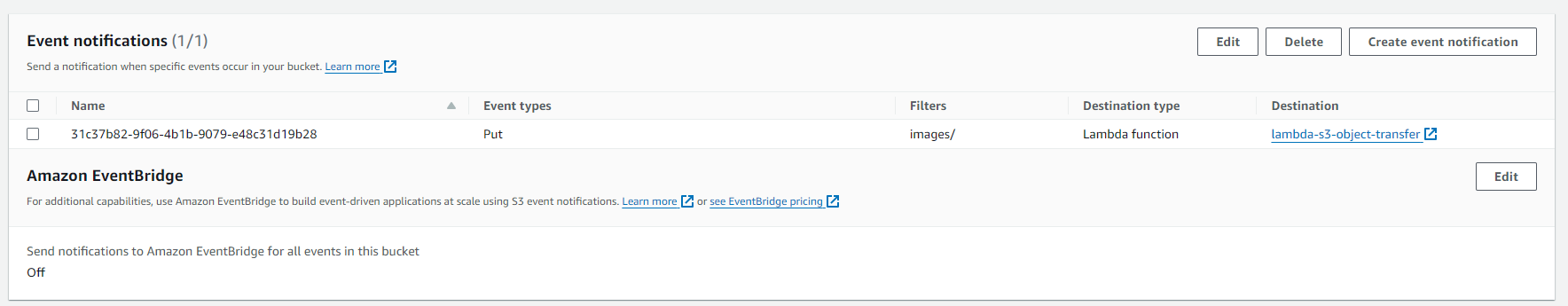

I introduced a trigger to Lambda, which was configured to fire against PUT requests in the ‘images’ folder of my source S3 bucket.
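I configured the trigger in the console, but the same settings can be written down as an S3 notification configuration. The sketch below builds that structure in Python, assuming a hypothetical function ARN and the `images/` prefix; it only constructs the dictionary and does not call AWS.

```python
import json


def build_notification_config(lambda_arn: str, prefix: str = "images/") -> dict:
    """Return an S3 notification configuration that fires on PUT under a prefix."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                # Only PUT uploads trigger the function, matching the setup above.
                "Events": ["s3:ObjectCreated:Put"],
                "Filter": {
                    "Key": {
                        "FilterRules": [{"Name": "prefix", "Value": prefix}]
                    }
                },
            }
        ]
    }


if __name__ == "__main__":
    # Hypothetical ARN (assumption); replace with your function's ARN.
    arn = "arn:aws:lambda:eu-west-1:123456789012:function:transfer-function"
    print(json.dumps(build_notification_config(arn), indent=2))
```

A dictionary of this shape is what boto3's `put_bucket_notification_configuration` expects as its `NotificationConfiguration` argument. When the trigger is added outside the console, S3 also needs permission to invoke the function.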

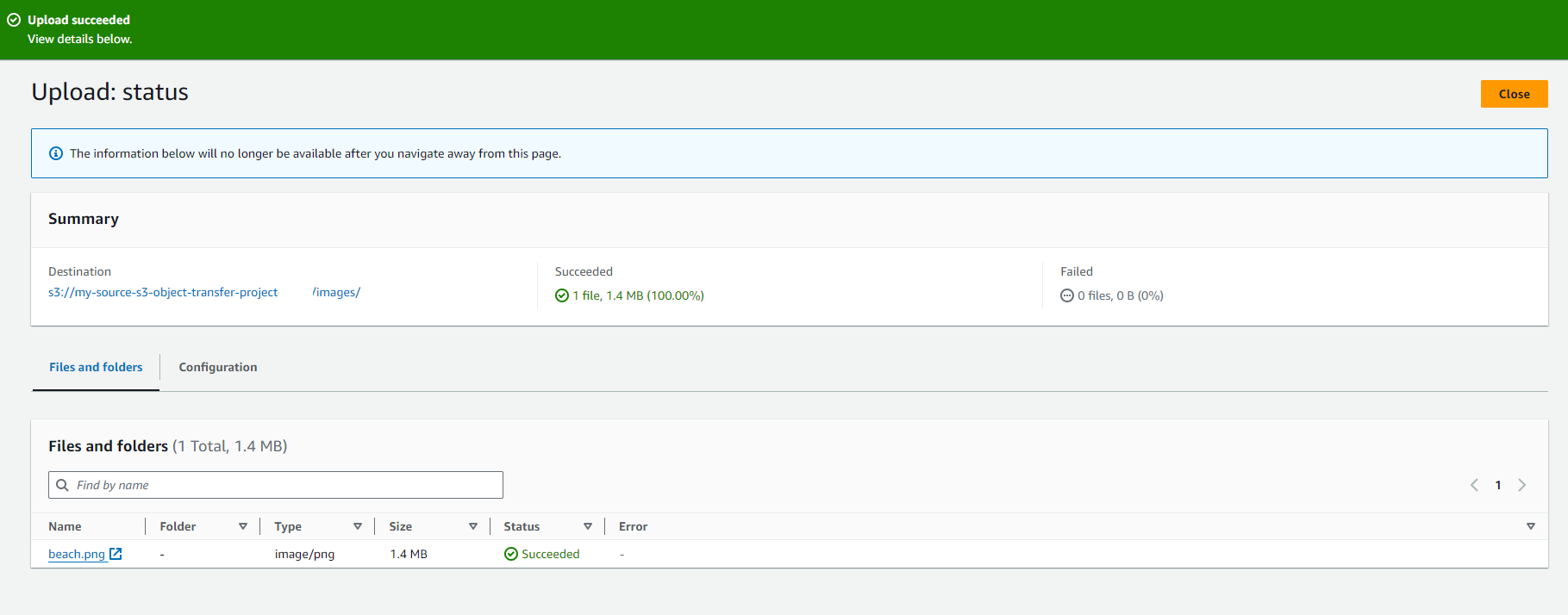

This time, when I uploaded an image of a beach [Beach.png] into my source S3 bucket, it was automatically picked up by Lambda, which copied the object to the destination S3 bucket and then deleted it from the source S3 bucket.

Result!! A working automation pipeline!!

This serverless, event-driven architecture shows that a scalable and resilient pipeline can be built with only a few AWS services.

I also fancy drinking a coffee while sitting on a beach!

Some of the highlights…

New IAM Policy:

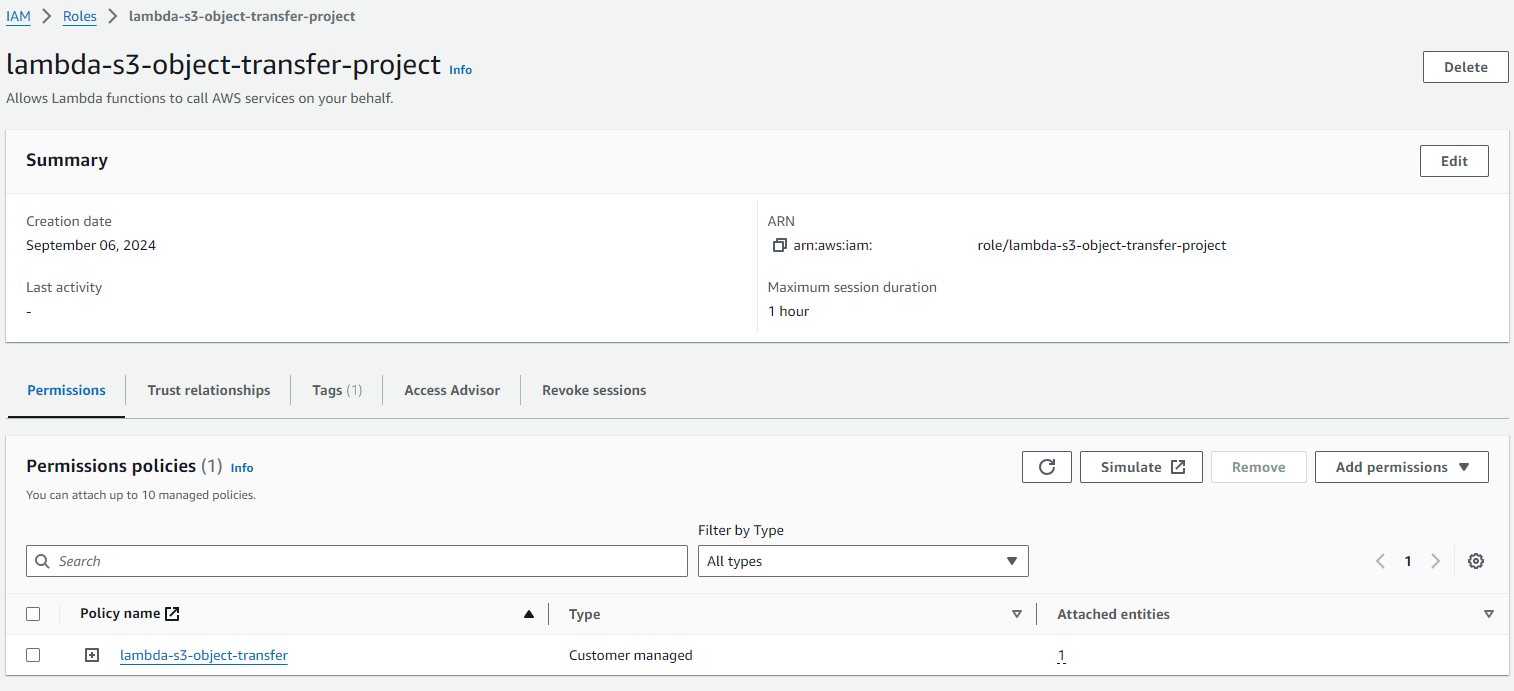

New IAM Role:

Source data S3 bucket:

Destination data S3 bucket:

Lambda Function:

Lambda role:

Function Code:

I uploaded a Coffee image to my source location and then manually triggered the Lambda code:

The manual test was triggered:

Transfer successful:

Automation trigger configured:

S3 event trigger in place:

New ‘Beach’ file uploaded:

Destination S3 bucket successfully received Beach image object:

Source S3 bucket file automatically removed:

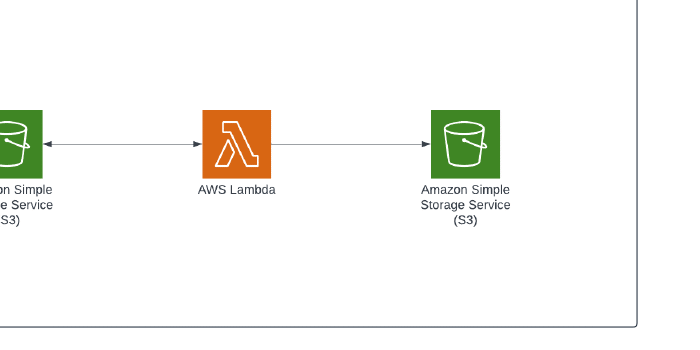

My interpretation of the architecture:

I hope you have enjoyed the article!