Building an EKS cluster and mounting an S3 bucket within a pod using the CSI Driver

Journey: 📊 Community Builder 📊

Subject matter: Building on AWS

Task: Building an EKS cluster and mounting an S3 bucket within a pod using the CSI Driver!

This week, I used CloudFormation to build out an EKS Cluster and then mount an S3 bucket into a pod using the Container Storage Interface (CSI).

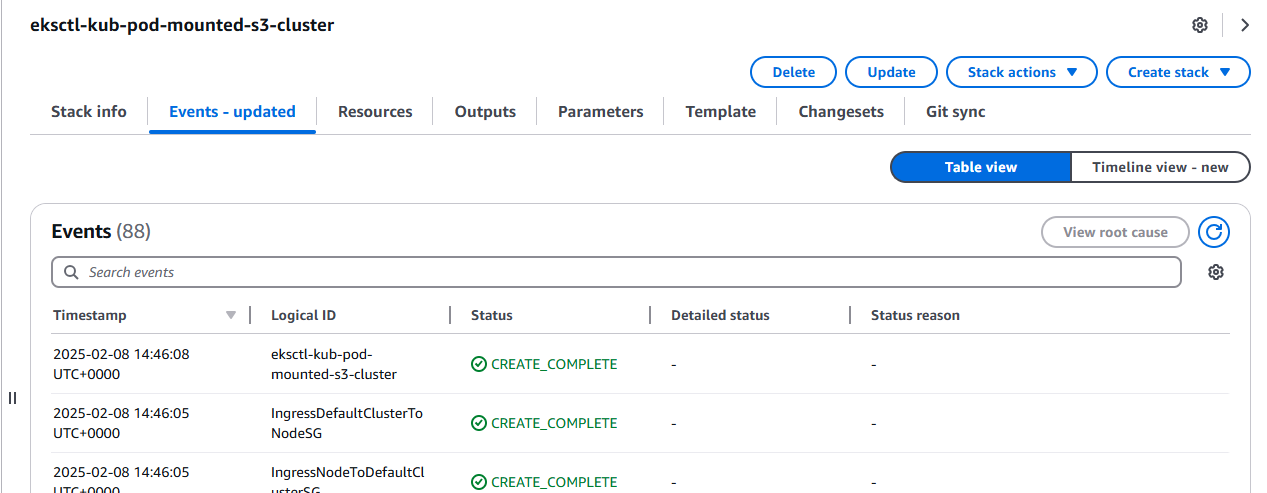

In this scenario, I ran the CLI tools from VS Code to create my cluster, with CloudFormation keeping me updated on build progress across the various stacks it generated.
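eksctl builds the cluster by creating CloudFormation stacks (one for the cluster control plane and one per node group), which is why progress shows up stack by stack. As a rough sketch, here is a minimal eksctl `ClusterConfig` generated from Python. The cluster name, region, node group name, instance type and node count are hypothetical placeholders, not the values from this build. Because JSON is valid YAML, the printed output should be usable with `eksctl create cluster -f cluster.json`, though treat that as an assumption to verify.

```python
import json


def eksctl_cluster_config(name: str, region: str) -> dict:
    """Build a minimal eksctl ClusterConfig as a plain dict.

    All names and sizes below are hypothetical placeholders.
    """
    return {
        "apiVersion": "eksctl.io/v1alpha5",
        "kind": "ClusterConfig",
        "metadata": {"name": name, "region": region},
        # Ask eksctl to create the IAM OIDC identity provider for the cluster,
        # which IAM roles for service accounts depend on.
        "iam": {"withOIDC": True},
        "managedNodeGroups": [
            {
                "name": "ng-1",               # hypothetical node group name
                "instanceType": "t3.medium",  # hypothetical instance type
                "desiredCapacity": 2,         # hypothetical node count
            }
        ],
    }


if __name__ == "__main__":
    # Print the config; redirect to cluster.json to hand it to eksctl.
    print(json.dumps(eksctl_cluster_config("s3-csi-demo", "eu-west-2"), indent=2))
```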

Resource credit: This architecture was created using guidance from Ahmed Badur.

What did I use to build this environment?

- Visual Studio Code platform.

- AWS CLI.

- kubectl CLI.

- eksctl CLI.

- AWS CloudFormation.

- AWS Management Console.

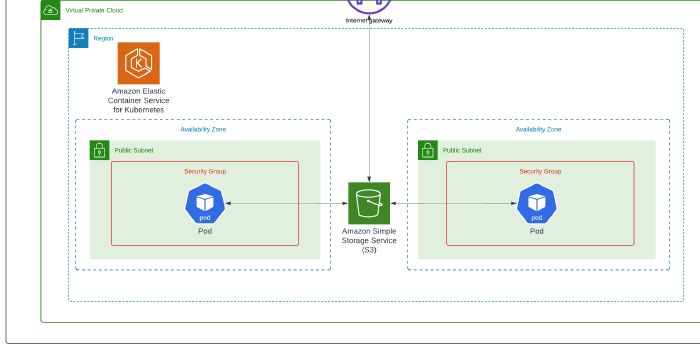

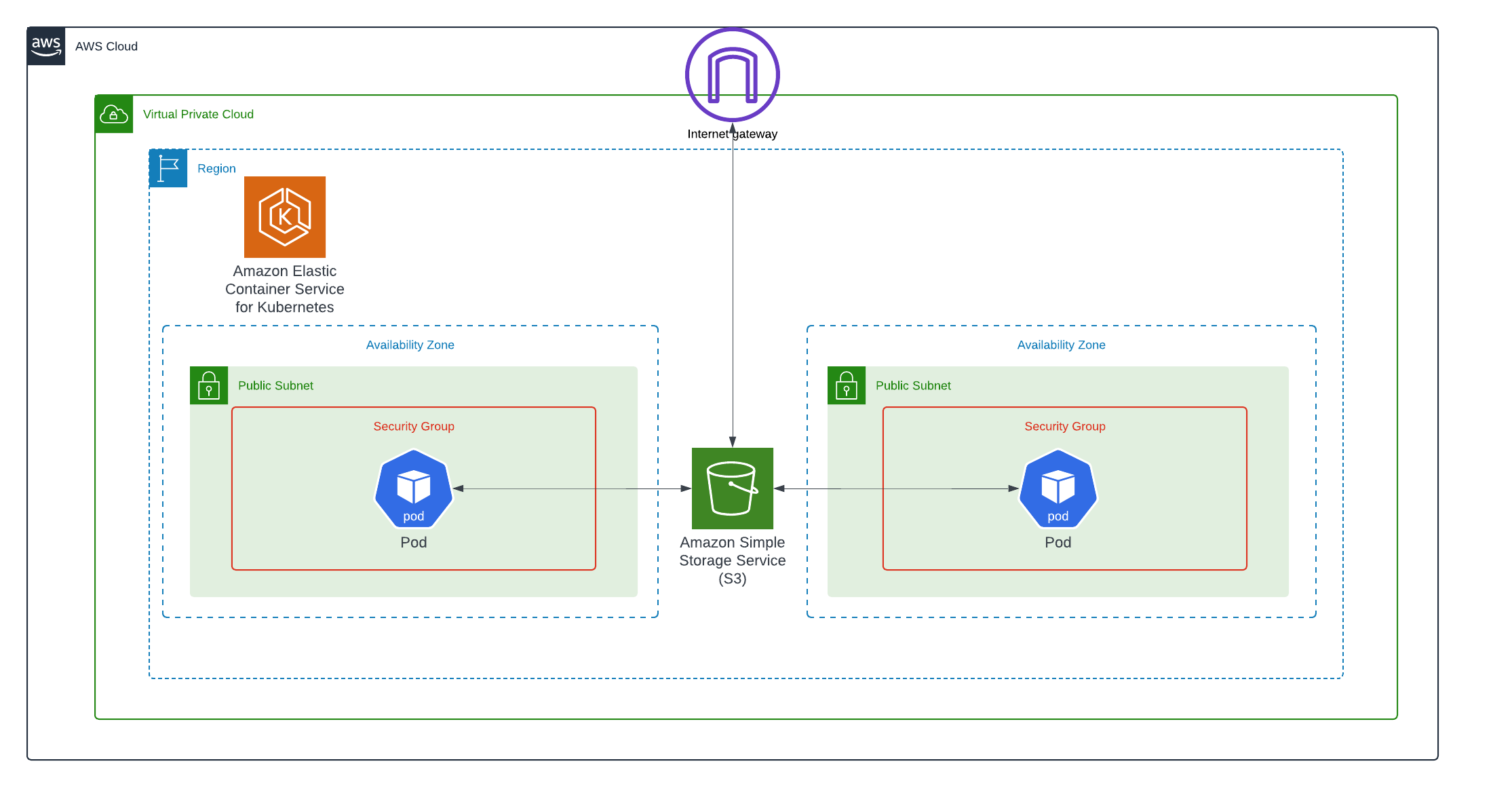

What is built?

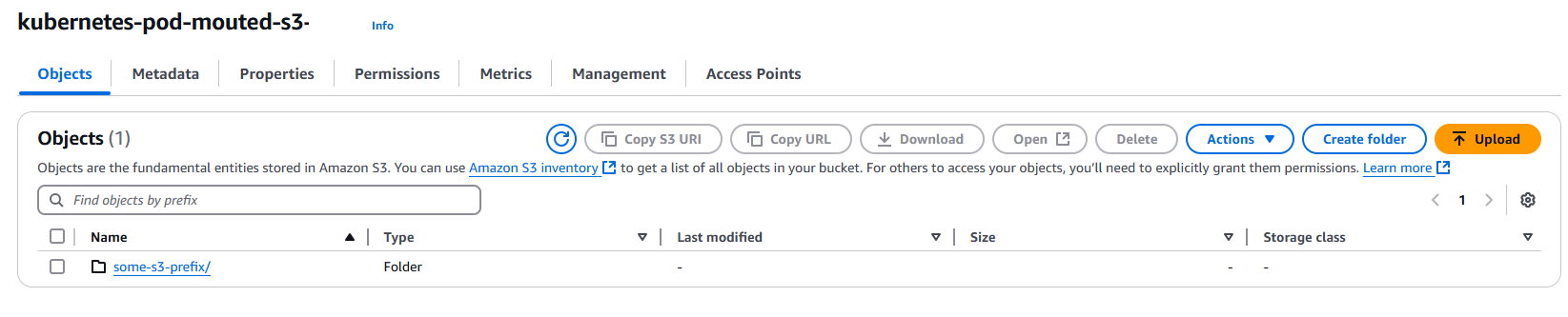

- An S3 bucket.

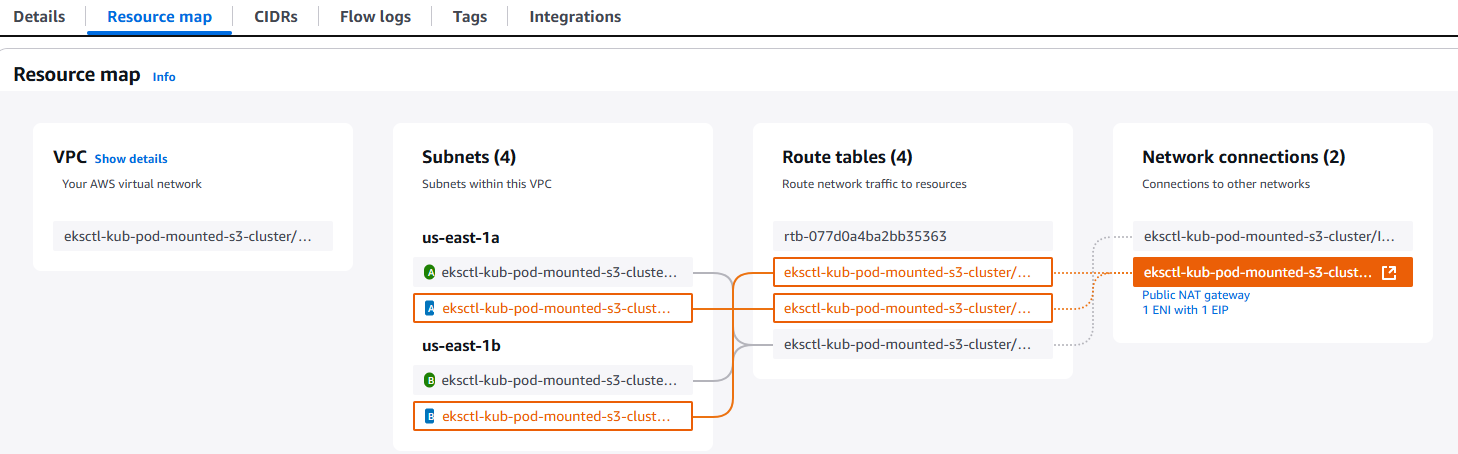

- A single VPC.

- Dual Availability Zones.

- Public Subnets.

- Custom Route Tables.

- Internet and NAT Gateway.

- NACLs and Security Groups.

- An Amazon EKS Cluster.

- Mountpoint for Amazon S3 CSI Driver.

- IAM OIDC identity provider.

In this task, I integrated S3 with a Kubernetes pod using the S3 CSI driver, exposing the bucket as a file system mounted inside the pod (although not a fully POSIX-compliant one).

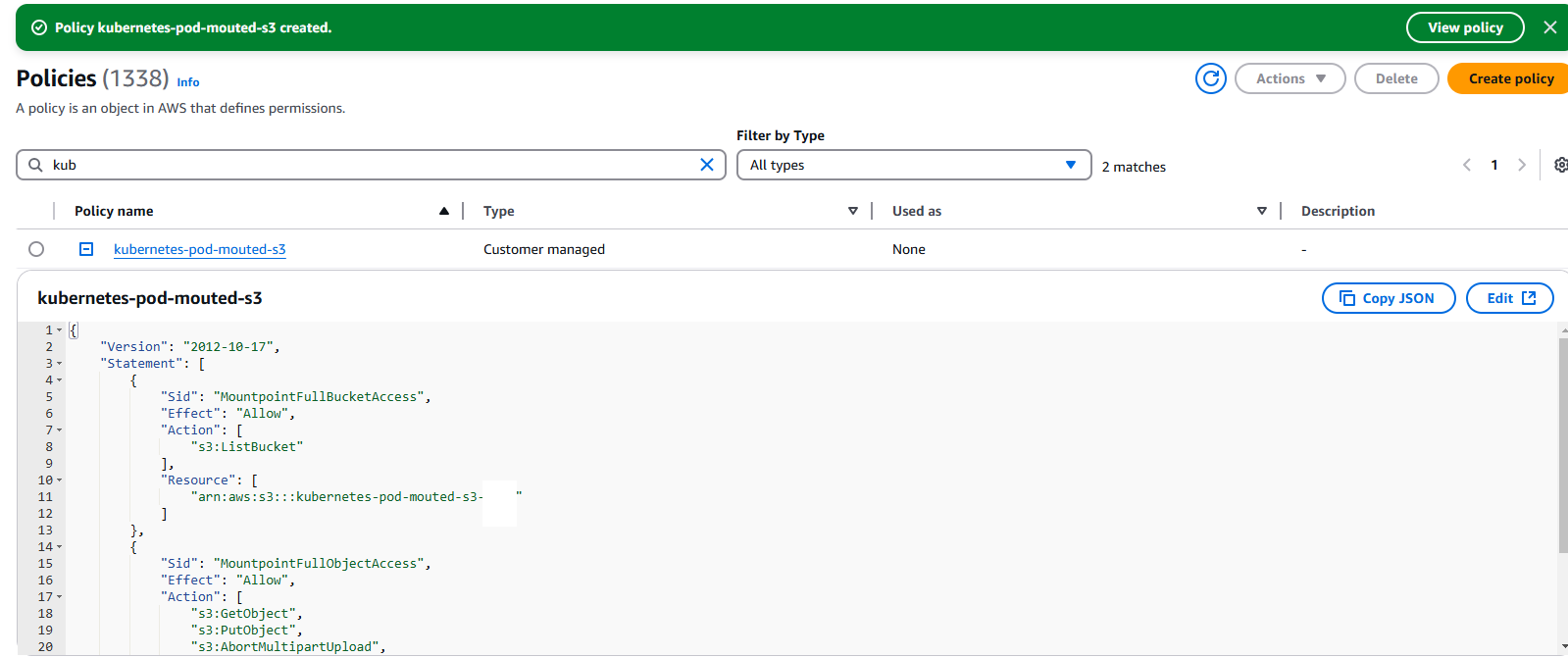

First, I created a new policy in IAM, then created a new EKS cluster and associated it with an IAM OpenID Connect (OIDC) identity provider.
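The IAM policy is what lets the driver read and write the bucket. A minimal sketch, modeled on the example policy in the AWS documentation for Mountpoint for Amazon S3, is below; the bucket name is hypothetical, and you may want to drop `s3:DeleteObject` or `s3:PutObject` for a read-only mount. The printed JSON could be saved to a file and passed to `aws iam create-policy --policy-name <name> --policy-document file://policy.json`.

```python
import json


def mountpoint_s3_policy(bucket: str) -> dict:
    """IAM policy document granting read/write access to a single bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, so it targets the bucket ARN.
                "Sid": "MountpointFullBucketAccess",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Object-level actions target the objects inside the bucket.
                "Sid": "MountpointFullObjectAccess",
                "Effect": "Allow",
                "Action": [
                    "s3:GetObject",
                    "s3:PutObject",
                    "s3:AbortMultipartUpload",
                    "s3:DeleteObject",
                ],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


if __name__ == "__main__":
    # "my-csi-demo-bucket" is a hypothetical bucket name.
    print(json.dumps(mountpoint_s3_policy("my-csi-demo-bucket"), indent=2))
```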

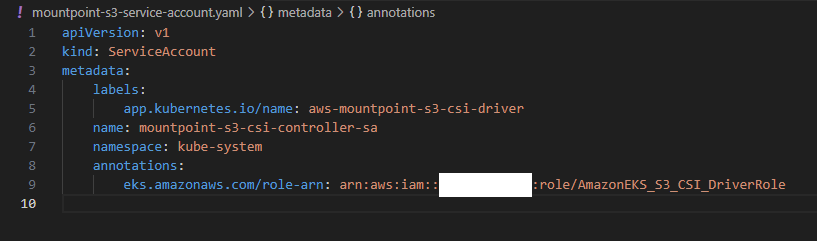

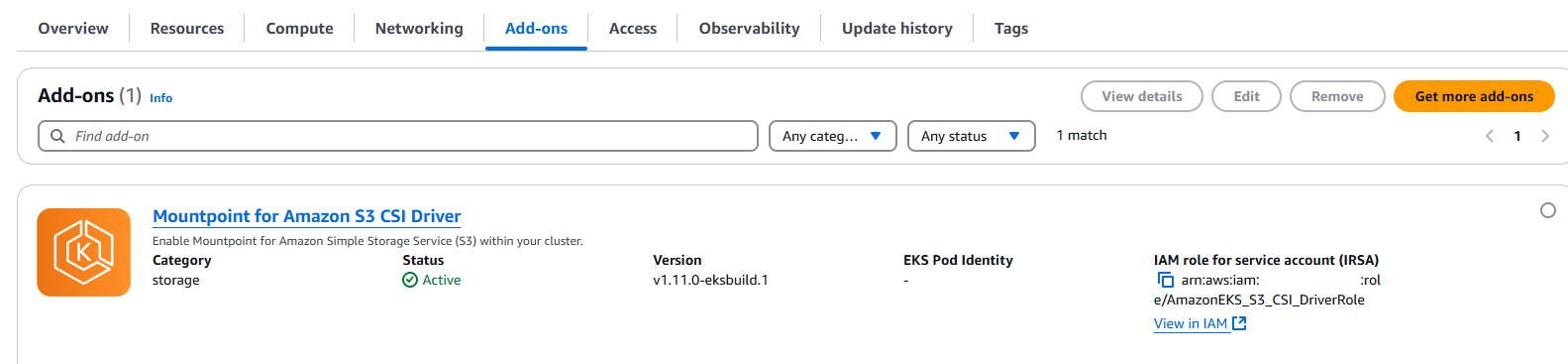

After checking that the OIDC provider association was approved, I created an IAM service account (an IAM role bound to a Kubernetes service account). Once this was in place, it was time to install the Mountpoint for Amazon S3 CSI driver and associate it with the new IAM service account.
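Under the hood, an IAM service account is an IAM role whose trust policy only lets one Kubernetes service account assume it through the cluster's OIDC provider. A minimal sketch of that trust policy follows. The account ID and OIDC issuer are hypothetical, and the `kube-system/s3-csi-driver-sa` service account name is an assumption for illustration, so check it against the name your driver installation actually uses.

```python
import json


def irsa_trust_policy(account_id: str, oidc_issuer: str,
                      namespace: str, service_account: str) -> dict:
    """Trust policy letting one Kubernetes service account assume an IAM role."""
    # IAM identifies the provider by the issuer URL without its https:// scheme.
    issuer = oidc_issuer.removeprefix("https://")
    provider_arn = f"arn:aws:iam::{account_id}:oidc-provider/{issuer}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": provider_arn},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Only this exact service account may assume the role...
                        f"{issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                        # ...and only with tokens minted for AWS STS.
                        f"{issuer}:aud": "sts.amazonaws.com",
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical account ID and issuer URL.
    policy = irsa_trust_policy(
        "111122223333",
        "https://oidc.eks.eu-west-2.amazonaws.com/id/EXAMPLE",
        "kube-system",
        "s3-csi-driver-sa",
    )
    print(json.dumps(policy, indent=2))
```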

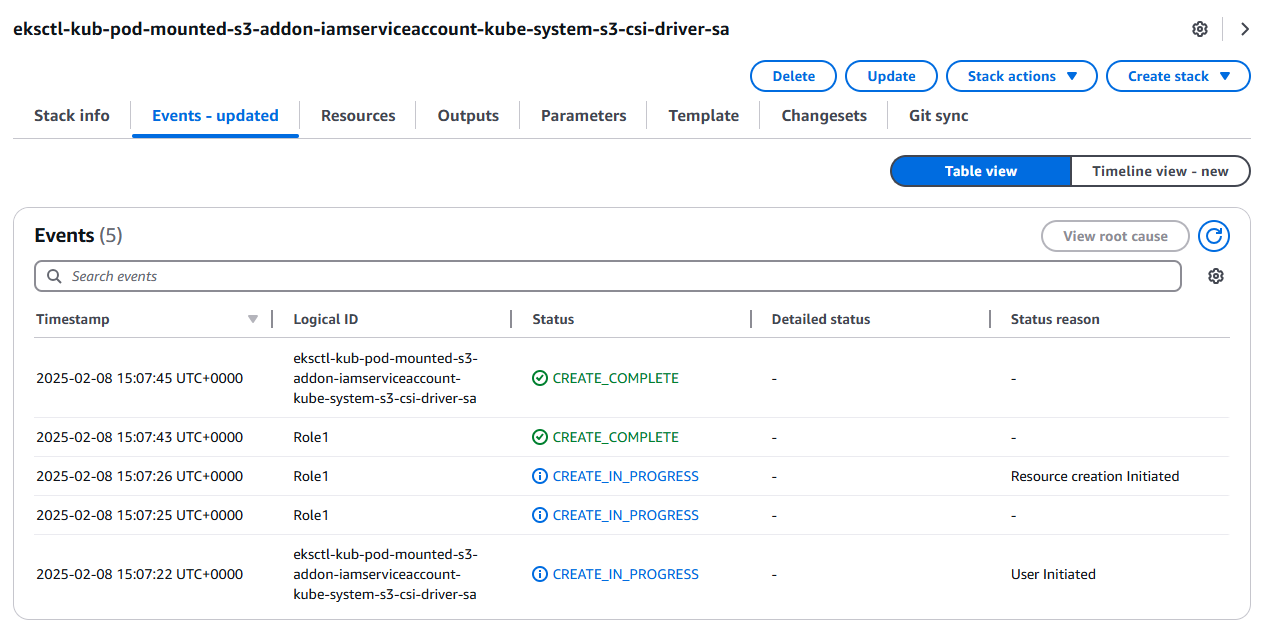

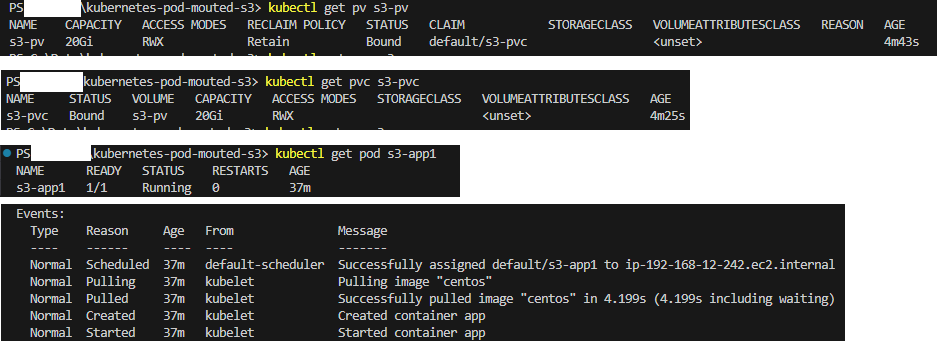

I added the Mountpoint driver to the new EKS cluster and then used CloudFormation again to bring the Kubernetes pod online.
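The S3 CSI driver uses static provisioning: you declare a PersistentVolume that points at an existing bucket, plus a PersistentVolumeClaim bound to it. Below is a sketch that emits both as a Kubernetes `List` that `kubectl apply -f` accepts. The bucket, region, volume and claim names are hypothetical; the `1200Gi` capacity is a placeholder Kubernetes requires but S3 ignores. The `claimRef` pre-binds the volume to one claim, which avoids another claim grabbing it.

```python
import json


def s3_static_volume(bucket: str, region: str, pv_name: str = "s3-pv",
                     pvc_name: str = "s3-claim", namespace: str = "default") -> dict:
    """PersistentVolume + PersistentVolumeClaim for an existing S3 bucket."""
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": pv_name},
        "spec": {
            "capacity": {"storage": "1200Gi"},  # required field, ignored by S3
            "accessModes": ["ReadWriteMany"],   # many pods may mount it
            "storageClassName": "",             # empty: static provisioning
            "mountOptions": ["allow-delete", f"region {region}"],
            # Reserve this volume for exactly one claim.
            "claimRef": {"namespace": namespace, "name": pvc_name},
            "csi": {
                "driver": "s3.csi.aws.com",
                "volumeHandle": "s3-csi-driver-volume",  # must be unique per cluster
                "volumeAttributes": {"bucketName": bucket},
            },
        },
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": pvc_name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": "",  # must match the PV, or a default class may be applied
            "resources": {"requests": {"storage": "1200Gi"}},
            "volumeName": pv_name,   # bind directly to the PV above
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}


if __name__ == "__main__":
    # Hypothetical bucket and region.
    print(json.dumps(s3_static_volume("my-csi-demo-bucket", "eu-west-2"), indent=2))
```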

I did hit a snag, right at the last step, when my pod failed to come online. I plan to document how I troubleshot the issue and share the fix on my blog, in the hope it helps others who run into the same problem.

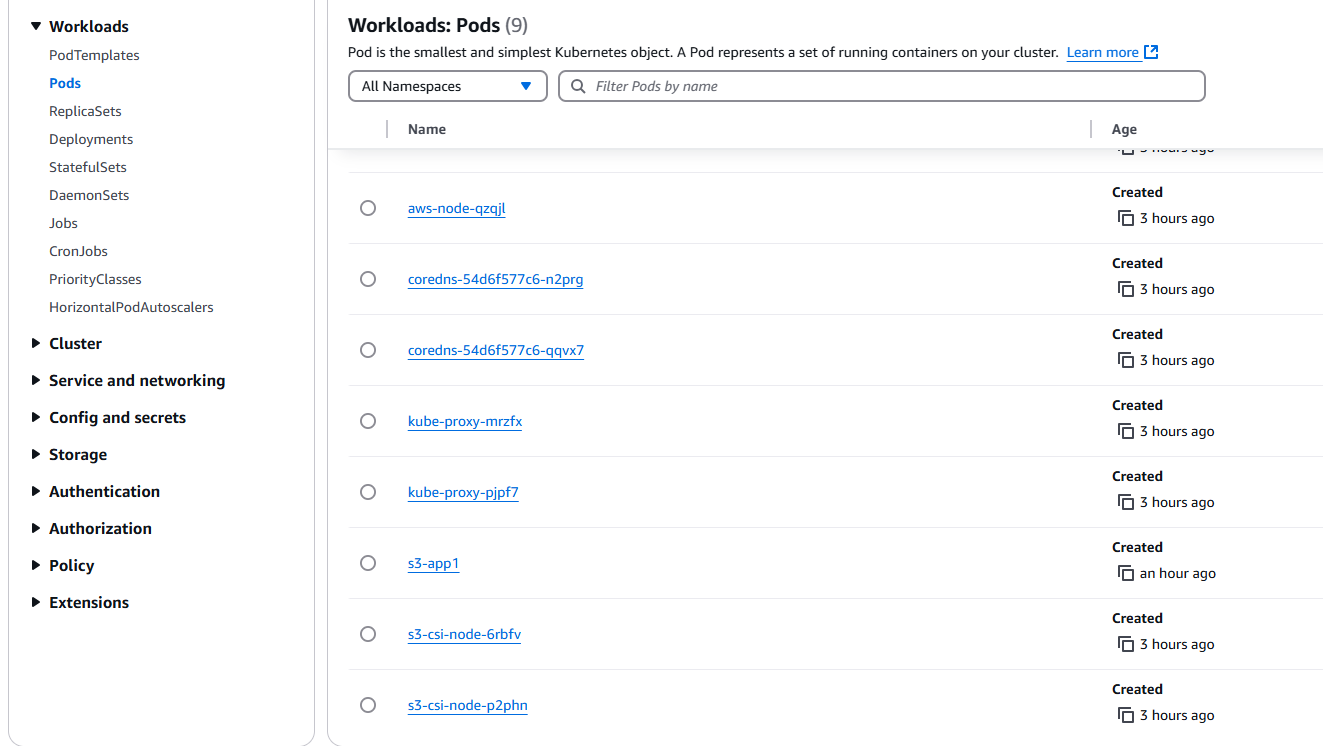

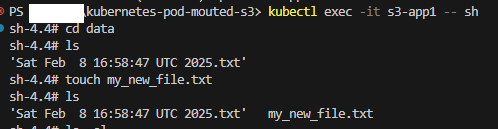

Once the pod was running, I opened an SSH session to the pod and wrote a file to the mounted directory.

It showed up in my S3 bucket immediately, showing how S3 can serve as scalable, distributed storage shared across multiple pods!
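Sharing works because the claim is `ReadWriteMany`: any number of pods can reference the same claim and see the same bucket. A sketch that generates several pods mounting one claim is below. The claim name, pod names, `busybox` image, sleep command and `/data` mount path are all hypothetical choices for illustration.

```python
import json


def pod_with_s3_volume(pod_name: str, claim_name: str, mount_path: str = "/data") -> dict:
    """A pod that mounts an existing PersistentVolumeClaim at mount_path."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": "busybox",                     # hypothetical image
                    "command": ["sh", "-c", "sleep 3600"],  # keep the pod alive
                    "volumeMounts": [{"name": "s3-storage", "mountPath": mount_path}],
                }
            ],
            "volumes": [
                {"name": "s3-storage", "persistentVolumeClaim": {"claimName": claim_name}}
            ],
        },
    }


def shared_pods(claim_name: str, count: int) -> dict:
    """A kubectl-applicable List of `count` pods all mounting the same claim."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [pod_with_s3_volume(f"s3-app-{i}", claim_name) for i in range(count)],
    }


if __name__ == "__main__":
    print(json.dumps(shared_pods("s3-claim", 3), indent=2))
```

A file written under `/data` in one of these pods is stored as an object in the bucket, so the other pods can list and read it through their own mounts.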

Key articles that helped me troubleshoot issues:

https://foxutech.medium.com/how-to-troubleshoot-kubernetes-pod-in-pending-state-8e18a3732132

https://vmninja.wordpress.com/2021/08/10/kubernetes-volume-x-already-bound-to-a-different-claim/
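Both articles deal with a claim that never binds, which leaves the pod stuck in `Pending`. As a hypothetical offline helper (not the fix I used, which I'll cover separately), the sketch below compares PV and PVC manifests for common static-binding mismatches: a `claimRef` reserved for a different claim, a `volumeName` pointing elsewhere, mismatched storage classes, and access modes the PV doesn't offer. It only inspects manifests; a volume left `Released` with a stale `claimRef` in a live cluster won't be caught here.

```python
def binding_problems(pv: dict, pvc: dict) -> list:
    """Return human-readable reasons a static PV/PVC pair may fail to bind."""
    problems = []
    pv_name = pv["metadata"]["name"]
    pvc_name = pvc["metadata"]["name"]
    pvc_ns = pvc["metadata"].get("namespace", "default")
    pv_spec, pvc_spec = pv["spec"], pvc["spec"]

    # A claimRef reserves the PV for one specific claim.
    ref = pv_spec.get("claimRef")
    if ref and (ref.get("namespace", "default"), ref.get("name")) != (pvc_ns, pvc_name):
        problems.append(
            f"PV {pv_name} is reserved for {ref.get('namespace', 'default')}/{ref.get('name')}, "
            f"not {pvc_ns}/{pvc_name}"
        )

    # volumeName on the claim must point at this PV (if set at all).
    if pvc_spec.get("volumeName") not in (None, pv_name):
        problems.append(f"PVC {pvc_name} asks for volume {pvc_spec['volumeName']}, not {pv_name}")

    # An omitted PVC storageClassName may pick up the cluster default class.
    pv_class = pv_spec.get("storageClassName", "")
    if pvc_spec.get("storageClassName") != pv_class:
        problems.append(f"storageClassName differs: PV={pv_class!r}, PVC={pvc_spec.get('storageClassName')!r}")

    # Requested access modes must all be offered by the PV.
    if not set(pvc_spec.get("accessModes", [])) <= set(pv_spec.get("accessModes", [])):
        problems.append("PVC requests access modes the PV does not offer")

    return problems
```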

Some of the highlights…

IAM permissions:

New S3 bucket:

EKS Cluster:

Service account:

Mounted cluster:

K8s pod creation:

VPC resource map:

S3 CSI driver:

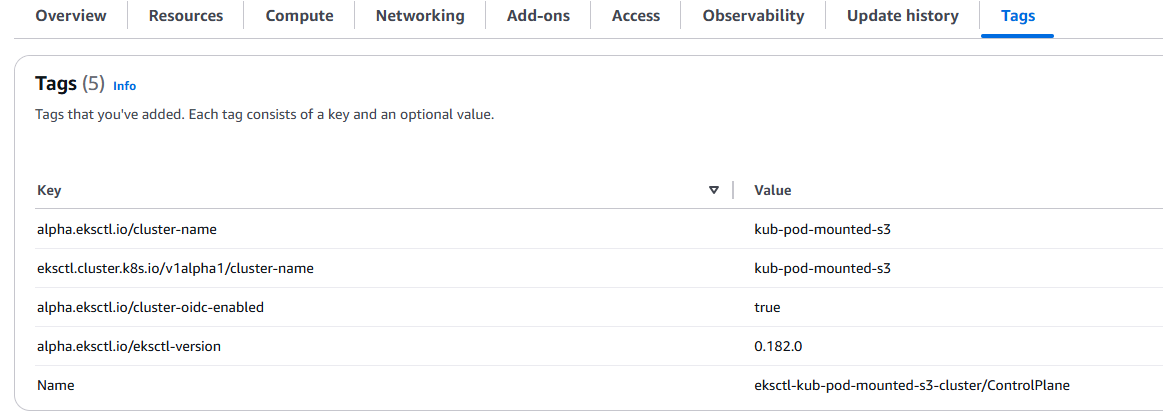

Resource tagging:

Resources online:

SSH connection:

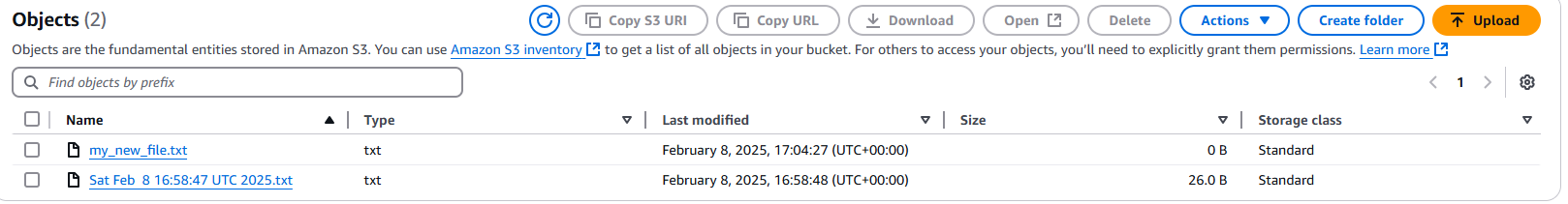

Populated S3 bucket:

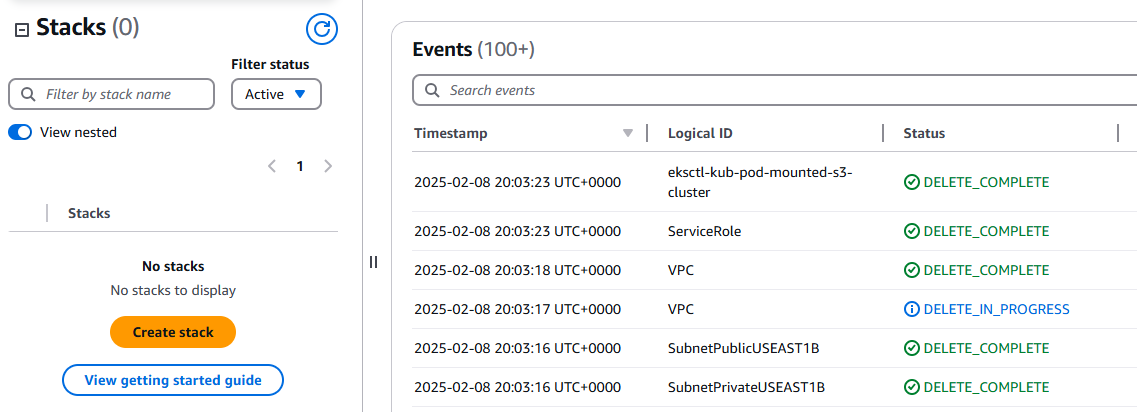

CloudFormation cluster deletion:

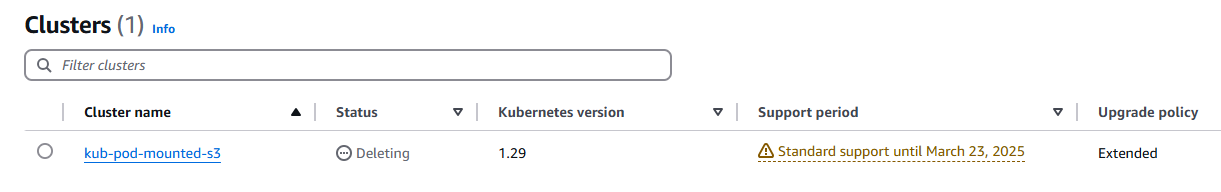

EKS cluster deleting:

Cluster deleted:

My interpretation of the architecture:

I hope you have enjoyed the article!