Building an application on AWS EKS with Fargate using CloudFormation!

Journey: 📊 Community Builder 📊

Subject matter: Building on AWS

Task: Building an application on AWS EKS with Fargate using CloudFormation!

This project practices Availability and Scalability… and perseverance!

Of the six pillars of the AWS Well-Architected Framework, this build addresses four: Operational Excellence, Security, Performance Efficiency, and Reliability.

I will start by saying that this has been one of the most difficult cloud projects I have worked on to date. I ran into one problem after another.

At one point, almost every command I ran was failing.

I was unable to complete my planned activity four times before finally getting it right. This involved many hours spent over four evenings to understand the components and overcome the hurdles.

Persevere!

What is important here is that I didn’t give up. I pushed myself to try different approaches until I reached my goal, and over the coming days I plan to document the problems I hit and how I fixed them, in the hope of helping others.

This week, well actually also a lot of last week, I built a functioning application on Amazon EKS with Fargate.

For this project, I switched away from Terraform and moved to CloudFormation.

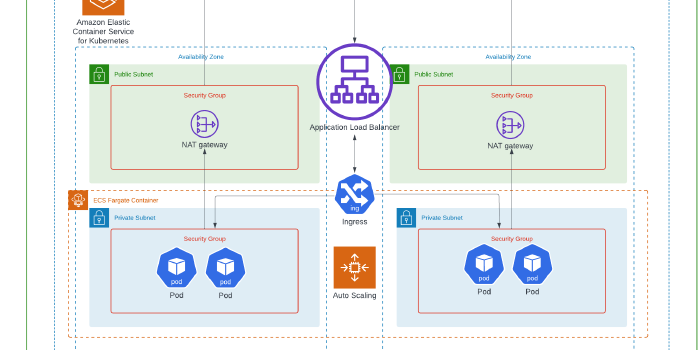

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed Kubernetes service.

Amazon EKS automatically manages the availability and scalability of the Kubernetes control plane nodes responsible for scheduling containers, managing application availability, and storing cluster data.

AWS Fargate can be used with Amazon EKS to run containers without having to manage servers or clusters of Amazon EC2 instances.

A Kubernetes cluster has two main components: the Control Plane (also known as the master node) and the Data Plane (the worker nodes).

In this environment, EKS handles and manages the Control Plane and Fargate manages the Data Plane.

Resource credit: This IaC architecture was built with some guidance from Victor Okoli's article on Medium.

In total, I probably used over 50 websites/forums to troubleshoot and complete the build.

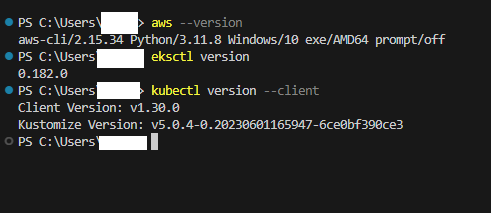

What did I use to build this environment?

- AWS CLI

- kubectl CLI

- eksctl CLI

- Helm

- AWS Management Console

What is built?

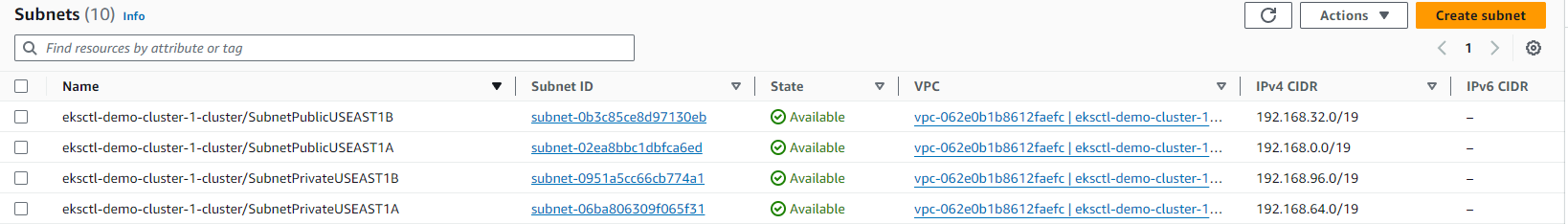

- A single VPC

- Public and Private Subnets

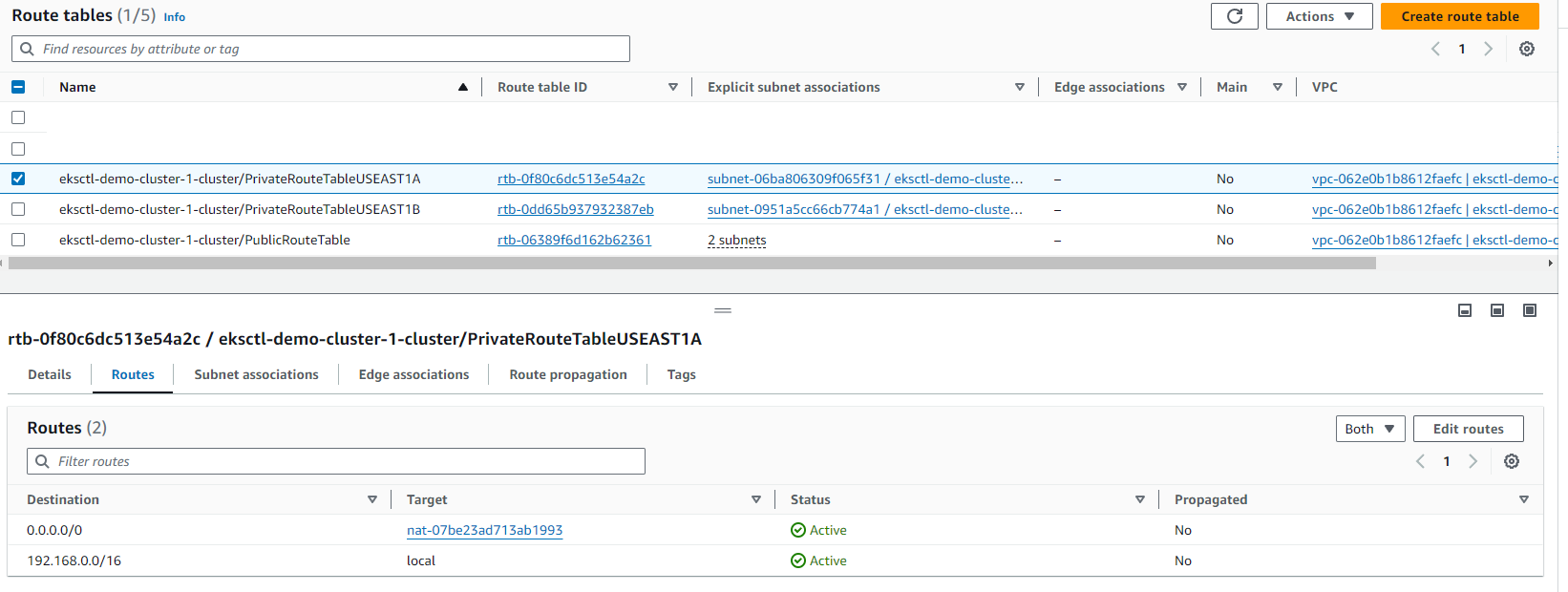

- Custom Route Tables

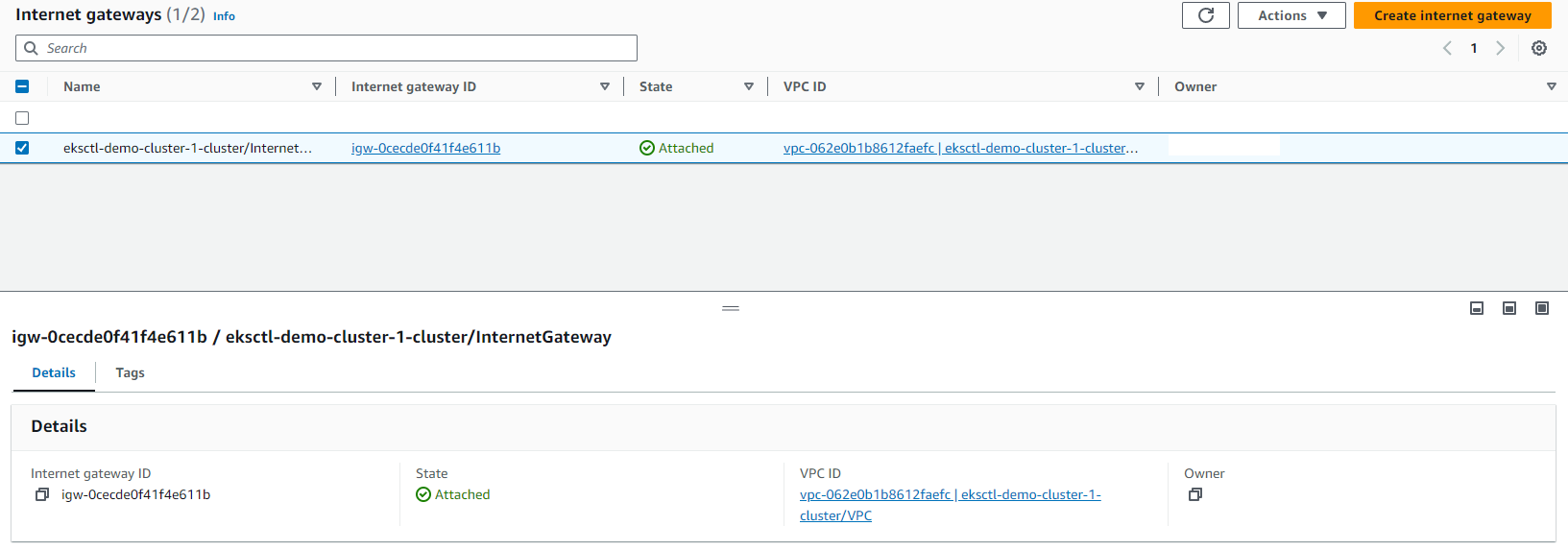

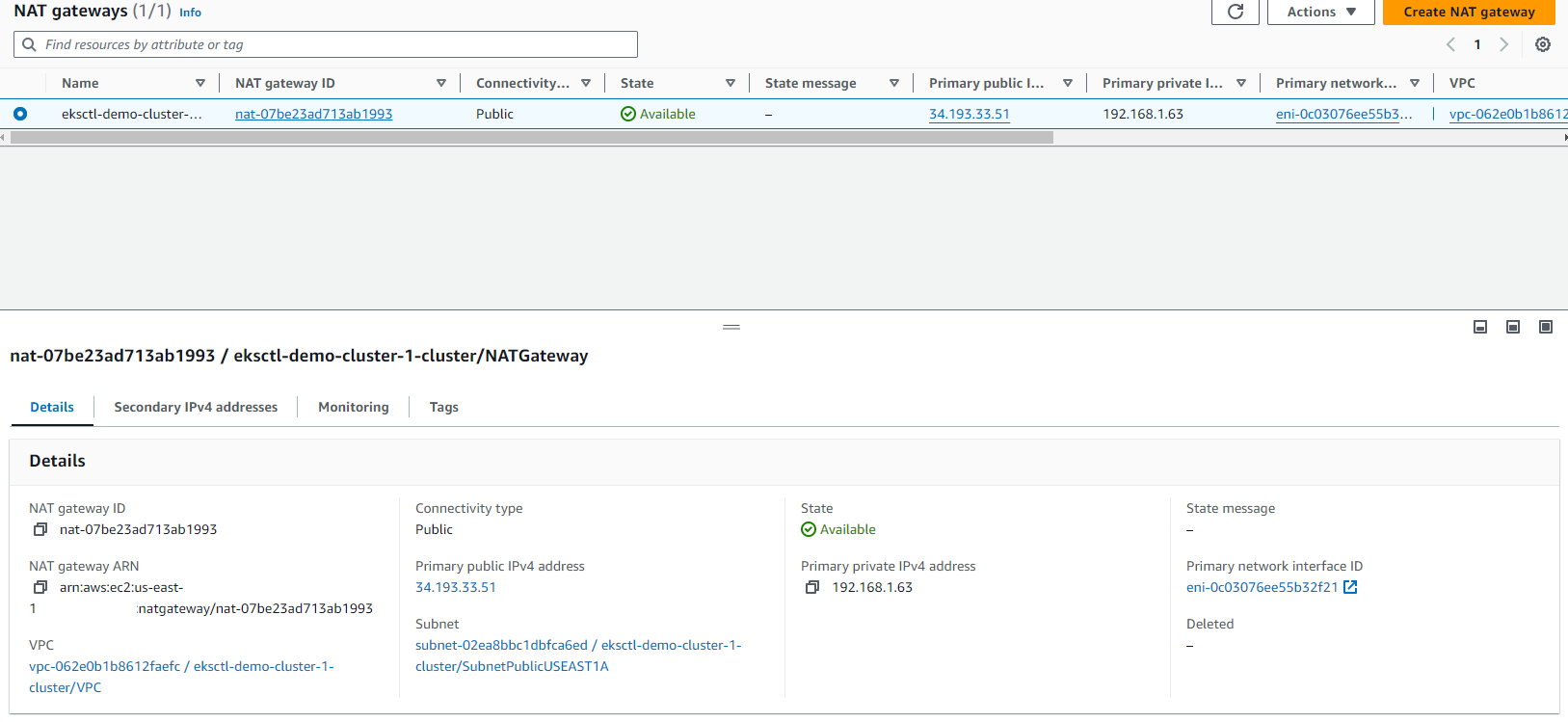

- Internet Gateway and NAT Gateway

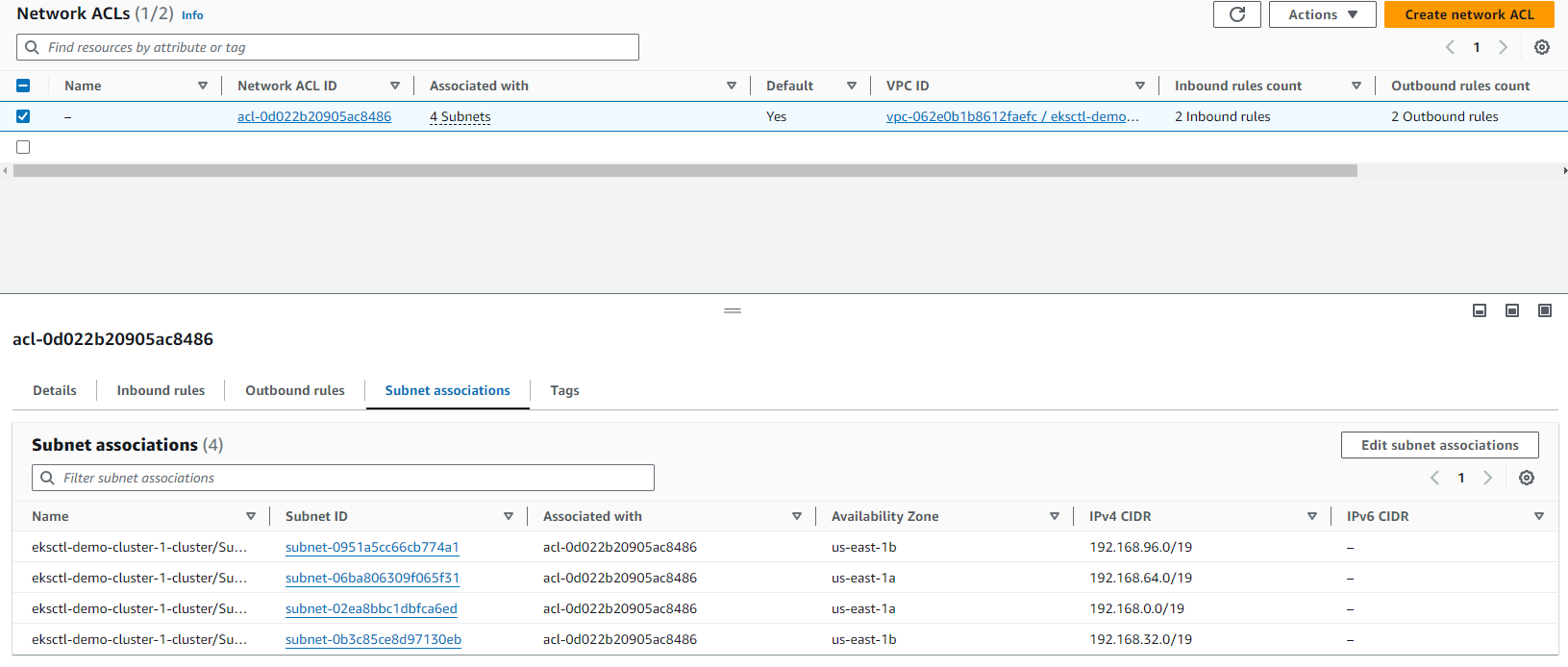

- NACLs and Security Groups

- An Amazon EKS Cluster

- An AWS Fargate deployment

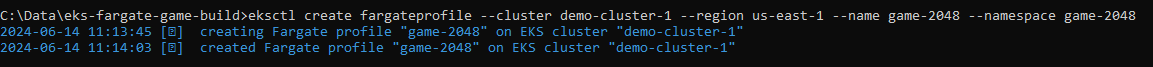

- AWS Fargate Profiles

- An Ingress Controller

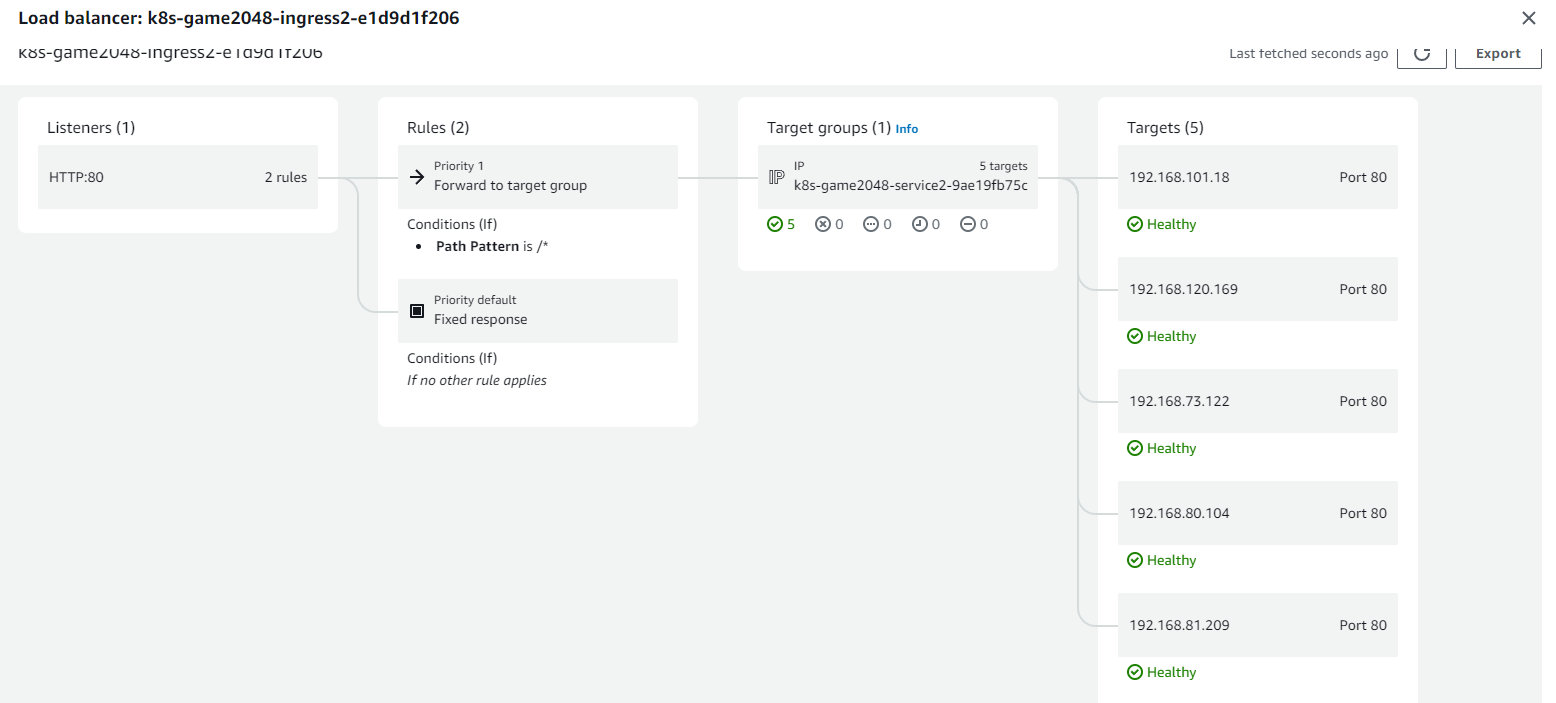

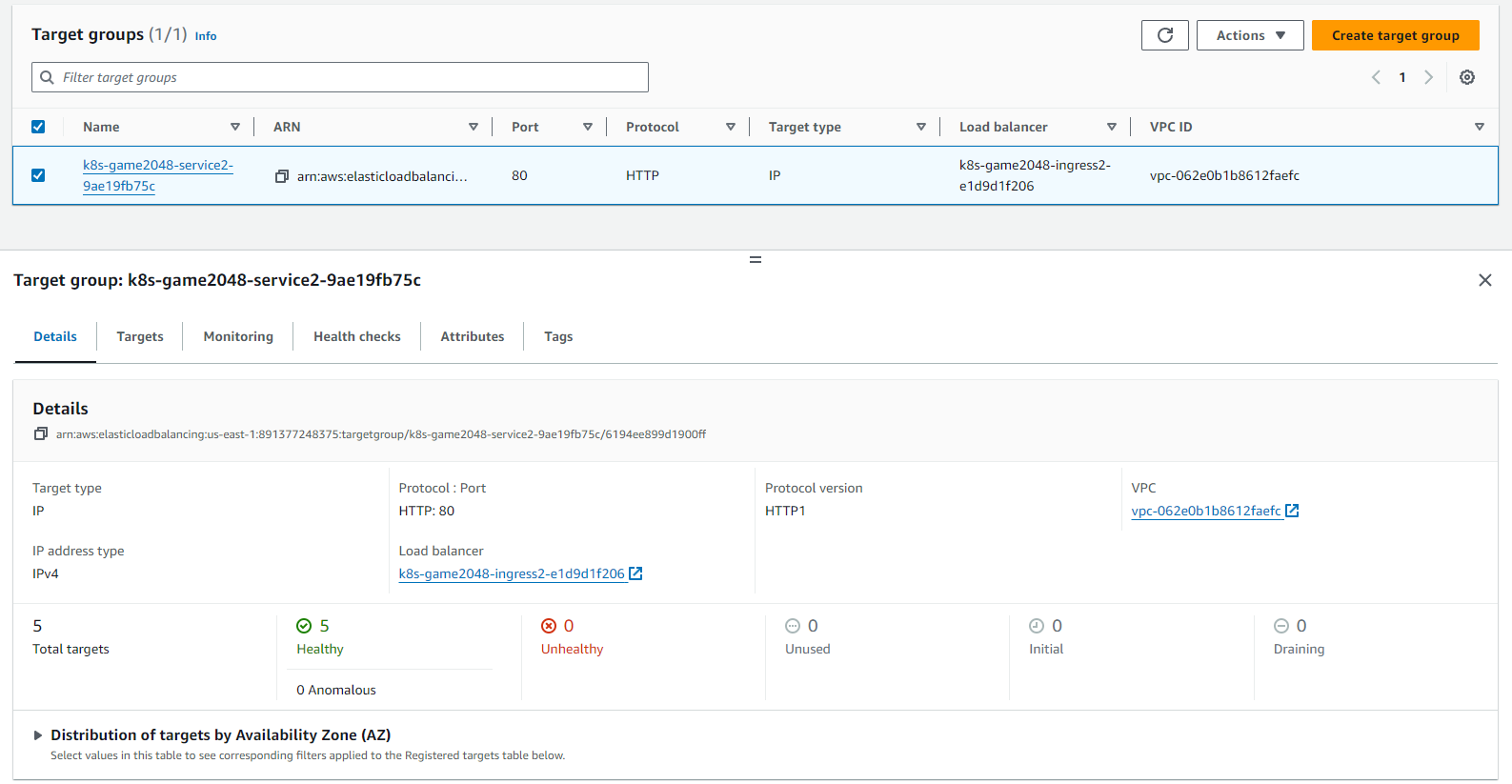

- An Application Load Balancer [ALB]

- ALB Listeners

- ALB Target Groups

- IAM OIDC provider and permissions setup
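To make the network layout above more concrete, here is a minimal Python sketch of how a VPC's address space could be split into public and private subnets. It assumes a hypothetical `192.168.0.0/16` VPC, two Availability Zones and `/19` subnets; these values are illustrative, not the ones from this build. It only uses the standard-library `ipaddress` module.

```python
import ipaddress

# Hypothetical values for illustration only.
VPC_CIDR = "192.168.0.0/16"
AZS = ["us-east-1a", "us-east-1b"]
SUBNET_PREFIX = 19


def plan_subnets(vpc_cidr: str, azs: list[str], prefix: int) -> dict[str, dict[str, str]]:
    """Assign one public and one private subnet per AZ, with no overlaps."""
    vpc = ipaddress.ip_network(vpc_cidr)
    available = 2 ** (prefix - vpc.prefixlen)
    if 2 * len(azs) > available:
        raise ValueError(f"only {available} /{prefix} blocks fit in {vpc_cidr}")
    blocks = vpc.subnets(new_prefix=prefix)  # consecutive, non-overlapping blocks
    plan: dict[str, dict[str, str]] = {}
    for az in azs:
        plan[az] = {
            "public": str(next(blocks)),   # intended for the route table with 0.0.0.0/0 -> internet gateway
            "private": str(next(blocks)),  # intended for the route table with 0.0.0.0/0 -> NAT gateway
        }
    return plan


if __name__ == "__main__":
    for az, subnets in plan_subnets(VPC_CIDR, AZS, SUBNET_PREFIX).items():
        print(az, subnets)
```

The public subnets would then be associated with the custom route table that points at the internet gateway, and the private subnets with the one that points at the NAT gateway.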

More information on EKS and Fargate can be found here: https://docs.aws.amazon.com/whitepapers/latest/overview-deployment-options/amazon-elastic-kubernetes-service.html

https://docs.aws.amazon.com/eks/latest/userguide/fargate.html

Also, the following documentation helped me set up the environment:

https://docs.aws.amazon.com/eks/latest/userguide/install-kubectl.html

https://docs.aws.amazon.com/emr/latest/EMR-on-EKS-DevelopmentGuide/setting-up-eksctl.html

https://docs.aws.amazon.com/eks/latest/userguide/enable-iam-roles-for-service-accounts.html

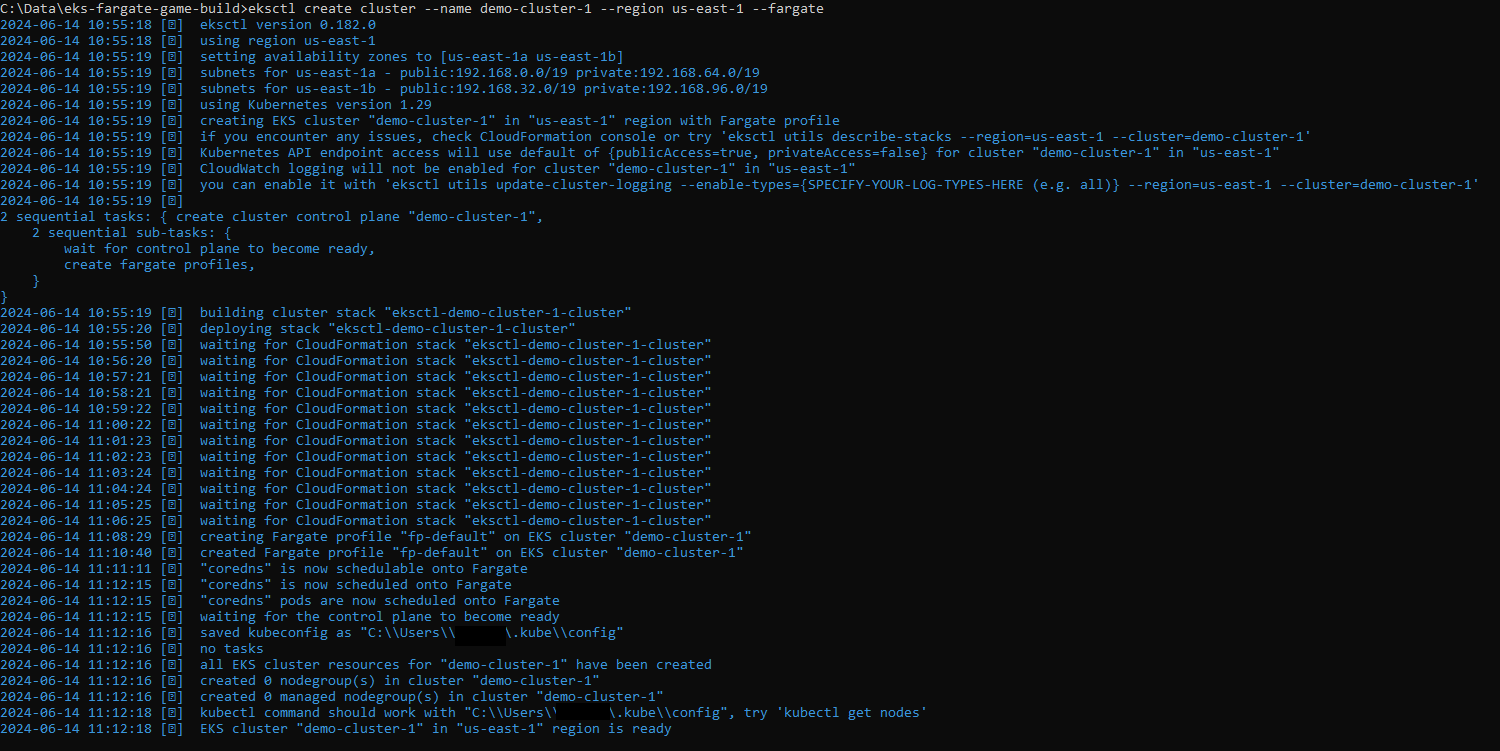

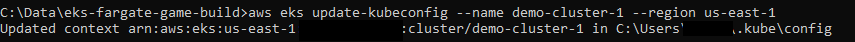

In this task, I used CloudFormation to create an EKS cluster, setting a flag to deploy Fargate with it. After updating my Kubernetes configuration (kubeconfig), I deployed a Fargate profile.
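A pod only runs on Fargate if it matches one of the cluster's Fargate profiles. Each profile holds selectors made of a namespace plus optional labels, and a pod matches a selector when it is in that namespace and carries every label the selector lists. The Python sketch below models that rule with exact string matching only; the profile names, namespaces and labels are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Selector:
    namespace: str
    labels: dict[str, str] = field(default_factory=dict)  # optional label filter


@dataclass
class FargateProfile:
    name: str
    selectors: list[Selector]


def selector_matches(selector: Selector, namespace: str, pod_labels: dict[str, str]) -> bool:
    """True if the pod is in the selector's namespace and has all of its labels."""
    if selector.namespace != namespace:
        return False
    return all(pod_labels.get(key) == value for key, value in selector.labels.items())


def matching_profiles(profiles: list[FargateProfile], namespace: str,
                      pod_labels: dict[str, str]) -> list[str]:
    """Names of the profiles with at least one selector that matches the pod."""
    return [
        profile.name
        for profile in profiles
        if any(selector_matches(s, namespace, pod_labels) for s in profile.selectors)
    ]


# Hypothetical profiles: a default one plus one for the application's namespace.
PROFILES = [
    FargateProfile("fp-default", [Selector("default"), Selector("kube-system")]),
    FargateProfile("fp-app", [Selector("game-2048", {"tier": "web"})]),
]

if __name__ == "__main__":
    print(matching_profiles(PROFILES, "game-2048", {"tier": "web"}))  # ['fp-app']
    print(matching_profiles(PROFILES, "game-2048", {}))               # []
```

The second call returning an empty list is the case to watch for: on a cluster with no EC2 nodes, a pod that no profile claims will typically sit in `Pending`.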

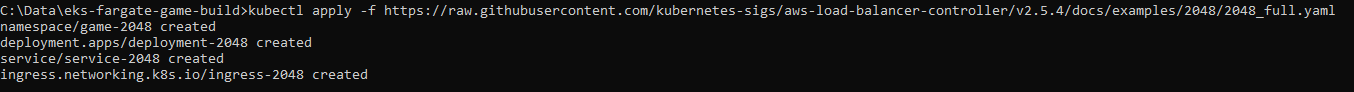

Once this was done, I applied a YAML file defining the Deployment, Service, and Ingress, which creates (or updates) those resources in the cluster.
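For anyone who hasn't written one of these files before, here is a minimal Python sketch that builds the resource definitions as plain dictionaries and writes them out as a single JSON `List`, which `kubectl apply -f` accepts just as it accepts YAML. The namespace, names and container image are hypothetical placeholders. The two `alb.ingress.kubernetes.io` annotations are AWS Load Balancer Controller settings; `target-type: ip` matters here because Fargate pods can't be registered as instance targets, so the ALB has to send traffic to pod IPs directly.

```python
import json

# Hypothetical values: replace with your own namespace, app name and image.
NAMESPACE = "game-2048"
APP = "app-2048"
IMAGE = "public.ecr.aws/example/app-2048:latest"  # placeholder image URI


def build_manifests(namespace: str, app: str, image: str,
                    port: int = 80, replicas: int = 2) -> dict:
    labels = {"app.kubernetes.io/name": app}
    namespace_obj = {"apiVersion": "v1", "kind": "Namespace",
                     "metadata": {"name": namespace}}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"deployment-{app}", "namespace": namespace},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": app,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"service-{app}", "namespace": namespace},
        "spec": {
            "type": "NodePort",
            "selector": labels,  # selects the Deployment's pods
            "ports": [{"port": 80, "targetPort": port, "protocol": "TCP"}],
        },
    }
    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": f"ingress-{app}",
            "namespace": namespace,
            "annotations": {
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                "alb.ingress.kubernetes.io/target-type": "ip",  # pod IPs as ALB targets
            },
        },
        "spec": {
            "ingressClassName": "alb",
            "rules": [{"http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": f"service-{app}", "port": {"number": 80}}},
            }]}}],
        },
    }
    return {"apiVersion": "v1", "kind": "List",
            "items": [namespace_obj, deployment, service, ingress]}


if __name__ == "__main__":
    with open("app.json", "w") as f:
        json.dump(build_manifests(NAMESPACE, APP, IMAGE), f, indent=2)
    # Then: kubectl apply -f app.json
```

The namespace used here has to match a Fargate profile selector; otherwise the pods won't be scheduled onto Fargate.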

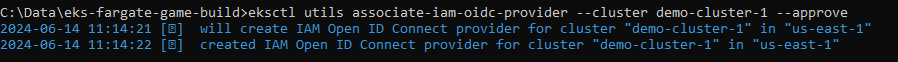

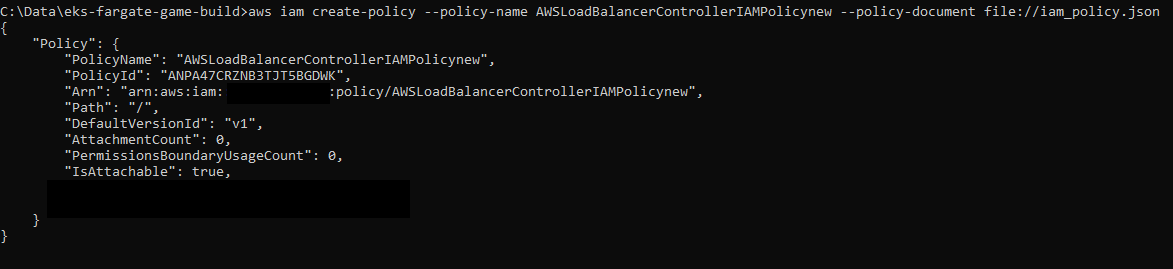

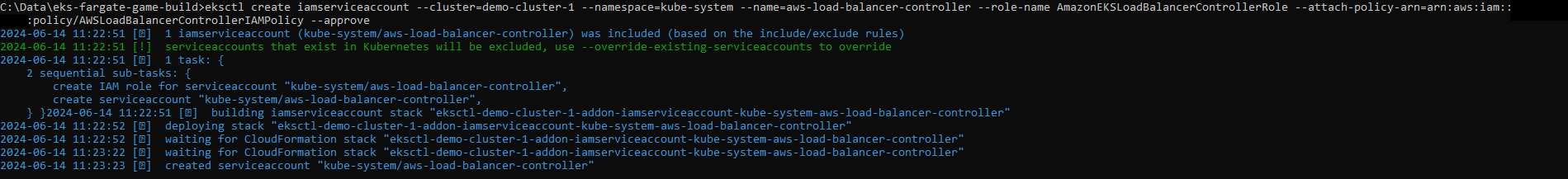

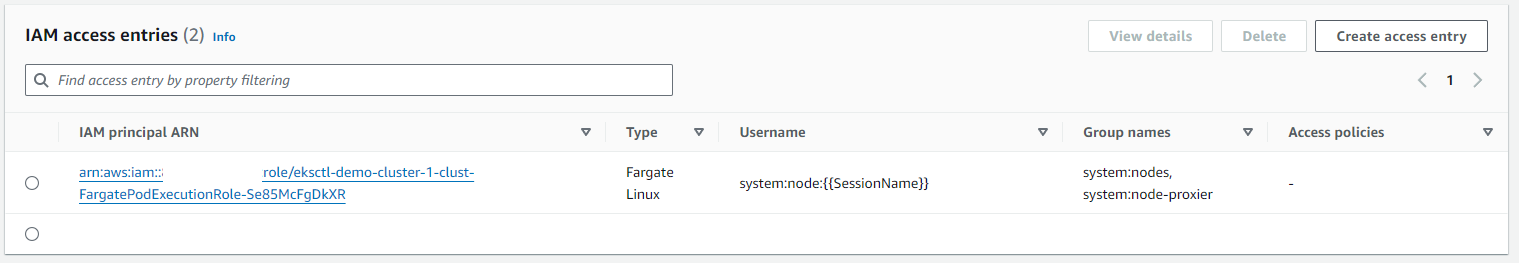

Next, I associated an IAM OIDC provider with the new cluster, then created a new IAM policy and an IAM service account. Finally, I attached the policy to the service account's IAM role.
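The piece that ties the OIDC provider, the IAM role and the Kubernetes service account together (IAM Roles for Service Accounts) is the role's trust policy. Here is a minimal Python sketch that builds one. The account ID and OIDC issuer URL are placeholders in the style of the AWS documentation examples, and the `kube-system` namespace and `aws-load-balancer-controller` service account name are assumptions, not necessarily what this build used.

```python
import json


def irsa_trust_policy(account_id: str, oidc_issuer_url: str,
                      namespace: str, service_account: str) -> dict:
    """Trust policy letting one Kubernetes service account assume an IAM role."""
    # IAM identifies the provider by the issuer URL without its "https://" scheme.
    provider = oidc_issuer_url.removeprefix("https://")
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"},
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {"StringEquals": {
                f"{provider}:aud": "sts.amazonaws.com",
                # Only this namespace/service-account pair may assume the role.
                f"{provider}:sub": f"system:serviceaccount:{namespace}:{service_account}",
            }},
        }],
    }


if __name__ == "__main__":
    policy = irsa_trust_policy(
        account_id="111122223333",  # placeholder
        oidc_issuer_url="https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE",
        namespace="kube-system",
        service_account="aws-load-balancer-controller",
    )
    print(json.dumps(policy, indent=2))
```

The IAM policy with the controller's permissions is attached to the role that carries this trust policy, and the Kubernetes service account is annotated with `eks.amazonaws.com/role-arn` so that pods using it receive credentials for that role.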

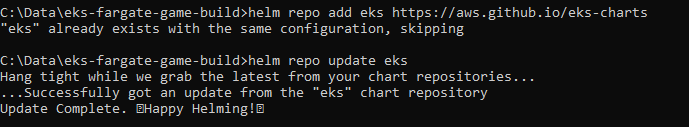

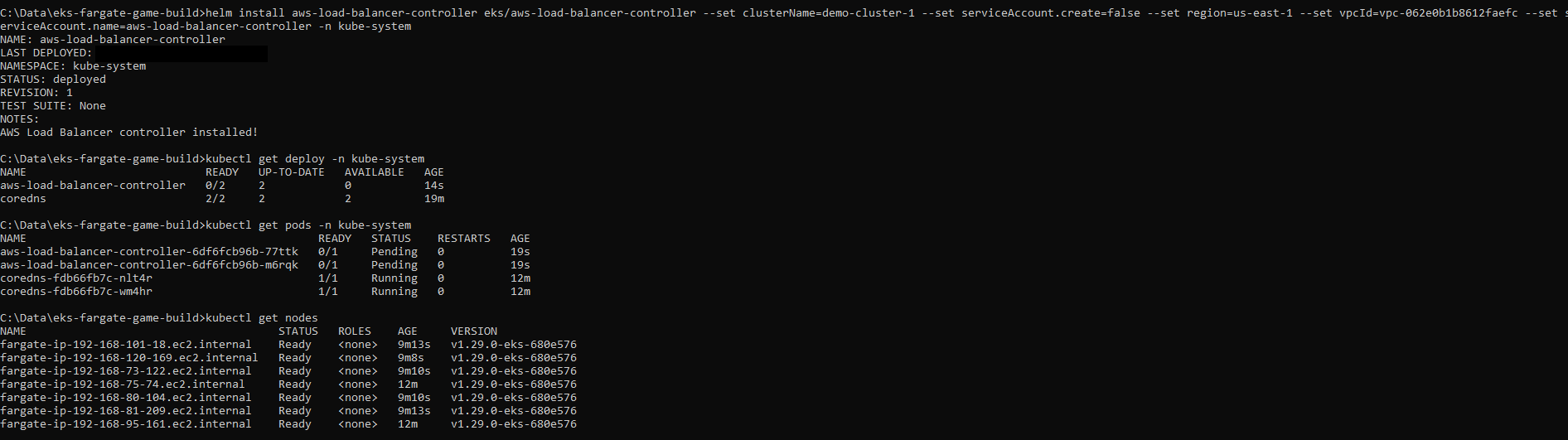

I used a Helm chart to run the controller, with this service account running its pods.

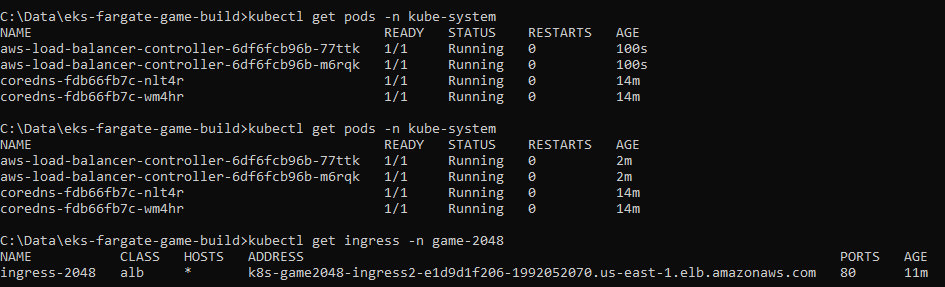

After validating that the pods and services were running, I used Helm to deploy the AWS Load Balancer Controller into my VPC and assigned it the service account, giving it the permissions it needs to manage the load balancer configuration.
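The controller chart is configured through Helm values. Below is a minimal Python sketch, assuming the `aws-load-balancer-controller` chart from the `eks-charts` repository and a service account that already exists from the IAM step; the cluster name, region and VPC ID are placeholders. On Fargate the controller can't read the EC2 instance metadata service to discover its region and VPC, so `region` and `vpcId` are set explicitly, and `serviceAccount.create` is `false` so the chart reuses the existing account instead of creating one.

```python
import json

# Values this sketch treats as mandatory when the controller runs on Fargate.
REQUIRED_ON_FARGATE = ("clusterName", "region", "vpcId")


def controller_values(cluster_name: str, region: str, vpc_id: str,
                      service_account: str = "aws-load-balancer-controller") -> dict:
    return {
        "clusterName": cluster_name,
        "region": region,  # set explicitly: no instance metadata on Fargate
        "vpcId": vpc_id,   # same reason as region
        "serviceAccount": {"create": False, "name": service_account},  # reuse the IRSA account
    }


def missing_fargate_values(values: dict) -> list[str]:
    """Names of required keys that are absent or empty."""
    return [key for key in REQUIRED_ON_FARGATE if not values.get(key)]


if __name__ == "__main__":
    values = controller_values("demo-cluster", "us-east-1", "vpc-0123456789abcdef0")  # placeholders
    assert not missing_fargate_values(values)
    with open("lbc-values.json", "w") as f:
        json.dump(values, f, indent=2)
```

JSON is valid YAML, so the file could be passed with something like `helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system -f lbc-values.json`, assuming the chart repository has been added under the name `eks`.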

I monitored the Load Balancer Controller pods coming online, and once they were available and stable, I ran a command to check that the ingress had been assigned a DNS name. This indicated that the Load Balancer was ready to serve traffic to the application.
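The "wait until the ingress has a DNS name" check can also be scripted. This is a minimal Python sketch: `ingress_hostname` reads the `status.loadBalancer.ingress[].hostname` field that appears in the output of `kubectl get ingress <name> -n <namespace> -o json`, and `wait_for_hostname` polls until it shows up or a timeout expires. The timeout and polling interval are assumptions, and the fetch function is injectable so the loop can be tried without a cluster.

```python
import json
import subprocess
import time
from typing import Callable, Optional


def ingress_hostname(ingress: dict) -> Optional[str]:
    """Return the load balancer hostname from an Ingress object, if assigned yet."""
    entries = ingress.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in entries:
        if entry.get("hostname"):
            return entry["hostname"]
    return None


def kubectl_get_ingress(name: str, namespace: str) -> dict:
    """Fetch one Ingress as JSON via the kubectl CLI (requires a configured kubeconfig)."""
    result = subprocess.run(
        ["kubectl", "get", "ingress", name, "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


def wait_for_hostname(fetch: Callable[[], dict], timeout_s: float = 600.0,
                      interval_s: float = 15.0,
                      sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll fetch() until the Ingress reports a hostname, or raise TimeoutError."""
    deadline = time.monotonic() + timeout_s
    while True:
        hostname = ingress_hostname(fetch())
        if hostname:
            return hostname
        if time.monotonic() >= deadline:
            raise TimeoutError("ingress has no load balancer hostname yet")
        sleep(interval_s)
```

Against a real cluster, this could be called as `wait_for_hostname(lambda: kubectl_get_ingress("ingress-app-2048", "game-2048"))`, where both names are hypothetical.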

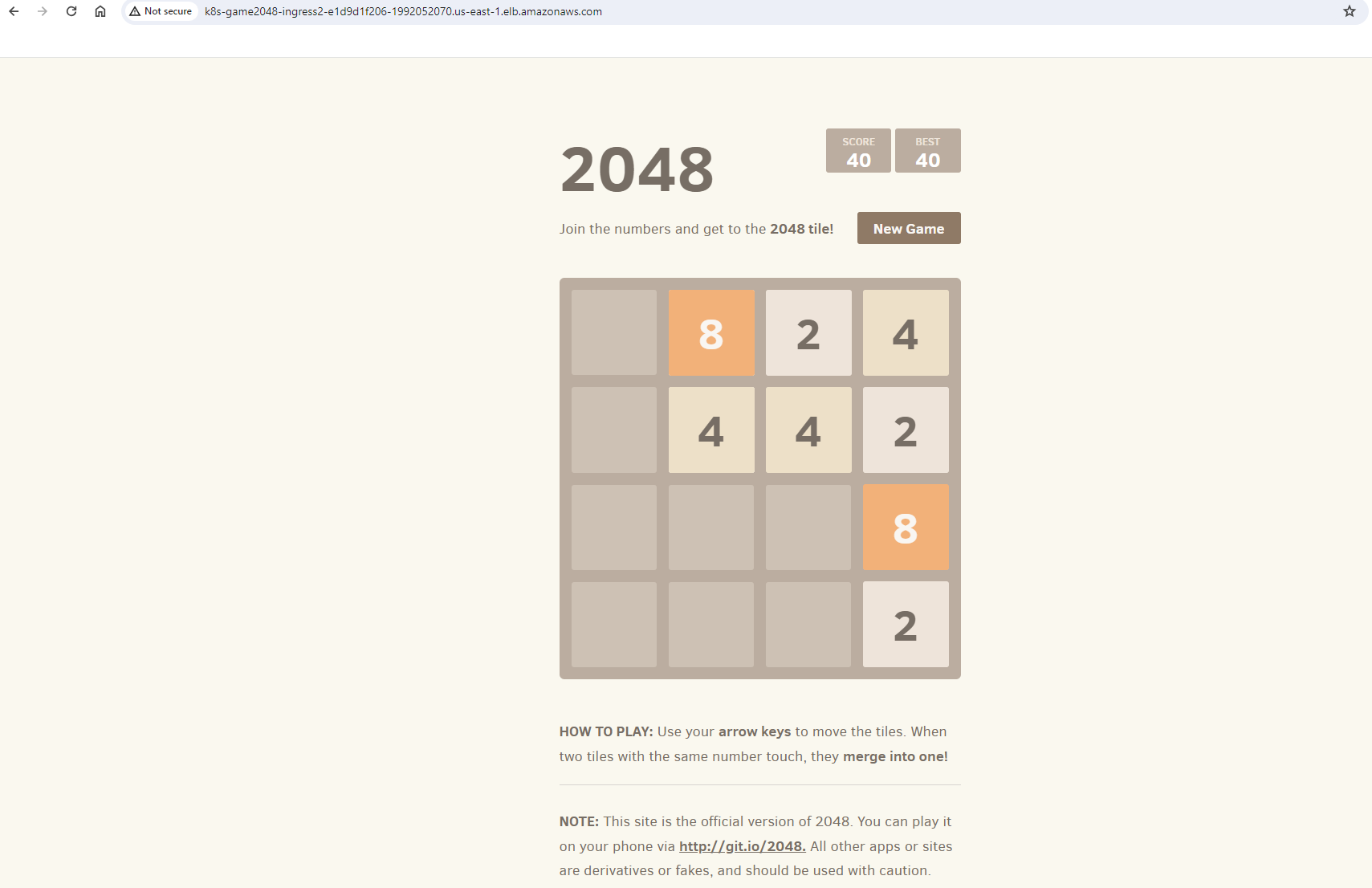

Finally, I loaded the game via the DNS name and had a go!

Once I had finished playing around with the new app, I was ready to tear it down again. To do this, I simply removed the Fargate profiles that had been created in my EKS cluster, deleted the Load Balancer, and then used CloudFormation to delete the VPC stack.
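The order of those teardown steps matters. The load balancer was created by the controller rather than by CloudFormation, so its network interfaces and security groups still sit inside the VPC, and deleting the VPC stack first can fail with dependency errors. A small Python sketch using the standard-library `graphlib` module makes that ordering explicit; the labels are just names for the three steps above, not real resource IDs.

```python
from graphlib import TopologicalSorter

# Each key may only be deleted after every item in its set has been deleted.
TEARDOWN_DEPENDENCIES: dict[str, set[str]] = {
    "vpc-stack": {"fargate-profiles", "load-balancer"},
}


def teardown_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return a deletion order that respects the dependencies (raises CycleError on cycles)."""
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for step, resource in enumerate(teardown_order(TEARDOWN_DEPENDENCIES), start=1):
        print(step, resource)
```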

Overall, given that I managed to get it running on the fifth attempt, I was pretty pleased with my perseverance in working with Kubernetes! I learned a huge amount from all of the troubleshooting. As the adage goes, “You learn the most from the times it didn’t go to plan!”

Some of the highlights…

CLI Setup:

Cluster create:

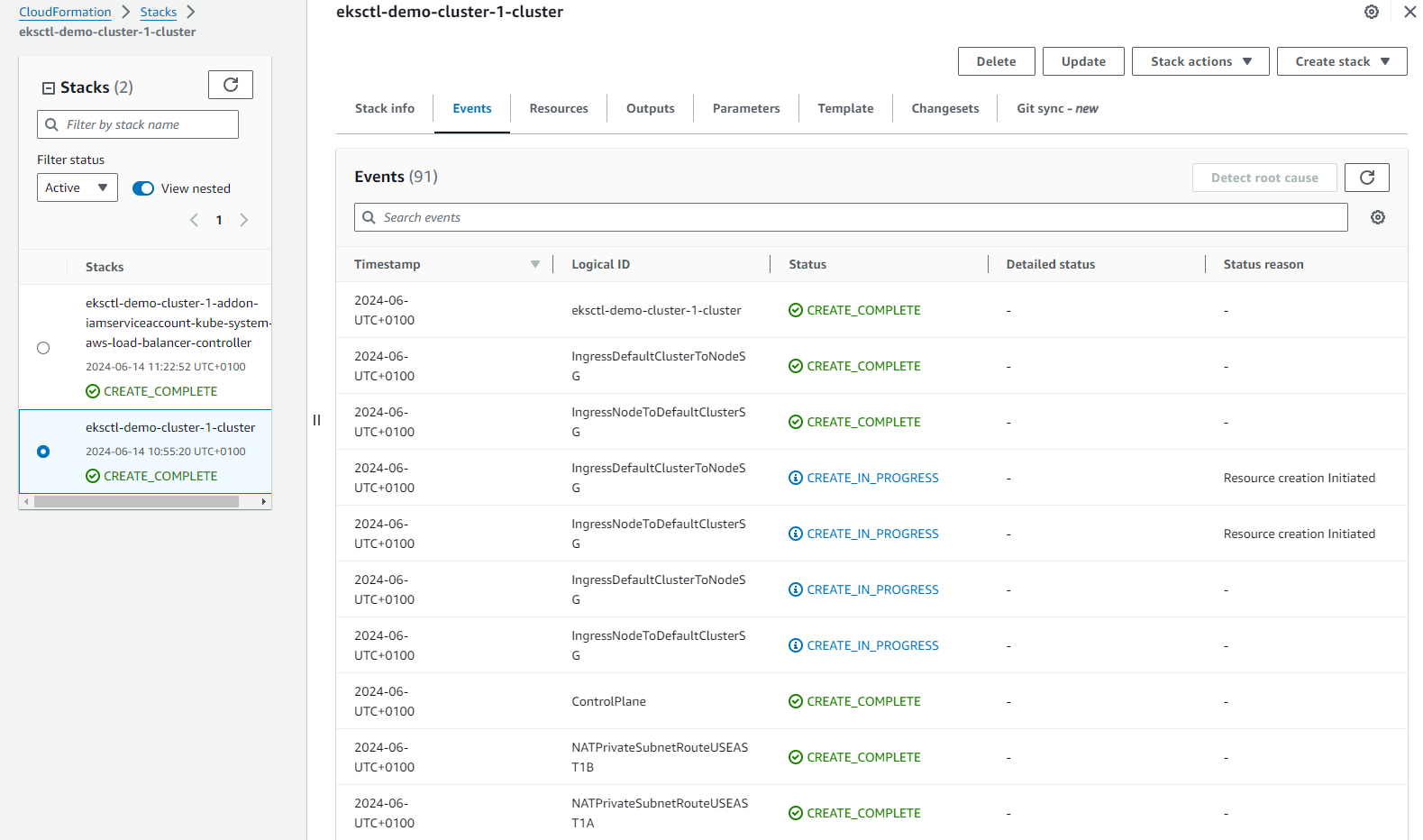

CloudFormation completion:

Cluster online:

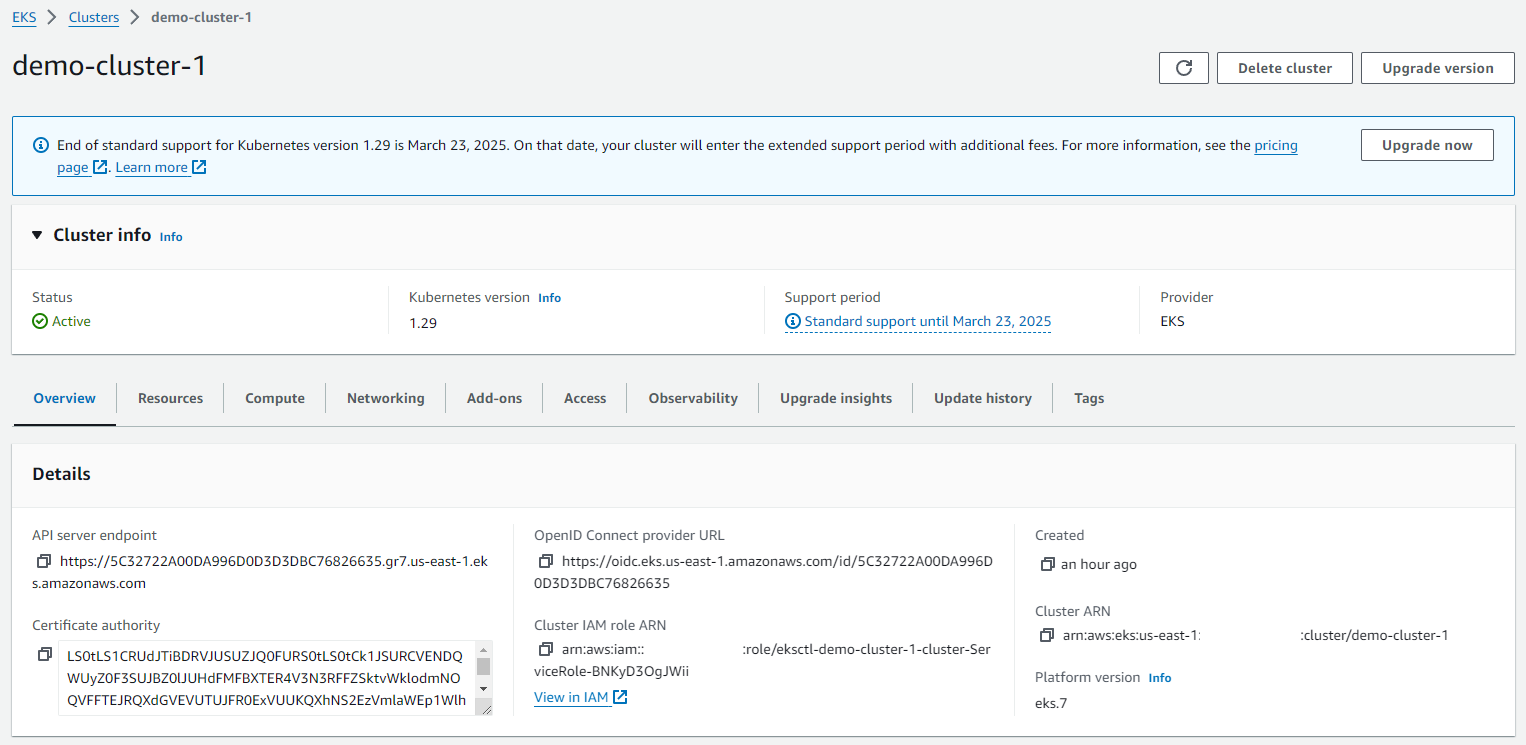

Cluster pods online:

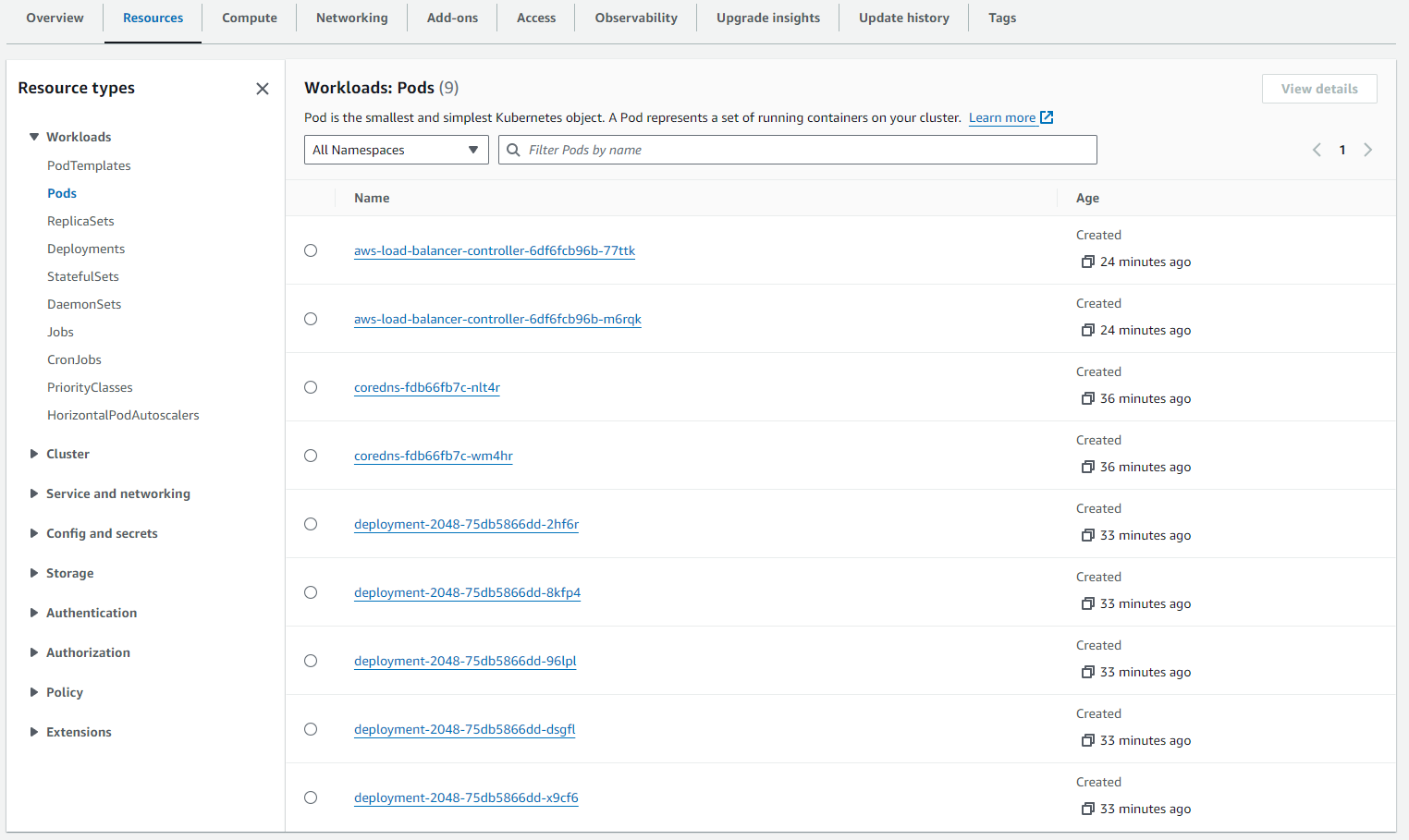

VPC:

Subnets:

Route tables:

Internet gateway:

NAT Gateway:

Security Groups:

NACLs:

Kubernetes config update:

Fargate Profile:

Fargate Profile GUI:

kubectl YAML config:

OIDC Connection:

IAM Policy creation:

Helm update:

IAM Role assign:

IAM Role GUI:

Load Balancer being deployed:

Load Balancer online:

Load Balancer resource map:

Load Balancer target groups:

Application working, first go!:

Best score!:

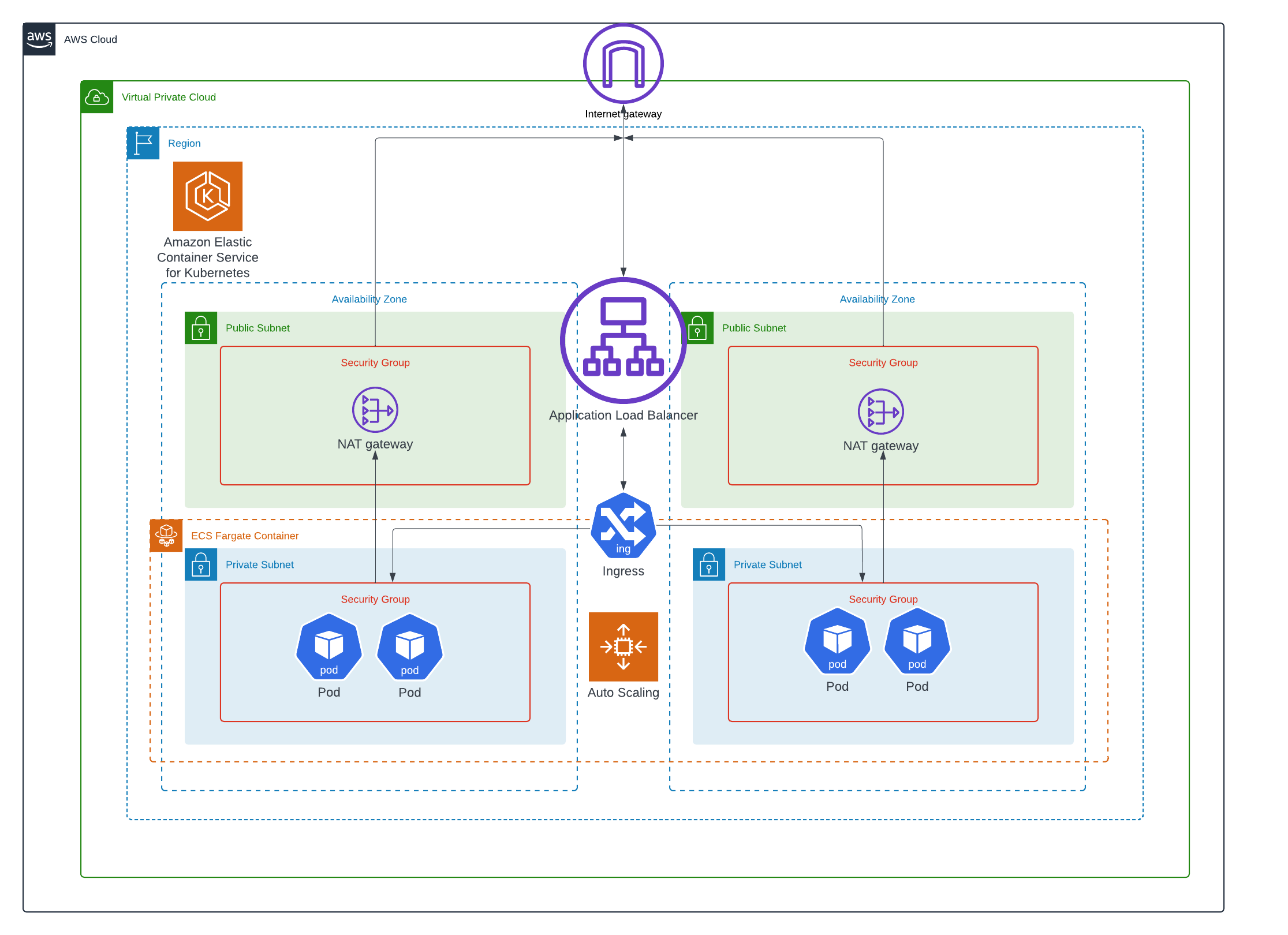

My interpretation of the architecture:

I hope you have enjoyed the article!