Troubleshooting an EKS Pod creation error - Persistent Volume Controller Failed Binding

Journey: 📊 Community Builder 📊

Subject matter: 💡 Troubleshooting 💡

Task: Troubleshooting an EKS Pod creation error - Persistent Volume Controller Failed Binding!

While working on a project using CloudFormation to build out an EKS Cluster and then mount an S3 bucket into a pod using the Container Storage Interface (CSI), I hit a problem where my pod would not come online, so I thought I would document the fix here.

Problem observed:

After creating the cluster successfully, I ran kubectl apply to create my EKS Pod. The YAML file needed three configuration items:

- A Persistent Volume (PV)

- A Persistent Volume Claim (PVC)

- The application pod itself (App)

Although everything was created successfully, the Pod was stuck in a Pending state and would not come online.

When running the command ‘kubectl get pod <POD_NAME>’, I observed the following:

Status: “Pending”

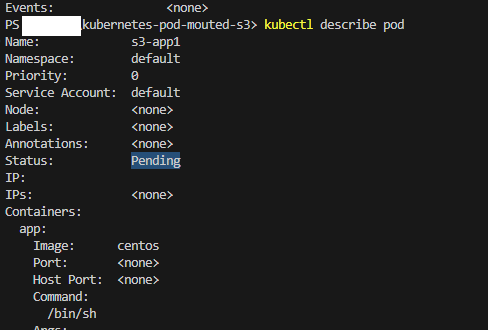

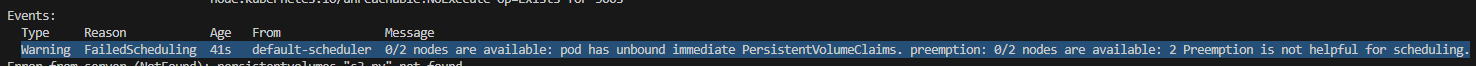

When running the command ‘kubectl describe pod <POD_NAME>’, I observed the following:

“default-scheduler 0/2 nodes are available: pod has unbound immediate PersistentVolumeClaims. preemption: 0/2 nodes are available: 2 Preemption is not helpful for scheduling.”

Investigations:

I started troubleshooting the issue and ended up reading what felt like 1,000 blog posts and Stack Overflow threads to try and fix it!

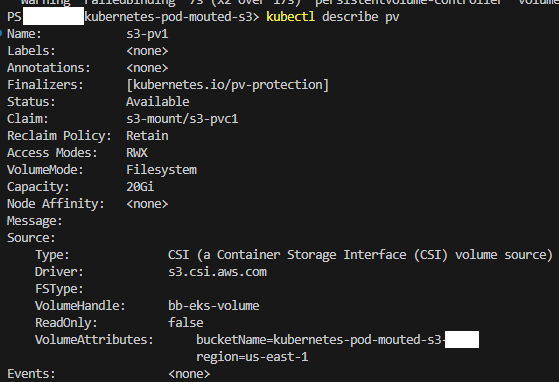

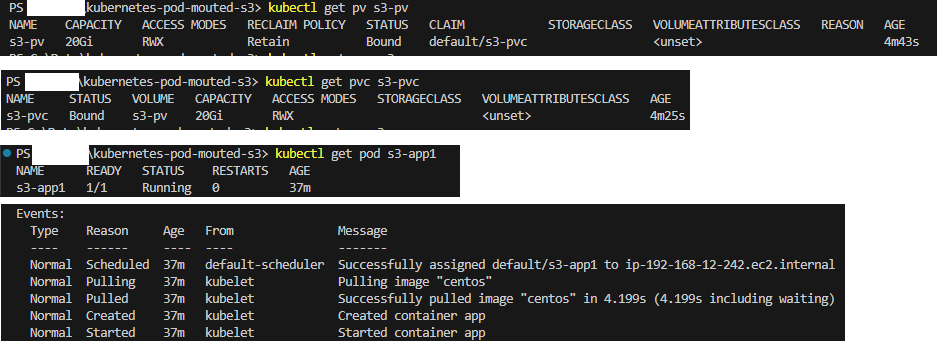

My Persistent Volume was online:

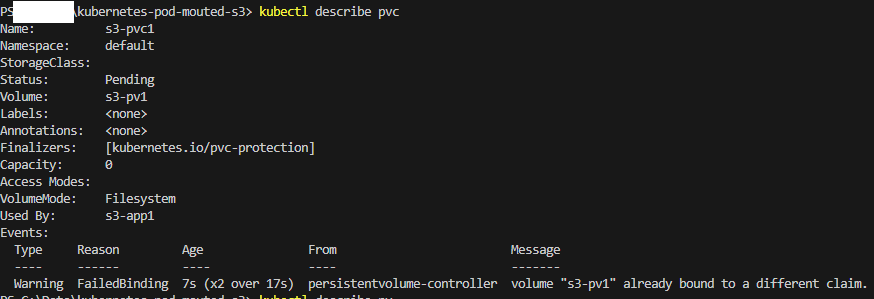

However, my Persistent Volume Claim was complaining about the Persistent Volume already being bound to a different claim.

“volume already bound to a different claim.”

The PV already considered itself claimed, because its config had referenced the PVC earlier in the same config set.

All of my config was sitting in one YAML file, so when I executed the kubectl apply command, it did everything in one go. I needed to split it into three YAML files to find where the problem started.

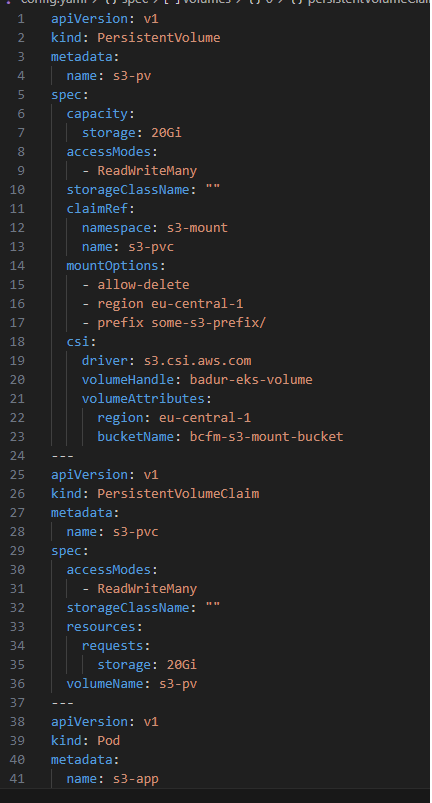

This is an example of what my file looked like:

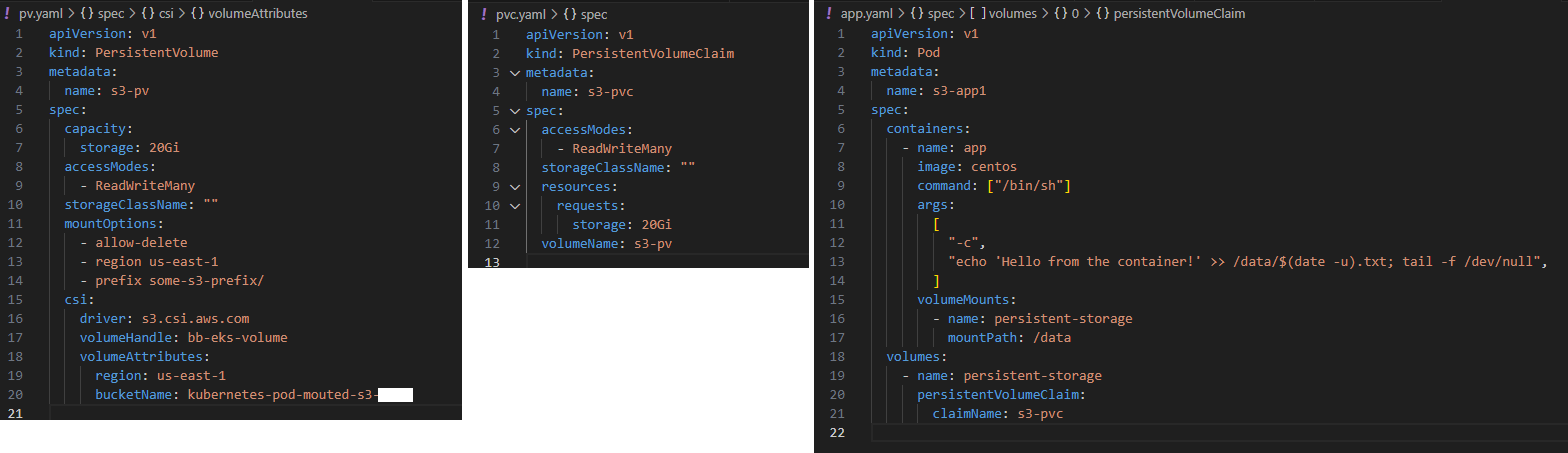
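The screenshot above is the authoritative version of the file. For readers who cannot see it, here is a rough text sketch of what a single-file layout of this kind can look like. It is not the exact file from this project: every object name, the namespace, the bucket name, the container image, and the mount path are placeholders assumed for illustration, and the CSI settings assume the Mountpoint for Amazon S3 CSI driver (`s3.csi.aws.com`) with static provisioning.

```yaml
# Hypothetical all-in-one manifest: PV, PVC, and Pod separated by '---'.
# All names and values below are illustrative placeholders.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: s3-pv
spec:
  capacity:
    storage: 1200Gi          # required by the API; not enforced for S3
  accessModes:
    - ReadWriteMany
  storageClassName: ""       # empty string: static provisioning, no StorageClass
  claimRef:                  # the kind of pre-binding entry discussed in this post
    namespace: default
    name: s3-pvc
  csi:
    driver: s3.csi.aws.com
    volumeHandle: s3-csi-driver-volume
    volumeAttributes:
      bucketName: my-example-bucket   # placeholder bucket name
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: s3-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  resources:
    requests:
      storage: 1200Gi
  volumeName: s3-pv          # asks for the PV above by name
---
apiVersion: v1
kind: Pod
metadata:
  name: s3-app
  namespace: default
spec:
  containers:
    - name: app
      image: busybox         # placeholder image
      command: ["sh", "-c", "sleep 3600"]
      volumeMounts:
        - name: s3-storage
          mountPath: /data   # placeholder mount path
  volumes:
    - name: s3-storage
      persistentVolumeClaim:
        claimName: s3-pvc
```

Applying a file like this with a single kubectl apply creates all three objects in one pass, which is why it is hard to see which of them is responsible when the Pod stays in Pending.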

I made three YAML files, one each for the PV, the PVC, and the App, and split the config between them. This helped me a lot!

Cue what seemed like hours of reading through blog after blog to better understand the nature of the problem, and I finally cracked it!

Fix required:

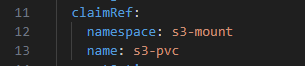

After breaking it all down and running each file manually, I realised that the ‘claimRef:’ block seemed to be preventing my PVC from binding to the PV, so I removed the claimRef config element.

I deleted my Persistent Volume, Persistent Volume Claim, and App, then recreated them by applying one file at a time, and it all came online!
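As a hedged sketch of that end state, a PV with no claimRef block might look like the following. The file names, object names, and bucket are placeholders rather than values taken from the screenshots. The sketch assumes the Mountpoint for Amazon S3 CSI driver (`s3.csi.aws.com`) with static provisioning, and it assumes the PVC selects this PV through `volumeName`, which the post does not show.

```yaml
# pv.yaml (hypothetical file name): the PV with the claimRef block removed.
#
# Assumed workflow, one object at a time:
#   kubectl delete pod <POD_NAME>
#   kubectl delete pvc <PVC_NAME>
#   kubectl delete pv <PV_NAME>
#   kubectl apply -f pv.yaml
#   kubectl apply -f pvc.yaml    # hypothetical file holding only the PVC
#   kubectl apply -f app.yaml    # hypothetical file holding only the Pod
apiVersion: v1
kind: PersistentVolume
metadata:
  name: s3-pv                # placeholder name
spec:
  capacity:
    storage: 1200Gi          # required by the API; not enforced for S3
  accessModes:
    - ReadWriteMany
  storageClassName: ""       # empty string: static provisioning, no StorageClass
  # No claimRef here: binding is driven by the PVC, assumed to set
  # 'volumeName: s3-pv' in its own spec.
  csi:
    driver: s3.csi.aws.com
    volumeHandle: s3-csi-driver-volume
    volumeAttributes:
      bucketName: my-example-bucket   # placeholder bucket name
```

A claimRef can still be used to pre-bind a PV to one claim, but its name and namespace then have to match the PVC exactly. A `uid` left over from a claim that has since been deleted and recreated is one known way to trigger the "already bound to a different claim" message. Whether that was the cause in this case is not something the post confirms.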

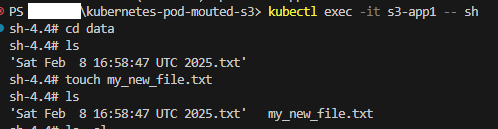

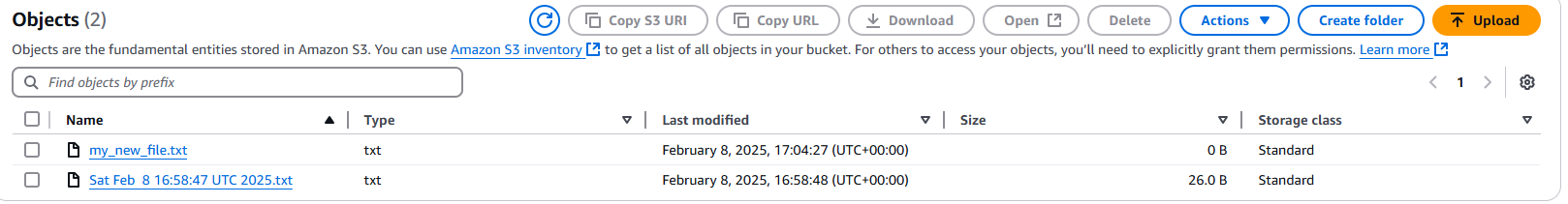

After the pod became accessible, I was able to continue my project plan by opening an SSH session and creating a new file. This file was delivered straight to my S3 bucket, which was mounted directly into my EKS pod using the S3 CSI driver.

If you are interested, you can see that project documented here: https://www.barnybaron.com/gallery/project-kubernetes-pod-mounted-s3/.

If this post helps just one other person, it has served its purpose well!